World Gone Mad - Computer Vision: The Universal Interface

Written by Harry Fairhead

Sunday, 15 November 2020

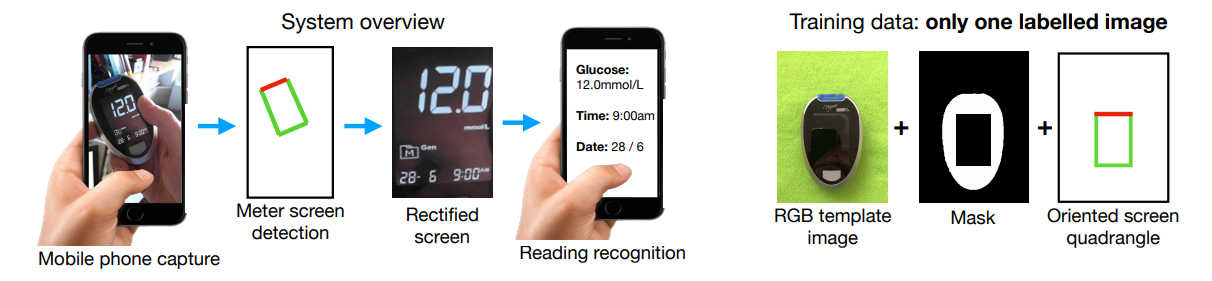

This is a good story: a new app makes it easier to monitor diabetes. But it is the way it has been achieved that should give us cause for thought. It reads data from a glucose meter, but you can forget Bluetooth or Wi-Fi; it uses computer vision to read the display just like a human.

Long ago, in a galaxy far, far away, I set an IoT problem to a class of exceptional students: the task was to read a meter. The meter had no standard interface, just an LED display. The solution I expected was to open the case, connect to the outputs of the LED display drivers and decode the digits. This was the solution I got in all but one case. One adventurous programmer decided that software was better than hardware and wrote a program that read the meter from a video feed of its display. It was hardly computer vision: it simply looked for changes in brightness along fixed scan lines to work out which segments of the LED display were lit. Is this a pass or a fail? Is it an exceptionally clever solution or a failure to understand how things actually work?

Researchers at the University of Cambridge have done more or less the same thing, only this time the engineering argument is much stronger. Older meters don't have interfaces and, if they do, they are often proprietary. Opening up the device to make a connection isn't an option either, and so computer vision comes to the rescue:

"The app uses computer vision techniques to read and record the glucose levels, time and date displayed on a typical glucose test via the camera on a mobile phone. The technology, which doesn’t require an internet or Bluetooth connection, works for any type of glucose meter, in any orientation and in a variety of light levels. It also reduces waste by eliminating the need to replace high-quality non-Bluetooth meters, making it a cost-effective solution to the NHS."
When you add the fact that it is usually difficult for third-party software to connect to medical devices, it makes even more sense. Computer vision as a universal device interface isn't an obvious idea, but it isn't as silly as it used to be, given the processing power most people carry in their pockets and the fact that video input is now commonplace. Perhaps you could say that it's all the fault of the mobile phone for making it possible to bring machine learning to such mundane tasks.
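The student's scan-line trick is simple enough to sketch. The Python below is a minimal illustration under stated assumptions, not the student's actual program (which isn't shown) and not the Cambridge method, which uses a trained neural network. It assumes the camera is fixed, so the pixel positions crossing each segment of each digit are known in advance; the geometry in `EXAMPLE_SCAN_LINES`, the brightness threshold of 128 and all the function names are hypothetical. Each segment is judged lit or unlit from the mean brightness along a short scan line across it, and the resulting seven on/off values are looked up in a table of standard seven-segment digit patterns.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Frame = List[List[int]]   # grayscale image indexed frame[y][x], values 0..255
Point = Tuple[int, int]   # (x, y) pixel coordinate
ScanLines = Dict[str, Sequence[Point]]

# Segment names: a=top, b=upper right, c=lower right, d=bottom,
# e=lower left, f=upper left, g=middle.
SEGMENT_NAMES = "abcdefg"

# Segments lit for each digit on a standard seven-segment display.
DIGIT_SEGMENTS = {
    0: "abcdef", 1: "bc", 2: "abdeg", 3: "abcdg", 4: "bcfg",
    5: "acdfg", 6: "acdefg", 7: "abc", 8: "abcdefg", 9: "abcdfg",
}

# Reverse lookup: on/off tuple in SEGMENT_NAMES order -> digit.
DIGIT_PATTERNS = {
    tuple(int(s in segs) for s in SEGMENT_NAMES): digit
    for digit, segs in DIGIT_SEGMENTS.items()
}


def segment_is_lit(frame: Frame, scan_line: Sequence[Point], threshold: int) -> bool:
    """Treat a segment as lit if the mean brightness along its scan line exceeds threshold."""
    total = sum(frame[y][x] for x, y in scan_line)
    return total / len(scan_line) > threshold


def read_digit(frame: Frame, scan_lines: ScanLines, threshold: int = 128) -> Optional[int]:
    """Decode one digit; returns None if the lit segments match no digit."""
    pattern = tuple(int(segment_is_lit(frame, scan_lines[s], threshold)) for s in SEGMENT_NAMES)
    return DIGIT_PATTERNS.get(pattern)


def shifted(scan_lines: ScanLines, dx: int) -> ScanLines:
    """Move a digit's scan lines dx pixels to the right (the next digit position)."""
    return {s: [(x + dx, y) for x, y in pts] for s, pts in scan_lines.items()}


def read_number(frame: Frame, layouts: Sequence[ScanLines], threshold: int = 128) -> Optional[str]:
    """Read every digit position; reject the whole reading if any digit is unrecognised."""
    digits = []
    for layout in layouts:
        digit = read_digit(frame, layout, threshold)
        if digit is None:
            return None
        digits.append(str(digit))
    return "".join(digits)


# Hypothetical geometry for one digit in a 5x9 pixel cell: a few pixels
# across the middle of each segment. A real rig would calibrate these
# once against its fixed camera position.
EXAMPLE_SCAN_LINES: ScanLines = {
    "a": [(x, 0) for x in range(1, 4)],
    "b": [(4, y) for y in range(1, 4)],
    "c": [(4, y) for y in range(5, 8)],
    "d": [(x, 8) for x in range(1, 4)],
    "e": [(0, y) for y in range(5, 8)],
    "f": [(0, y) for y in range(1, 4)],
    "g": [(x, 4) for x in range(1, 4)],
}
DIGIT_HEIGHT, DIGIT_PITCH = 9, 7   # cell height and horizontal spacing in pixels


def render_number(text: str, background: int = 20, lit_level: int = 240) -> Frame:
    """Draw a synthetic display image showing `text` (digits only), for testing."""
    frame = [[background] * (DIGIT_PITCH * len(text)) for _ in range(DIGIT_HEIGHT)]
    for i, ch in enumerate(text):
        layout = shifted(EXAMPLE_SCAN_LINES, DIGIT_PITCH * i)
        for seg in DIGIT_SEGMENTS[int(ch)]:
            for x, y in layout[seg]:
                frame[y][x] = lit_level
    return frame


if __name__ == "__main__":
    layouts = [shifted(EXAMPLE_SCAN_LINES, DIGIT_PITCH * i) for i in range(3)]
    print(read_number(render_number("583"), layouts))  # → 583
```

Returning `None` for an unrecognised segment pattern, rather than guessing, is a deliberate choice in this sketch: for a medical reading, a rejected frame is better than a wrong number. The fixed geometry is also exactly what makes the approach brittle, since it breaks as soon as the camera or the meter moves. Locating the display first, in any orientation, is the part the Cambridge system has to solve.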

I've also always said that programmers, and engineers in general, are good at solving problems that affect them personally. Dr James Charles from Cambridge’s Department of Engineering admitted: “From a purely selfish point of view, this was something I really wanted to develop.” He has Type 1 diabetes and needs to take ten or so readings every day. Now he no longer has to transfer the data by hand.

The software first finds the display screen in the scene and then uses a neural network, LeDigit, to recognize the individual digits. The clever part is the one-shot learning used to find the screen on a new device: you show the app the new device and it locates the screen where the digits will be displayed. What is more, the technique has been trained and tested not only on glucose meters but also on temperature and weight meters and on multimeters. This is a general app capable of doing much more than the important job it was designed for. As the paper puts it:

"...we [built] a CNN based system which runs in real-time on mobile device with very high read accuracy (close to 100%). Our contributions include (i) introduction of an exciting new application domain, (ii) a method of training from purely synthetic data by reducing domain shift using a surprisingly simple approach which unlike adversarial training based methods does not even require unlabelled data; (iii) a highly accurate system for parsing digital meter screens and (iv) release of a new screen reading dataset. The system, although trained solely on synthetic data, transfers very well to the real-world. Our method of screen detection and text recognition also improves over the state of the art on our dataset."

More Information

Real-time screen reading: reducing domain shift for one-shot learning, James Charles, Stefano Bucciarelli and Roberto Cipolla


Last Updated (Sunday, 15 November 2020)