CodeSearchNet Challenge To Improve Semantic Code Search

Written by Sue Gee

Friday, 27 September 2019

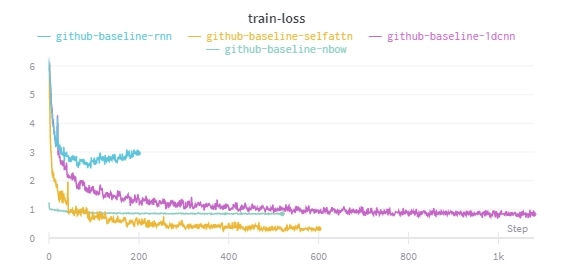

As developers we are only too aware of the difficulty of finding relevant code using a natural language query. Now GitHub has released a large dataset of code and natural language comments with the aim of furthering research on semantic code search, together with a CodeSearchNet Challenge leaderboard to encourage participation.

As explained on its GitHub repo, CodeSearchNet is a collection of datasets and benchmarks that explore the problem of code retrieval using natural language. It is a collaboration between GitHub and the Deep Program Understanding group at Microsoft Research - Cambridge. The researchers, Hamel Husain, Ho-Hsiang Wu, Tiferet Gazit, Miltiadis Allamanis and Marc Brockschmidt, explain the background to the initiative and give a full account of how the dataset was created in an arXiv paper.

By way of introduction the researchers point out that while deep learning has fundamentally changed how we approach perceptive tasks such as image and speech recognition and has shown substantial successes in working with natural language data, this is difficult to extend to searching code. They note:

Standard information retrieval methods do not work well in the code search domain, as there is often little shared vocabulary between search terms and results (e.g. consider a method called deserialize_JSON_obj_from_stream that may be a correct result for the query “read JSON data”). Even more problematic is that evaluating methods for this task is extremely hard, as there are no substantial datasets that were created for this task; instead, the community tries to make do with small datasets from related contexts (e.g. pairing questions on web forums to code chunks found in answers).

To be able to apply deep learning techniques to semantic code search, the CodeSearchNet Corpus was programmatically obtained by scraping open-source repositories and pairing individual functions with their (processed) documentation as natural language annotation.
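As a rough illustration of what pairing functions with their documentation can look like, here is a minimal Python sketch using the standard library `ast` module. It is not the CodeSearchNet pipeline: the helper name `extract_pairs`, the sample source, and the choice to keep only the first docstring line as the "processed" documentation are all assumptions made for this example.

```python
import ast
from typing import List, Tuple


def extract_pairs(source: str) -> List[Tuple[str, str]]:
    """Return (documentation summary, function source) pairs.

    Hypothetical helper: only functions that carry a docstring are kept,
    and the docstring is reduced to its first line (an assumed form of
    "processing", chosen purely for illustration).
    """
    pairs = []
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            doc = ast.get_docstring(node)
            if not doc:
                continue  # undocumented functions cannot form a pair
            summary = doc.strip().splitlines()[0]
            code = ast.get_source_segment(source, node) or ""
            pairs.append((summary, code))
    return pairs


# Made-up sample input: one documented function, one undocumented.
SAMPLE = '''
def read_json(stream):
    """Read JSON data from a stream.

    A longer description that this sketch discards.
    """
    import json
    return json.load(stream)


def helper(x):
    return x + 1
'''

pairs = extract_pairs(SAMPLE)
for summary, code in pairs:
    print(summary)
    print(code)
```

Here the natural language side of the pair comes from the docstring and the code side from the function body, so a search model could be trained to map one onto the other. A real corpus builder would also need language-specific parsers, filtering and deduplication, none of which is attempted here.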
As outlined in the GitHub repo the primary dataset consists of 2 million (

The paper also explains that, for the purposes of the CodeSearchNet Challenge, a set of realistic queries and expert annotations for likely results has been defined. This consists of 99 natural language queries paired with likely results for each of the six programming languages, in which each query/result pair was labeled by a human expert, indicating the relevance of the result for the query.

The Challenge uses a Weights & Biases benchmark to track and compare models trained on this dataset. As well as a leaderboard you can see model comparisons and details of the run sets.

Announcing the CodeSearchNet Challenge on the GitHub blog, Hamel Husain reiterates how it is relevant to developers and their day-to-day experience:

Searching for code to reuse, call into, or to see how others handle a problem is one of the most common tasks in a software developer’s day. However, search engines for code are often frustrating and never fully understand what we want, unlike regular web search engines. We started using modern machine learning techniques to improve code search but quickly realized that we were unable to measure our progress.

Having shared this post on Hacker News he notes there:

The reason we are excited to host this data is that we believe the community will be able to innovate and advance the state of the art much faster if it is provided in a tractable format for machine learning researchers.

Another comment on Hacker News points out that one benefit of the Challenge (which currently has no incentives other than "let's drive the field forward") is the degree of transparency it offers.
According to poster mloncode:

You can see:

- All the system logging information including CPU/GPU utilization, with the runtime and type of GPU card used
- Extensive logging of model training progression
- All of the model artifacts and metadata
- A link to the code on GitHub with the code that ran that data.
- Anything emitted to stdout (for logging)
- etc.

This allows for extreme reproducibility and insight that is very helpful. With these tools, the community can see if an "ensemble everything" method is used and how long the model takes to train and what resources are consumed, etc.

While it will be interesting to see results from the machine learning community, as jobbing developers we hope the challenge solves, or at least ameliorates, the underlying problems of semantic code search.

Picture credit: GitHub blog

More Information

- Introducing the CodeSearchNet challenge
- CodeSearchNet Challenge: Evaluating the State of Semantic Code Search by Hamel Husain, Ho-Hsiang Wu, Tiferet Gazit, Miltiadis Allamanis, Marc Brockschmidt
- CodeSearchNet Weights & Biases benchmark

Related Articles

- Bing Adds Intelligent Code Search
- Google Now Helps You Search For || And More
- Google Search Goes Semantic - The Knowledge Graph
- Facebook Open Sources Natural Language Processing Model
- Rule-Based Matching In Natural Language Processing
- Microsoft Expands Cognitive Services APIs



Last Updated (Friday, 27 September 2019)