Put On Your Dancing Shoes With AI

Written by David Conrad

Saturday, 25 August 2018

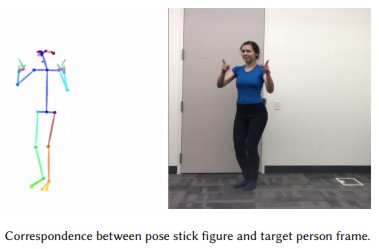

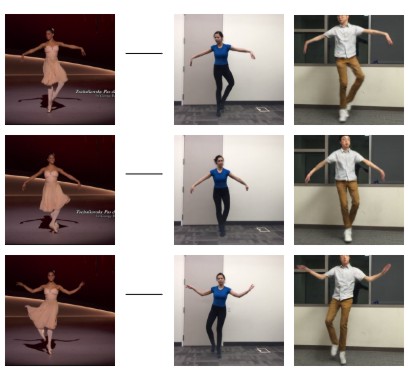

Using a deep learning based algorithm, researchers have produced videos in which untrained amateurs appear to have mastered the dance moves of pop stars, perform martial arts kicks, and spin and twirl as gracefully as ballerinas. Caroline Chan, Shiry Ginosar, Tinghui Zhou and Alexei A. Efros at UC Berkeley have come up with a method for "do as I do" motion transfer. It takes two videos. One shows a target person performing standard moves, who will later appear to be performing feats well beyond their capability. The other shows a source subject whose motion is to be imposed on the target person. Motion is transferred between these subjects via an end-to-end pixel-based pipeline.

Transferring motion between the source and target subjects frame by frame requires a mapping between images of the two individuals. However, there are no corresponding pairs of images of the two subjects performing the same motions that could be used to supervise learning this translation directly. Even if both subjects performed the same routine, there would not be an exact frame-to-frame correspondence in body pose, because of the differences in body shape and style unique to each subject. Instead, keypoint-based pose, which encodes body position but not appearance, can serve as an intermediate representation between any two subjects, and stick figures were used for this purpose. Pose detections were obtained for each frame of the target video, yielding a set of corresponding (pose stick figure, target person image) pairs. This aligned data was sufficient for learning, in a supervised way, an image-to-image translation model that maps pose stick figures to images of the target person. Then, to transfer motion from source to target, the pose stick figures extracted from the source video were input into the trained model to obtain images of the target subject in the same poses as the source.
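The role of the stick figure as an intermediate representation can be illustrated with a minimal, stdlib-only Python sketch. It is not the researchers' code: the simplified skeleton in `BONES`, the joint names, and the callables `detect_pose` and `generator` are hypothetical placeholders for a real pose detector and a trained image-to-image translation network. Poses are assumed to be dicts mapping joint names to integer (x, y) pixel positions, and images are plain lists of lists.

```python
from typing import Callable, Dict, List, Sequence, Tuple, TypeVar

Point = Tuple[int, int]   # (x, y) pixel coordinates
Pose = Dict[str, Point]   # joint name -> detected keypoint
Image = List[List[int]]   # height x width grid of 0/1 pixels
T = TypeVar("T")          # a video frame, in whatever form the detector takes

# Hypothetical simplified skeleton; real pose detectors define their own keypoints.
BONES = [
    ("head", "neck"), ("neck", "l_hand"), ("neck", "r_hand"),
    ("neck", "hip"), ("hip", "l_foot"), ("hip", "r_foot"),
]


def draw_line(canvas: Image, p0: Point, p1: Point) -> None:
    """Rasterise the segment p0-p1 onto canvas with Bresenham's algorithm."""
    (x0, y0), (x1, y1) = p0, p1
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        if 0 <= y0 < len(canvas) and 0 <= x0 < len(canvas[0]):
            canvas[y0][x0] = 1  # pixels outside the image are clipped
        if (x0, y0) == (x1, y1):
            break
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def render_stick_figure(pose: Pose, width: int, height: int) -> Image:
    """Encode body position, but not appearance, as a stick-figure image."""
    canvas = [[0] * width for _ in range(height)]
    for a, b in BONES:
        if a in pose and b in pose:  # skip bones with an undetected joint
            draw_line(canvas, pose[a], pose[b])
    return canvas


def build_training_pairs(
    target_frames: Sequence[T], detect_pose: Callable[[T], Pose],
    width: int, height: int,
) -> List[Tuple[Image, T]]:
    """Pair every target frame with the stick figure of its own detected pose."""
    return [(render_stick_figure(detect_pose(f), width, height), f)
            for f in target_frames]


def transfer(
    source_frames: Sequence[T], detect_pose: Callable[[T], Pose],
    generator: Callable[[Image], object], width: int, height: int,
) -> List[object]:
    """Render the source poses as stick figures and run the trained generator."""
    return [generator(render_stick_figure(detect_pose(f), width, height))
            for f in source_frames]
```

Training would fit `generator` so that it maps each stick figure in the pairs back to its frame. At transfer time only the poses come from the source video, so whatever appearance the generator has learned belongs to the target person.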
Two extra components improve the quality of the results. For temporal smoothness of the generated videos, the prediction at each frame was conditioned on the prediction at the previous time step. To increase facial realism, a specialized GAN trained to generate the target person's face was included.

The researchers concluded: "Our method produces videos where motion is transferred between a variety of video subjects without the need for expensive 3D or motion capture data. Our main contributions are a learning-based pipeline for human motion transfer between videos, and the quality of our results which demonstrate complex motion transfer in realistic and detailed videos. We also conduct an ablation study on the components of our model comparing to a baseline framework."

Watch the video, Everybody Dance Now, right to the end to see the target subjects performing moves that ballerinas train half a lifetime to master.

This is yet another example of style transfer, something AI is being applied to in multiple realms, as we noted only last week in More Efficient Style Transfer Algorithm.
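The temporal-smoothness component feeds each generated frame back into the generator as context for the next one. The loop below is a minimal Python sketch of that data flow, not the published implementation. `generator` stands in for a trained network, and the toy generator in the usage example (an even blend of the current pose value and the previous output, with scalars standing in for images) is purely hypothetical, chosen only to make the smoothing visible.

```python
from typing import Callable, List, Sequence, TypeVar

P = TypeVar("P")  # pose input, e.g. a stick-figure image
F = TypeVar("F")  # generated frame


def generate_sequence(
    poses: Sequence[P],
    generator: Callable[[P, F], F],
    blank: F,
) -> List[F]:
    """Generate frames in order, conditioning each on the previous output."""
    outputs: List[F] = []
    previous = blank  # nothing precedes the first frame, so start from a blank
    for pose in poses:
        frame = generator(pose, previous)
        outputs.append(frame)
        previous = frame  # the new frame becomes context for the next step
    return outputs


def toy_generator(pose: float, previous: float) -> float:
    """Hypothetical stand-in for a trained network: blend pose and history."""
    return 0.5 * pose + 0.5 * previous


if __name__ == "__main__":
    print(generate_sequence([0.0, 1.0, 0.0, 1.0], toy_generator, 0.0))
    # prints [0.0, 0.5, 0.25, 0.625]
```

The input jumps between 0 and 1 on every frame, while the output changes by at most 0.5 per frame because each step also sees its predecessor. A learned generator has no fixed blending rule like this; the toy only shows how the previous prediction is threaded through the loop.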

More Information

Everybody Dance Now by C Chan et al.

Related Articles

More Efficient Style Transfer Algorithm

Style Transfer Applied To Cooking - The Case Of The French Sukiyaki


Last Updated (Sunday, 14 June 2020)