Introduction To Kinect

Written by Harry Fairhead

Page 3 of 4

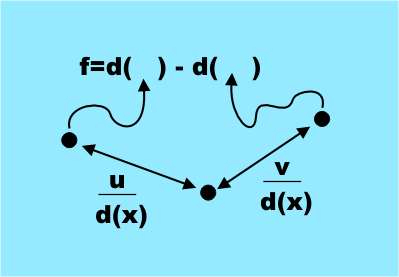

The Microsoft SDK

After an initially cool reaction to the open source drivers, Microsoft realized that it had a big hit on its hands and decided to produce first an SDK, which could be used with the existing Kinect hardware, and a little later a special Kinect tailored for use with a PC for more experimental purposes.

The Microsoft Kinect SDK has a number of drawbacks compared to the open source drivers and middleware from PrimeSense. The first is that the SDK only works with Windows 7 and Windows 8. You can't use it to develop applications under Linux or on the Mac. Also it isn't open source, so you can't look under the hood to find out how it works or modify it to do what you want.

There may be some negatives but there are also a number of positives. The first is that, unlike the open source drivers, the SDK drivers are complete and plug-and-play. They are easy to use. In addition they give you access to the raw data streams and the audio capabilities. As well as providing color and depth data, the Kinect has an array of microphones that can be used for speech input and other advanced audio processing. So far the audio capabilities of the Kinect have mostly been ignored by experimenters - perhaps because of the lack of support for them in the open source drivers.

Another really good reason for using the Microsoft SDK is that it provides a much better skeleton recognition and tracking algorithm than you will find in the alternatives, including PrimeSense's NITE software. So if you intend to use player tracking then the Microsoft SDK does it best.

Finally you can choose to write your programs in C#, Visual Basic .NET or C++, using either the full Visual Studio IDE or the free Express editions. In this book all of the examples are in C# - an edition using C++ is in preparation. The reason for choosing C# is that, while it isn't as fast as C++, it is easier to use and has access to a lot of easy-to-use controls and class libraries.
Tracking People and Objects

So how does Kinect track people? There are two answers to this question: the old way and the new way designed by Microsoft Research. The new Microsoft way of doing things has significant advantages, but at the moment only Microsoft has access to its implementation. However, Microsoft has built the same algorithm into the SDK, so you can use it, but you can't modify it.

Until the SDK became available the most used body tracking software was NITE from PrimeSense. This isn't open source but you can use it via a licence key that is provided at the OpenNI website. It works in the way that all body tracking software worked until Microsoft found a better way, so let's look briefly at its principles of operation, as this is the sort of method you will have to implement to track more general objects.

The NITE software takes the raw depth map and finds a skeleton that shows the body position of the subject. It does this by segmenting the depth map into objects and then tracking the objects as they move from frame to frame. This is achieved by constructing an avatar, i.e. a model of the body that is being detected, and attempting to find a match in the data provided by the depth camera. Tracking is a matter of updating the match by moving the avatar as the data changes.

This was the basis of the first Kinect software that Microsoft tried out and it didn't work well enough for a commercial product. After about a minute or so it tended to lose the track and then not be able to recover it. It also had the problem that it only worked for people who were the same size and shape as the system's developer - because that was the size and shape of the avatar used for matching.

Body recognition using the SDK

Microsoft Research has recently published a scientific paper and a video showing how the Kinect body tracking algorithm works - it's almost as amazing as some of the uses the Kinect has been put to!
There are a number of different components that make Kinect a breakthrough. Its hardware is well designed and does the job at an affordable price. However, once you have stopped being amazed by the fast depth measuring hardware, your attention has to fall on the way that it does body tracking. In this case the hero is a fairly classical pattern recognition technique, but implemented with style.

So what did Microsoft Research do about the problem to make the Kinect work so much better? They went back to first principles and decided to build a body recognition system that didn't depend on tracking but located body parts based on a local analysis of each pixel.

Traditional pattern recognition works by training a decision making structure from lots of examples of the target. In order for this to work you generally present the classifier with lots of measurements of "features" which you hope contain the information needed to recognise the object. In many cases designing the features to be measured is the difficult part.

The features that were used might surprise you in that they are simple and it is far from obvious that they do contain the information necessary to identify body parts. The features are all based on a simple formula:

$$f = d\left(x + \frac{u}{d(x)}\right) - d\left(x + \frac{v}{d(x)}\right)$$

where u and v are a pair of displacement vectors and d(x) is the depth, i.e. the distance from the Kinect, of the pixel at x. This is a very simple feature: it is just the difference in depth between two pixels offset from the target pixel by u and v.

The only complication is that each offset is scaled by the distance of the target pixel, i.e. divided by d(x). This makes the offsets depth independent and scales them with the apparent size of the body. It is clear that these features measure something to do with the 3D shape of the area around the pixel - whether they are sufficient to tell the difference between, say, an arm and a leg is another matter.
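To make the depth scaling concrete, here is a minimal Python sketch of the feature, not the SDK's implementation. It assumes the depth map is a list of rows holding distances in metres, that pixel positions and offsets are (row, column) pairs, and that a probe falling outside the image returns a large constant `far`; the function names and the synthetic square "body" are hypothetical and used only for illustration.

```python
def depth_feature(depth, x, u, v, far=10.0):
    """Compute f = d(x + u/d(x)) - d(x + v/d(x)) for the pixel at x.

    depth : list of rows of depth values (assumed to be in metres)
    x     : (row, col) of the target pixel
    u, v  : (row, col) displacement vectors, divided by d(x) before use
    far   : value returned for probes outside the image (an assumption)
    """
    row, col = x
    d_x = depth[row][col]  # depth at the target pixel

    def probe(offset):
        # Scale the offset by 1/d(x) so it shrinks as the body moves away.
        r = row + round(offset[0] / d_x)
        c = col + round(offset[1] / d_x)
        inside = 0 <= r < len(depth) and 0 <= c < len(depth[0])
        return depth[r][c] if inside else far

    return probe(u) - probe(v)


def make_scene(size, body_depth, half_width, background=8.0):
    """Synthetic depth map: a square 'body' centred on a flat background."""
    centre = size // 2
    return [
        [
            body_depth
            if abs(r - centre) <= half_width and abs(c - centre) <= half_width
            else background
            for c in range(size)
        ]
        for r in range(size)
    ]


# The same body seen at 2 m, and at 4 m where it appears half the size.
near_scene = make_scene(40, body_depth=2.0, half_width=10)
far_scene = make_scene(40, body_depth=4.0, half_width=5)

u, v = (0, 32), (0, 8)  # hypothetical offsets, in pixels times metres
f_near = depth_feature(near_scene, (20, 20), u, v)
f_far = depth_feature(far_scene, (20, 20), u, v)
print(f_near, f_far)
```

In both scenes the u probe lands on the background and the v probe lands on the body, even though the body is half the size in pixels in the second scene. With unscaled offsets of 16 and 4 pixels the u probe would still land on the background in the second scene, but it would sit roughly three body half-widths from the centre instead of about one and a half, so the feature would no longer sample the same relative position on the body.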