Multi-core processors

Written by Administrator

Friday, 21 May 2010


A speed barrier

In fact, the early Pentium 4 design codenamed “Northwood” is really where things started to go wrong. Intel attempted to correct some of its problems with the very latest Pentium 4 design, codenamed “Prescott”. Prescott was supposed to scale to clock speeds of up to 10GHz, but in practice the Pentium 4's clock speed only increased from 3.06GHz to 3.8GHz. We seem to have hit a speed barrier. Other manufacturers ran into the same problem when trying to increase clock speeds, so the problem was general. Why had things suddenly hit a brick wall?

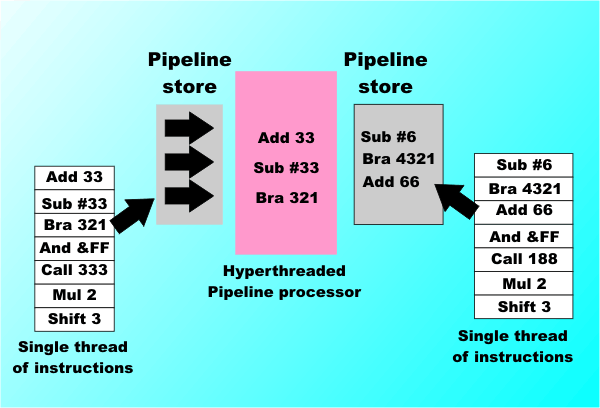

There are many reasons, but the main one seems to be power dissipation. Put simply, the Pentium 4 runs hot, and trying to increase the clock speed makes it run even hotter. Moore’s Law states that, on average, the number of transistors on a chip doubles every two years. This has been made possible by reducing the size of each transistor, but the smaller size increases the power wasted as heat, the so-called leakage power. Without special design techniques, such as switching off circuits that aren’t in use, the Pentium 4 Prescott design, which uses 90nm technology to implement 125 million transistors, would generate 250 watts of heat and literally burn up as soon as it was switched on! Because of this very fundamental heat problem, Intel has abandoned all talk of 10GHz processors. AMD has had similar problems, and its range of processors has stalled at a clock speed of around 3GHz. Everyone seems to be abandoning the idea that raising the clock speed is the way to a better processor; instead, the great hope for the future is “parallelizing” existing designs. A pipeline parallelizes the processing of a single thread of instructions; now we are moving on to “thread parallelism”.

Hyperthreading

Pipelines have their problems, but the solution has always been simply to make the pipeline more sophisticated. A clever pipeline is a fast pipeline, and techniques such as out-of-order execution (introduced in the Pentium Pro), instruction look-ahead and the improved branch prediction of the Pentium 4 are just some of the ideas that have been devised to make the modern processor go faster. The pipeline idea has been so successful that it has produced processors so fast that keeping the pipeline busy is now the real problem. As a result, Intel invented the seemingly crazy idea of “hyperthreading”, first implemented in the Xeon family of processors but now standard.
This can be seen as the first step on the road to dual-core processors, so it’s worth looking at a little more closely. A modern pipelined processor has trouble finding enough to do: it spends time waiting for data to be fetched or for the pipeline to fill up with instructions. One way of putting this unused capacity to work is to get the processor to run more than one “thread”. A thread is a sequence of instructions that can run independently of other threads. Operating systems like Windows support multiple threads and schedule them so that each one gets its turn running on the processor. Individual programs can also be composed of multiple threads, for example one reading data, one processing it and another providing feedback to the user. Intel’s hyperthreading makes a single physical processor look like two logical processors. The instructions for two threads are loaded, and the pipeline can be switched from one to the other to keep the processor busy. In practice, performance gains of 25% are achievable using a hyperthreaded Pentium 4. Of course, the speed of a single thread isn’t improved, so an application has to be multi-threaded to show any difference. Windows XP, Windows Server 2003 and later versions take advantage of hyperthreading if it’s available.
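A program split into a reading thread, a processing thread and a feedback thread can be sketched with Python's standard `threading` and `queue` modules. This is a minimal illustration, not code from any real application. The function names `reader`, `processor`, `feedback` and `run_pipeline` are hypothetical, as are the list standing in for an input file and the squaring step standing in for "processing". `os.cpu_count()` reports the number of logical CPUs, which is how a hyperthreaded core shows up to software. Bear in mind that in CPython the global interpreter lock stops threads from executing Python bytecode in parallel. The sketch therefore shows how work is divided into threads, not a hyperthreading speed-up.

```python
import os
import queue
import threading

SENTINEL = None  # marks the end of the data stream


def reader(out_q, items):
    """Thread 1: 'reads' data (a hypothetical list standing in for a file)."""
    for item in items:
        out_q.put(item)
    out_q.put(SENTINEL)


def processor(in_q, out_q):
    """Thread 2: processes each item as soon as it arrives."""
    while (item := in_q.get()) is not SENTINEL:
        out_q.put(item * item)  # placeholder for real work
    out_q.put(SENTINEL)


def feedback(in_q, results):
    """Thread 3: collects results and reports progress to the user."""
    while (value := in_q.get()) is not SENTINEL:
        results.append(value)
        print(f"processed {len(results)} item(s)")


def run_pipeline(items):
    raw, done = queue.Queue(), queue.Queue()
    results = []
    threads = [
        threading.Thread(target=reader, args=(raw, items)),
        threading.Thread(target=processor, args=(raw, done)),
        threading.Thread(target=feedback, args=(done, results)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for all three threads to finish
    return results


if __name__ == "__main__":
    # Logical CPUs: with hyperthreading enabled, each physical core
    # typically counts as two.
    print("logical CPUs:", os.cpu_count())
    print(run_pipeline([1, 2, 3]))
```

Each queue has a single producer and a single consumer, so results arrive in the order the data was read.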

A hyperthreaded processor can switch its pipeline to run another thread, making it look as if it were two identical processors.
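The benefit of switching the pipeline to another thread can be shown with a toy cycle-count model. It is only a sketch under simplifying assumptions of our own, not a model of Intel's design. Each instruction takes one cycle to issue. A load (`"L"`) leaves its thread waiting a fixed `stall` cycles for data. The core simply switches to any other ready thread while the current one waits. Real Hyper-Threading can mix instructions from both threads in the same cycle, so the figures this produces depend entirely on the assumed stall pattern and are not predictions. The function names are hypothetical.

```python
def run_one_after_another(programs, stall):
    """Single-threaded core: run each program to completion in turn.

    Each instruction takes one cycle to issue; a load ("L") then leaves
    the pipeline idle for `stall` extra cycles while data arrives.
    """
    cycles = 0
    for program in programs:
        for instr in program:
            cycles += 1
            if instr == "L":
                cycles += stall
    return cycles


def run_switch_on_stall(programs, stall):
    """Toy multithreaded core: keep issuing from the current thread, but
    while it waits on a load, issue from another thread that is ready."""
    n = len(programs)
    pcs = [0] * n       # index of each thread's next instruction
    ready_at = [0] * n  # first cycle at which each thread may issue again
    current, cycle, finish = 0, 0, 0
    while any(pc < len(p) for pc, p in zip(pcs, programs)):
        # Prefer the current thread, then the others in round-robin order.
        order = [(current + k) % n for k in range(n)]
        chosen = next(
            (t for t in order
             if pcs[t] < len(programs[t]) and ready_at[t] <= cycle),
            None,
        )
        if chosen is not None:
            instr = programs[chosen][pcs[chosen]]
            pcs[chosen] += 1
            ready_at[chosen] = cycle + 1 + (stall if instr == "L" else 0)
            finish = max(finish, ready_at[chosen])
            current = chosen
        cycle += 1  # a cycle passes whether or not anything was issued
    return finish


if __name__ == "__main__":
    threads = ["ALA", "ALA"]  # A = ordinary instruction, L = load
    print(run_one_after_another(threads, stall=3))  # 12 cycles
    print(run_switch_on_stall(threads, stall=3))    # 8 cycles
```

With only one thread, both functions give the same count (6 cycles for `"ALA"`). This matches the point made above: switching does not speed up a single thread. It only fills cycles that would otherwise be wasted.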


Last Updated (Thursday, 20 May 2010)