Cache Memory And The Caching Principle

Written by Harry Fairhead

Thursday, 23 September 2021


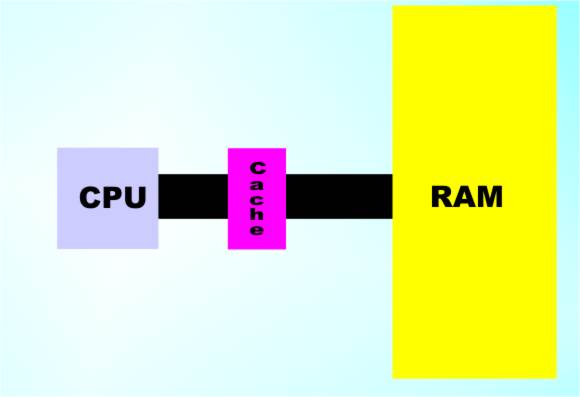

The Cache Principle

The cache system works so well that every modern computer uses it. In fact, cache memory is so standard that it is built into the processor chips we use. Originally cache memory was implemented on the motherboard, but as processor design developed the cache was integrated into the processor. For a while a system of two-level caching was used, with an L1 cache in the chip and an L2 cache on the motherboard. Yes, you can make use of the caching principle more than once to speed things up. However, the on-chip cache can interface with the processor more closely and it can be big and fast. Today caches implemented on the motherboard have vanished.

There is still the idea of a hierarchy of memory, however. The slowest but biggest memory is usually the disk drive, then RAM and then cache RAM. A cache can be useful between each level. So today you will find Flash memory being used as a cache to speed up disk drives.

The one remaining question is how big should a cache be? The answer is precise: it should be just bigger than the average working set. Of course you can’t easily know that number, irritating isn’t it! What you can say is that adding cache follows a law of diminishing returns, so a cache big enough to hold the working set is good, but one much bigger than that is just a waste of money. The only way you can find out is to examine the ratio of cache hits to misses.

You should also be able to see that the cache idea is a general one. The same trick can be used to speed up disk access or any access. In software you can build a cache to store data or results that you might need again soon so that you don't have to recompute or reacquire them. For example, caching can speed up web page delivery by keeping pages that have just been requested in memory so that they don't have to be reconstructed. As long as the working set idea applies, i.e. that data you have just read is most likely to be the data you want to read next, then the cache principle works.
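To make the software version of the idea concrete, here is a minimal sketch in Python of a cache that counts its own hits and misses. Nothing in it comes from a real system: the `LRUCache` class, the `slow_square` stand-in for an expensive computation, and the `run_trace` helper are all hypothetical names invented for illustration, and the least-recently-used eviction rule is just one common choice of what to throw away when the cache is full. The access pattern is deliberately artificial, a fixed working set of keys read over and over in the same order, so that the effect of cache size on the hit ratio is easy to see.

```python
from collections import OrderedDict


class LRUCache:
    """Illustrative least-recently-used cache that counts hits and misses."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.store = OrderedDict()  # keys ordered from oldest to newest use
        self.hits = 0
        self.misses = 0

    def get(self, key, compute):
        """Return the cached value for key, calling compute(key) on a miss."""
        if key in self.store:
            self.hits += 1
            self.store.move_to_end(key)  # mark as most recently used
            return self.store[key]
        self.misses += 1
        value = compute(key)
        self.store[key] = value
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)  # evict the least recently used
        return value

    def hit_ratio(self):
        """Fraction of all lookups so far that were served from the cache."""
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def slow_square(n):
    """Stand-in for something expensive to recompute or reacquire."""
    return n * n


def run_trace(capacity, working_set=8, passes=50):
    """Read the same working_set keys in order, passes times over.

    Returns the hit ratio observed for a cache of the given capacity.
    """
    cache = LRUCache(capacity)
    for _ in range(passes):
        for key in range(working_set):
            cache.get(key, slow_square)
    return cache.hit_ratio()
```

With the default working set of 8 keys, `run_trace(4)` gives a hit ratio of 0.0, while `run_trace(8)` and `run_trace(64)` both give 0.98. A cache smaller than the working set never manages to keep an item long enough to reuse it, one that just holds the working set misses only on the first pass, and making it eight times bigger buys nothing more. Repeated in-order scanning is a worst case for this eviction rule, so real workloads usually show a smoother curve, but the only way to find where it flattens is still to measure hits against misses.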
If you decide to take a look at a real cache implementation then be prepared for a lot more complexity than described here. Real caches have to take into account the entire structure of the addressing mechanism and pipeline used by a machine.

Related Articles

Flash Memory - Changing Storage

Processor Design - RISC,CISC & ROPS


Last Updated ( Thursday, 23 September 2021 )