| Programmer's Guide To Theory - Practical Grammar |

| Written by Mike James | |||||

| Wednesday, 24 January 2024 | |||||

Page 3 of 4

Generating ExamplesYou can think of the BNF rules that define a language as a way of generating examples of the language or of testing to see if a statement is a valid example of the language. Generating examples is very easy. It’s rather like playing dominoes with production rules. You pick a rule and select one of the parts of the rule separated by “OR” and then try and find a rule that starts with any non-terminal on the right. You replace the non-terminal items until you have a symbol string with no non-terminal items – this is a legal example of the language. It has to be because you generated it using nothing but the rules that define the language. Working the other way, i.e. from an example of the language to a set of rules that produce it, turns out to be more difficult. Finding the production rules is called “parsing” the expression and which parsing algorithms work well can depend on the nature of the grammar. That is, particularly simple grammars can be parsed using particularly simple and efficient methods. At this point the typical course in computer science spends a great deal of time explaining the different parsing algorithms available and a great many students simply cannot see the wood for the trees – syntax trees that is! While the study of parsing methods is important, you only understand why you are doing it when you discover what parsing can do for you. Obviously if you can construct a legal parse of an expression then the expression is grammatical and this is worth knowing. For example, any reasonable grammar of arithmetic expressions would soon throw out /3+*5 as nonsense because there is no set of rules that create it. This is valuable but there is more. If you have parsed an expression the way that it is built up shows you what it means. This is a function of grammar that is usually ignored because grammar is supposed to be only to do with syntax, which is supposed to have nothing to do with meaning or semantics. In English, for example, you can have syntax without meaning; “The green dream sleeps furiously.” This is a perfectly good English sentence from the point of view of grammar, but, ignoring poetry for the moment, it doesn’t have any meaning. It is pure syntax without any semantics. This disassociation of syntax and semantics is often confused with the idea that syntax conveys no meaning at all and this is wrong. For example, even in our nonsense sentence you can identify that something “the dream” has the property “green” and it is doing something, i.e. “sleeping”, and that it is doing it in a certain way, i.e. “furiously”. This is a lot of information that is brought to you courtesy of the syntax and while the whole thing may not make sense the syntax is still doing its best to help you get at the meaning.

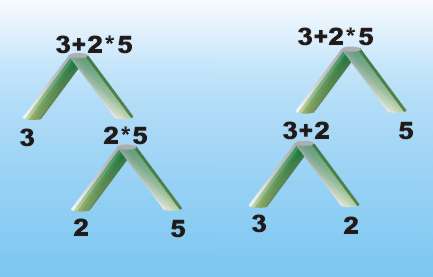

Syntax is semanticsSo in natural languages syntax carries with it some general indications of meaning. The same is true of the grammar of a programming language. Consider a simple arithmetic expression 3+2*5 As long as you know the rules of arithmetic you will realize that you have to do the multiplication first. Arithmetic notation is a remarkably sophisticated mini-language which is why it takes some time to learn in school and why beginners make mistakes. Implementing this arithmetic expression as a program is difficult because you can't simply read it from left to right and implement each operation as you meet it. That is 3+2*5 isn't (3+2)*5 but 3+(2*5) the multiplication has a higher priority. A simple grammar for this type of expression, leaving out the obvious detail, might be <expression> -> <expression> + <expression> | This parses the expression perfectly but it doesn’t help with the meaning of the expression because there are two possible ways that the grammar fits – <expression> -> <expression 1>+<expression 2> or <expression> -> <expression 1>*<expression 2> These are both perfectly valid parses of the expression as far as the grammar is concerned but only the first parsing groups the expressions together in a way that is meaningful. We know that the 2 and 5 should group as a unit of meaning and not the 3 and the 2 but this grammar gives rise to two possible syntax trees –

We need to use a grammar that reflects the meaning of the expression. For example, the grammar: <expression> -> <multi-expression> + <multi–expression> also allows 3+2*5 to be legal but it only fits in one way: <expression> -> <multi-expression> + <multi-expression> <multi-expression> -> <value> * <value> This means that this particular grammar only gives the syntax tree that corresponds to the correct grouping of the arithmetic operators and their operands. If contains the information that multiplication groups together more strongly than addition. In this case we have a grammar that reflects the semantics or meaning of the language and this is vital if the grammar is going to help with the translation. There may be many grammars that generate a language and any one of these is fine for generating an expression or proving an expression legal but when it comes to parsing we need a grammar that means something. Perhaps is isn't true to say syntax is semantics but if it is going to help you really have to pick the syntax that reflects the semantics. |

|||||

| Last Updated ( Wednesday, 24 January 2024 ) |