| Introduction to Boolean Logic |

| Written by Mike James | |||||||||||||||

| Tuesday, 11 March 2025 | |||||||||||||||

|

Page 5 of 7

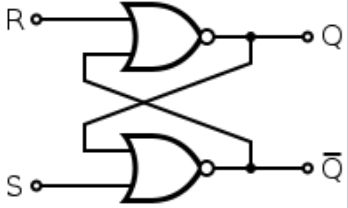

Sequential LogicComputers also include sequential logic, which includes an element of time. For example, a flip-flop, again perfectly good jargon, is a circuit that changes state in the same way as a pendulum “flips” from side to side. The odd thing is that you can make a flip-flop from two NOT gates and most sequential logic can be understood in terms of combinations of logic gates. However, this is an incomplete explanation and requires something beyond Boolean logic. For example, Boolean logic cannot cope with the statement: A = NOT A If A is true then NOT A is false and so A must be false, but this means that NOT A is true, which means that A must be true, which means, and so on…So from the point of view of Boolean logic this is nonsense. Now imagine that the on one side of a blackboard you write “A is true” and on the other side you write “NOT A is true”. Now when you read “A is true” you are happy with this. When you turn the blackboard over you see “Not A is true” and so conclude A is false. As you keep flipping the board over the value of A flips from true to false and back again. This is, of course, just the paradox of self-reference as introduced and used in earlier chapters. The big difference is that now it isn't a paradox as you can include time in the system. If you think that each time you turn the blackboard over we create a new state then there is no paradox - when the board is one way we have true, the other and we have false. This turning over of the blackboard is the extra part of the theory needed to deal with sequential logic - it shows how time changes things. So computer hardware is not just one large statement in Boolean logic, which just happens to be true or false. Its state changes with every tick of its system clock. Even if you eliminate the system clock and only look at combinatorial logic, time still enters the picture. When the inputs to a combinatorial circuit change there is a period when the state of the system changes from its initial to its final state - a transient state change. For example, in an n-bit full adder we have to allow time for the carry generated at the low order bits to propagate to the higher order bits. Even a seemingly static logic circuit is in fact dynamic. As a small example consider the SR (Set/Reset) latch made from two NOR gates.

If both S and R are at zero then the output doesn't change - it is latched. If you set R to one then the output Q goes low and it is reset. If you set S to one then the output goes high and it is set. If you put a one on S and R then we appear to have a paradox because we can't set and reset the latch at the same time. In fact what happens is that the latch either goes to one or zero depending on how fast signals propagate though it and the exact timing of the inputs. The SR latch is useful because it "remembers" its last state - was it set or reset. It is a very simple memory component. Even simple computers are more complicated than simple static Boolean logic would suggest. In the real world you cannot ignore time and the time development of the system which Boolean logic ignores. |

|||||||||||||||

| Last Updated ( Tuesday, 11 March 2025 ) |