Toyota Code Could Be Lethal

Written by Harry Fairhead

Wednesday, 26 February 2014

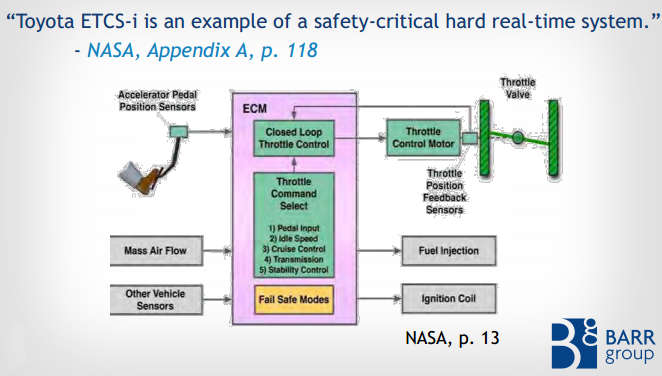

You may have heard about the Toyota unintended acceleration case, but you might have missed the analysis of the software that connected the gas pedal to the throttle. Put simply, it's frightening enough to make you buy a pre-computer car.

Back in 2009 there were reports that Toyota Camrys and Corollas were suddenly accelerating to 90mph without the driver moving the gas pedal, and that braking didn't help. The incidents included a number of fatalities, and one driver was sent to prison for killing three people, despite claiming that his car sped up without reason.

The first thing to point out is that if you grew up in the era when an accelerator pedal was connected to the throttle valve by a wire then you need to think again. Modern cars mostly use "drive-by-wire" technology and the link between the accelerator and the throttle is via electronics controlled by software.

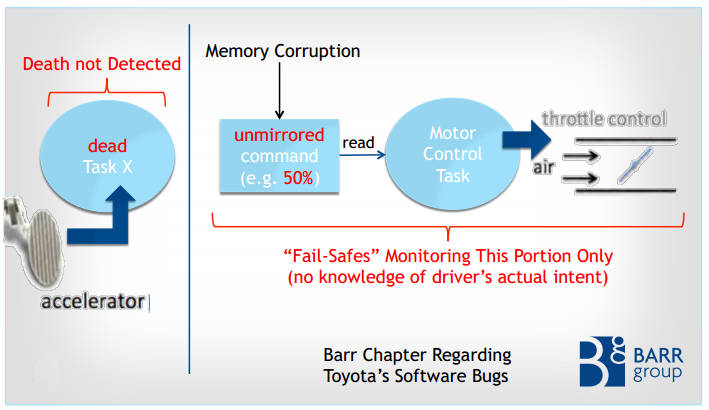

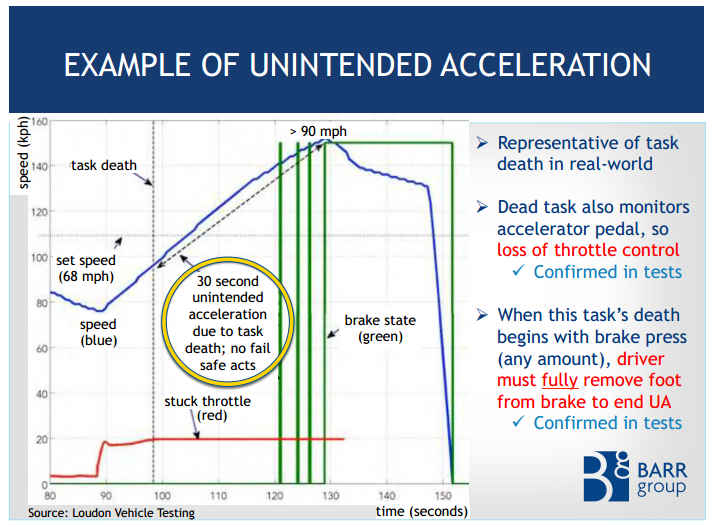

Toyota recalled the cars and fixed a problem with the floor mat that it claimed could foul the gas pedal. Later the car's software was updated as part of another recall. A first investigation by NASA found no problems with the software, but it couldn't rule out bugs that might have caused the problem. Toyota took this as an exoneration and the National Highway Traffic Safety Administration closed the matter.

However, a driver injured by a UA (unintended acceleration) sued Toyota in Oklahoma and won $1.5 million after embedded software experts from the Barr Group presented evidence that the software was very badly designed. This was back in October of 2013, but the matter has just been reported in the technical press as "Stack overflow causes unexpected acceleration". However, the truth is even worse than this simple cause might suggest.

The slides used in the court presentation tell a frightening story - but one that most programmers might expect. The examination of the software that controlled the throttle found that it was of very poor quality. There were more than 11,000 global variables in use; most of the functions were very long and complex; and the code's cyclomatic complexity was much greater than 50. In fact the throttle angle function scored over 100, which puts it in the unmaintainable class.

No single flaw was found that could be conclusively blamed for the UA - but there were many that could have caused the problem. In particular, the way that the stack was used could have resulted in an overflow that wiped out essential OS data. Not only was stack usage up to 94% in normal operation, the code was recursive! Recursive code is generally avoided in embedded applications because its worst-case stack depth is hard to bound, which makes it harder to demonstrate that it will work reliably. MISRA - the Motor Industry Software Reliability Association - has a rule that explicitly forbids recursion. Toyota claimed it followed MISRA standards but more than 80,000 violations were found.
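To make a figure like "94% stack usage" concrete, here is a minimal sketch of one common way to measure it, often called stack painting: fill the stack region with a known pattern at start-up, then count how much of the pattern has been overwritten. This is not Toyota's code or the method used in the court analysis. The function names, the fill value and the assumption of a descending stack held in a plain array are all illustrative.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define STACK_FILL 0xA5A5A5A5u   /* paint pattern; the value is an arbitrary choice */

/* Fill the whole stack region with the pattern before the task starts. */
void stack_paint(uint32_t *stack, size_t words)
{
    assert(stack != NULL);
    for (size_t i = 0; i < words; i++) {
        stack[i] = STACK_FILL;
    }
}

/* Assumes a descending stack: index words-1 is used first, index 0 last.
 * Returns how many words have been overwritten at some point (peak usage).
 * This is a heuristic: a stored value equal to STACK_FILL at the deepest
 * point would make the result slightly too low. */
size_t stack_high_water(const uint32_t *stack, size_t words)
{
    assert(stack != NULL);
    size_t untouched = 0;
    while (untouched < words && stack[untouched] == STACK_FILL) {
        untouched++;
    }
    return words - untouched;
}

/* Nonzero when peak usage has reached limit_percent of the region. */
int stack_over_limit(const uint32_t *stack, size_t words, unsigned limit_percent)
{
    return stack_high_water(stack, words) * 100u >= words * (size_t)limit_percent;
}
```

A periodic check of `stack_over_limit` gives a warning before the stack runs into neighbouring memory, but only if recursion is absent or bounded; with unbounded recursion a single deep call chain can jump from a safe level to an overflow between two checks.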
Equally worrying was the fact that nothing had been done to deal with a stack overflow. The memory area that the stack grew into was not protected and there was no stack monitoring.

Even more shocking is the way the operating system handled tasks and the way they were organized. It turned out that tasks could be killed by the OS for a range of reasons, and in most cases the death of the task would not be noticed by the rest of the system. The usual way of dealing with this sort of problem is to use a watchdog timer. Vital tasks reset the timer as part of their normal running. If one of them fails, the timer isn't reset, so it expires and restarts the entire system.
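The watchdog idea can be sketched in a few lines. In this hypothetical arrangement every vital task sets a flag each period, and a supervisor only resets ("kicks") the watchdog if all of the flags are set, so the death of any one task leads to a restart. The task names and the `supervisor_t` type are invented for illustration, and the `kicks` counter stands in for a write to a real hardware watchdog register.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical task list; a real system would have many more entries. */
enum { TASK_PEDAL = 0, TASK_THROTTLE, TASK_DIAG, TASK_COUNT };

#define ALL_TASKS ((uint32_t)((1u << TASK_COUNT) - 1u))

typedef struct {
    uint32_t checked_in;  /* bit i is set once task i has reported this period */
    unsigned kicks;       /* stands in for writes to a hardware watchdog register */
} supervisor_t;

void supervisor_init(supervisor_t *s)
{
    assert(s != NULL);
    s->checked_in = 0;
    s->kicks = 0;
}

/* Called by each vital task from its normal loop.
 * On a real multitasking system this update would need to be atomic. */
void task_check_in(supervisor_t *s, int task_id)
{
    assert(s != NULL && task_id >= 0 && task_id < TASK_COUNT);
    s->checked_in |= (1u << task_id);
}

/* Called once per period. Kicks the watchdog only if every task reported.
 * Returns 1 if kicked, 0 if withheld (the hardware timer would then expire
 * and reset the system). */
int supervisor_tick(supervisor_t *s)
{
    assert(s != NULL);
    int all_alive = (s->checked_in == ALL_TASKS);
    s->checked_in = 0;            /* every task must report again next period */
    if (all_alive) {
        s->kicks++;
    }
    return all_alive;
}
```

The design point is that the watchdog is kicked from one place and only on positive evidence from every task; kicking it from a timer interrupt or from a single task says nothing about whether the others are still running.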

You might think that a restart is as dangerous as a UA, but a reset occurs in a few milliseconds and the most the driver would notice would be a brief loss of rpm. Unfortunately, the watchdog did not monitor the majority of OS tasks and hence the system could carry on running. If, for example, the accelerator task was killed then the throttle control task would carry on running and either stick at the last setting or increase due to corrupt shared variables.

To compound the problem, a second processor monitoring the system could have reset the system if it noted that the throttle was open when the brake was being applied. However, if the task died while the brake was on then the system did not respond to the unusual condition until the brake was completely released and reapplied. So there you are in a car that has suddenly acquired a mind of its own and is increasing in speed. Is your first reaction to take your foot off the brake?
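The difference between reacting to a brake press (an edge) and reacting to the brake being held (a level) is easy to show in code. The following is a sketch of a level-based plausibility check, not a description of what the second processor in the Toyota system actually did: it flags a fault whenever the brake is down and the throttle is open for several consecutive samples, whatever order the two events happened in. The thresholds and names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define THROTTLE_IDLE_MAX_PCT 10u  /* hypothetical: above this counts as "open" */
#define FAULT_SAMPLES 3u           /* hypothetical debounce length */

typedef struct {
    unsigned conflict_count;  /* consecutive samples with brake and throttle both active */
} brake_monitor_t;

void brake_monitor_init(brake_monitor_t *m)
{
    assert(m != NULL);
    m->conflict_count = 0;
}

/* Call once per sample with the current brake and throttle state.
 * Returns true while a fail-safe action (for example a reset or a forced
 * idle throttle) should be requested. */
bool brake_monitor_sample(brake_monitor_t *m, bool brake_pressed, unsigned throttle_pct)
{
    assert(m != NULL);
    if (brake_pressed && throttle_pct > THROTTLE_IDLE_MAX_PCT) {
        if (m->conflict_count < FAULT_SAMPLES) {
            m->conflict_count++;   /* saturate so the counter cannot wrap */
        }
    } else {
        m->conflict_count = 0;     /* conflict cleared, start counting again */
    }
    return m->conflict_count >= FAULT_SAMPLES;
}
```

Because the check looks at the current state on every sample, a throttle that opens while the driver's foot is already on the brake is caught after a few samples, with no need to release and reapply the pedal.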

Lots of other more minor, but just as vital, points were made about the safety of the software, but perhaps the final one was the use of a single task to implement the fail-safe modes and collect the diagnostic codes. If this task was killed there would be no record of what had happened.

The real failure is the lack of understanding of how to build safety-critical software. I am very surprised that in such a system a multitasking operating system is in use at all. In most cases critical software should be built without the use of asynchronous programming, events or recursion. The control flow should be deterministic and there should be watchdog timers to ensure that it is working as required at all times. If it isn't working then a reboot is nearly always a suitable solution.

This story is enough to make you think that a good old wire cable has a lot going for it.

More Information

Are We Shooting Ourselves in the Foot with Stack Overflow?

Related Articles

Robot cars - provably uncrashable?


Last Updated (Wednesday, 26 February 2014)