Fire Phone - The First AI Phone

Written by Mike James

Thursday, 19 June 2014


Amazon has, as expected, announced a new Android phone, but it isn't the cheap one we predicted. Instead it has AI and software smarts all over it and, guess what - there are SDKs for that.

Amazon's new phone was a very poorly kept secret, and it was thought that Amazon might try to shake up the market by giving it away free - after all, it is another portal into the Amazon marketing empire. At $199 on contract it isn't the big shock that the rest of the Android makers were fearing. It is, however, a shock in another way. It is stuffed with software gadgets that make use of some very sophisticated techniques. It is so smart that you might want to call it the first AI phone - although not all of the techniques used are pure AI.

So what are these new technologies? The most visible to the user is probably Dynamic Perspective. This uses four specialized cameras, four infrared LEDs, a dedicated custom processor, real-time computer vision algorithms, and a custom power-efficient graphics rendering engine to track head movements in real time. All of this is available in the SDK, including head tracking and motion gestures.

What can you do with it? A good question, and it isn't clear that Amazon knows the answer. The demos reveal that you can create something like virtual reality with a 3D effect, and that you can use the tilt of the phone to trigger actions. The 3D effect is created by matching the perspective of the image displayed on the phone to your viewing angle, so that as you move around the phone you see different sides of the object. Beyond this, it is up to you to work out how to use all of this in games and general apps. You can see Dynamic Perspective in action in the following video:

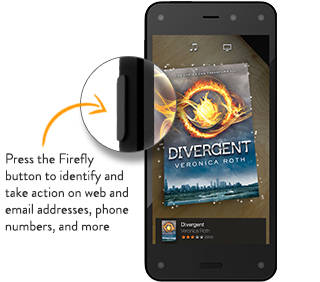
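To give a feel for what matching the perspective to the viewing angle involves, here is a minimal sketch of the standard off-axis (head-coupled) projection calculation. It is not Amazon's implementation and does not use the Fire Phone SDK; the function name, the `Frustum` type and the coordinate convention (screen centered at the origin, head position measured in the same units as the screen size) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frustum:
    """Bounds of an asymmetric view frustum, measured on the near plane."""
    left: float
    right: float
    bottom: float
    top: float
    near: float


def off_axis_frustum(head_x, head_y, head_z, screen_w, screen_h, near=0.01):
    """Hypothetical helper: frustum for a viewer at (head_x, head_y, head_z).

    Assumes the screen lies in the z = 0 plane, centered at the origin,
    and that head_z > 0 is the distance from the viewer to the screen.
    """
    if head_z <= 0:
        raise ValueError("head_z must be positive (viewer in front of screen)")
    # Project the screen edges, as seen from the head, onto the near plane.
    scale = near / head_z
    return Frustum(
        left=(-screen_w / 2 - head_x) * scale,
        right=(screen_w / 2 - head_x) * scale,
        bottom=(-screen_h / 2 - head_y) * scale,
        top=(screen_h / 2 - head_y) * scale,
        near=near,
    )


# Head centered, then moved 2 cm to the right of a 6 cm x 10 cm screen.
centered = off_axis_frustum(0.0, 0.0, 0.3, 0.06, 0.10, near=0.1)
shifted = off_axis_frustum(0.02, 0.0, 0.3, 0.06, 0.10, near=0.1)
```

With the head centered the frustum is symmetric; as the head moves to the right the frustum skews the other way, which is what lets a renderer reveal a different side of the object. A real app would feed the resulting bounds into its graphics library's projection matrix.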

The second big new technology is Firefly. This is Amazon's way of getting more customers. It is essentially an app that can identify 100 million objects. You show it something and it will let you buy it from Amazon. It can recognize a phone number, a book, a QR code and so on. It will even listen to the ambient noise around you and identify any music or TV show, including the episode. The phone has enough processing power to perform the pre-processing and send only the parts that matter to an Amazon server, which does the actual identification. The Firefly SDK lets you use this to recognize real-world objects. You can build Firefly plugins that are notified of what the algorithm has detected. What you do after that is up to you. One example that Amazon gives is a plugin that looks up the next concert for a musician who has been identified.
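The plugin idea is essentially a notification pattern: something is recognized, and any code registered for that kind of thing gets called. The sketch below shows that pattern in plain Python. It is purely illustrative and is not the Firefly SDK; `Detection`, `PluginRegistry`, `next_concert_lookup` and the `"music"` kind are hypothetical names invented for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """Hypothetical record of something the recognizer identified."""
    kind: str   # e.g. "music", "book", "phone_number" (assumed labels)
    label: str  # e.g. the artist, title or number that was recognized


class PluginRegistry:
    """Hypothetical registry that routes detections to interested plugins."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def register(self, kind, handler):
        # A plugin declares which kind of detection it wants to hear about.
        self._handlers[kind].append(handler)

    def notify(self, detection):
        # Call every plugin registered for this kind; collect their results.
        return [h(detection) for h in self._handlers.get(detection.kind, [])]


def next_concert_lookup(detection):
    # Stand-in for Amazon's example plugin; a real one would query a service.
    return f"Look up the next concert for {detection.label}"


registry = PluginRegistry()
registry.register("music", next_concert_lookup)
results = registry.notify(Detection(kind="music", label="Some Artist"))
ignored = registry.notify(Detection(kind="book", label="Some Title"))
```

Here `results` holds one suggested action for the identified musician, while `ignored` is empty because no plugin registered an interest in books.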

There are lots of other attractive features bundled into the Fire Phone, including a good 13MP camera with HDR, panorama mode and optical image stabilization, unlimited cloud photo storage, and the Mayday live help button that connects you to Amazon. It's impressive, but what really makes it different are Dynamic Perspective and Firefly, both of which put advanced computing in a phone. Who would have thought that advanced graphics and AI would make their way in the world via a phone designed to sell you things? Come to think of it, it does seem all too likely.

The Fire Phone does seem to offer some new features that you can take advantage of immediately, but how big the market for your unique app will be is something we will have to wait and see. Could the same technology be ported to a tablet? I don't see any good reason why not.


Last Updated ( Thursday, 19 June 2014 )