Apache Releases Spark 1.6

Written by Kay Ewbank

Friday, 08 January 2016

A new, faster version of Apache Spark, the open source data processing engine, has been released. Apache Spark 1.6 adds a new Dataset API and improves its data science features.

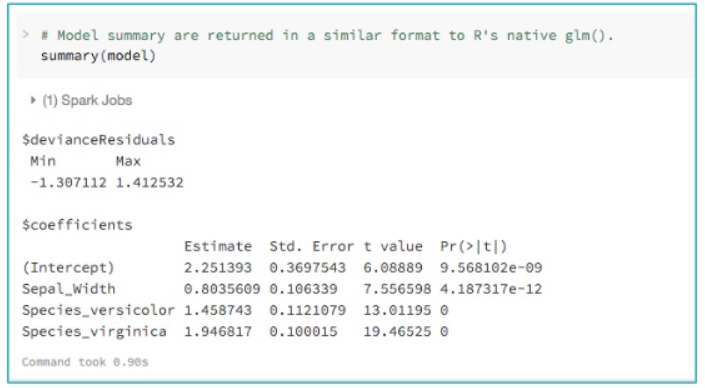

The performance improvements start with the scanning of Parquet data. Parquet is one of the most commonly used data formats with Spark. In the past, Spark’s Parquet reader used parquet-mr to read and decode Parquet files. The developers profiled many Spark applications and found that many cycles tend to be spent in “record assembly”, a process that reconstructs records from Parquet columns. Spark 1.6 introduces a new Parquet reader that bypasses parquet-mr’s record assembly and uses a more optimized code path for flat schemas. According to a blog post about the new version: "In our benchmarks, this new reader increases the scan throughput for 5 columns from 2.9 million rows per second to 4.5 million rows per second, an almost 50% improvement!"

Memory management is another area that has been improved. Before Spark 1.6, Spark statically divided the available memory into two regions: execution memory and cache memory. Execution memory was used for sorting, hashing, and shuffling, while cache memory was used to cache hot data. Spark 1.6 introduces a new memory manager that automatically tunes the sizes of the different memory regions, growing or shrinking each one according to the needs of the executing application. The developers say that for many applications this will mean a significant increase in the memory available for operators such as joins and aggregations, without any user tuning.

Streaming state management also gets a new API designed to provide better performance, though you might need to change the way your applications are coded to use it. The developers say streaming state management is ten times faster in the new version. The reworked state management API in Spark Streaming introduces a new mapWithState API that scales linearly with the number of updates rather than with the total number of records. It does this through an efficient implementation that tracks “deltas” rather than always requiring full scans over the data, and the developers say this has resulted in order-of-magnitude performance improvements in many workloads.

The DataFrame API has also been improved with the addition of a typed extension called Datasets. DataFrames were introduced in 2015 to add high-level functions that give Spark a clearer understanding of the structure of the data as well as of the computation being performed. This information is used by the Catalyst optimizer and the Tungsten execution engine to automatically speed up real-world Big Data analyses. However, Spark users have reported concerns over the lack of support for compile-time type safety, hence the introduction of the Datasets typed extension. It extends the DataFrame API to support static typing and user functions that run directly on existing Scala or Java types.

Another area that has been improved is support for data science, with the addition of machine learning pipeline persistence. The developers say that many machine learning applications use Spark’s ML pipeline feature to construct learning pipelines. Until now, however, if you wanted your application to store a pipeline externally, you had to implement custom persistence code. In Spark 1.6, the pipeline API provides a way to save pipelines and reload them in their previous state, so that models built earlier can be applied to new data later. The new version has also added a number of machine learning algorithms, including:
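To make the Parquet change above more concrete, here is a toy Python model of the two read paths. It is not Spark's reader and not parquet-mr; the in-memory `columns` dictionary and both function names are hypothetical. The first function assembles a full record for every row before projecting the wanted fields, which is the step the developers found expensive. The second reads the wanted column vectors directly, which is possible when the schema is flat (no nested fields to reassemble).

```python
# Toy columnar "file": a flat schema stored column by column (hypothetical data).
columns = {
    "id": [1, 2, 3, 4],
    "price": [9.5, 3.0, 7.25, 1.25],
    "qty": [2, 5, 1, 4],
}


def scan_with_record_assembly(cols, wanted):
    """Rebuild a full record (dict) for every row, then project the wanted fields."""
    names = list(cols)
    n_rows = len(cols[names[0]])
    out = []
    for i in range(n_rows):
        record = {name: cols[name][i] for name in names}  # per-row record assembly
        out.append(tuple(record[w] for w in wanted))
    return out


def scan_columns_directly(cols, wanted):
    """Flat schema only: walk the wanted column vectors without building full records."""
    return list(zip(*(cols[w] for w in wanted)))


assembled = scan_with_record_assembly(columns, ["price", "qty"])
direct = scan_columns_directly(columns, ["price", "qty"])
assert assembled == direct  # same rows, less per-row work on the direct path
print(direct)  # [(9.5, 2), (3.0, 5), (7.25, 1), (1.25, 4)]
```

Both paths return the same rows; the difference is the per-row dictionary that the first path builds and immediately discards, which is the kind of overhead a flat-schema fast path can avoid.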
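The move from statically divided memory to automatically tuned regions can be pictured as a single shared pool with a soft boundary. The sketch below is a toy model under stated assumptions, not Spark's memory manager: the class name `ToySharedMemoryPool` and the `min_cache` floor are hypothetical. It assumes cached data may borrow any free memory, while execution may reclaim cached memory down to a protected minimum.

```python
class ToySharedMemoryPool:
    """Toy model: execution and cache share one pool instead of fixed halves.

    Assumption for illustration: cache may use any free memory, and execution
    may evict cached data, but never below a protected floor (min_cache).
    """

    def __init__(self, total, min_cache):
        self.total = total
        self.min_cache = min_cache
        self.execution = 0
        self.cache = 0

    def free(self):
        return self.total - self.execution - self.cache

    def acquire_cache(self, amount):
        """Cache only takes free memory; it never evicts execution memory."""
        granted = min(amount, self.free())
        self.cache += granted
        return granted

    def acquire_execution(self, amount):
        """Execution takes free memory first, then evicts cached data above min_cache."""
        shortfall = max(0, amount - self.free())
        evictable = max(0, self.cache - self.min_cache)
        self.cache -= min(shortfall, evictable)
        granted = min(amount, self.free())
        self.execution += granted
        return granted


pool = ToySharedMemoryPool(total=100, min_cache=30)
pool.acquire_cache(70)               # cache grows into memory nobody else is using
granted = pool.acquire_execution(60) # a join needs more; some cached data is evicted
print(granted, pool.execution, pool.cache)  # 60 60 40
```

With a fixed 50/50 split, the same join could have received at most 50 units. In the shared model it gets 60 because the cache region shrinks on demand, which mirrors the article's point about more memory becoming available for joins and aggregations without user tuning.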
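The difference between scaling with the total number of records and scaling with the number of updates can be shown with a toy running count per key. In Spark itself the older approach is `updateStateByKey` and the new one is `mapWithState` on a pair DStream, configured with a `StateSpec`; neither is used here. The two functions below are hypothetical stand-ins that also count how many keys each one has to visit per batch.

```python
from collections import Counter


def full_scan_update(state, batch):
    """Visit every key held in state on every batch: cost grows with total state size."""
    counts = Counter(batch)
    touched = 0
    new_state = {}
    for key in set(state) | set(counts):
        touched += 1
        new_state[key] = state.get(key, 0) + counts.get(key, 0)
    return new_state, touched


def delta_update(state, batch):
    """Visit only the keys that appear in this batch: cost grows with the number of updates."""
    counts = Counter(batch)
    new_state = dict(state)  # copied here only so the two versions stay comparable
    touched = 0
    for key, n in counts.items():
        touched += 1
        new_state[key] = new_state.get(key, 0) + n
    return new_state, touched


# Hypothetical workload: 10,000 tracked users, but only two of them are active in this batch.
initial = {f"user{i}": 1 for i in range(10_000)}
batch = ["user1", "user2", "user1"]

state_a, cost_a = full_scan_update(initial, batch)
state_b, cost_b = delta_update(initial, batch)
assert state_a == state_b
print(cost_a, cost_b)  # 10000 2
```

Both versions end in the same state, but the full scan visits all 10,000 keys while the delta version visits only the two keys that changed. A real implementation would update its state in place rather than copying it; the copy here just keeps the comparison simple.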
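Pipeline persistence means that fitted stages, including the parameters they learned, can be written out and rebuilt later to score new data. The sketch below shows that idea with JSON and two hypothetical stages (`ToyScaler`, `ToyThreshold`). It is not Spark's API, which lives in the `spark.ml` package, and the file layout is an assumption made only for illustration.

```python
import json
import os
import tempfile


class ToyScaler:
    """Hypothetical stage: subtracts the mean learned during fit."""

    def __init__(self, mean=None):
        self.mean = mean

    def fit(self, xs):
        self.mean = sum(xs) / len(xs)
        return self

    def transform(self, xs):
        return [x - self.mean for x in xs]

    def to_dict(self):
        return {"type": "ToyScaler", "mean": self.mean}


class ToyThreshold:
    """Hypothetical stage: labels values above a fixed cutoff as 1, else 0."""

    def __init__(self, cutoff=0.0):
        self.cutoff = cutoff

    def fit(self, xs):
        return self  # nothing to learn

    def transform(self, xs):
        return [1 if x > self.cutoff else 0 for x in xs]

    def to_dict(self):
        return {"type": "ToyThreshold", "cutoff": self.cutoff}


STAGE_TYPES = {"ToyScaler": ToyScaler, "ToyThreshold": ToyThreshold}


def fit_pipeline(stages, xs):
    """Fit each stage in order, feeding its output into the next stage."""
    for stage in stages:
        xs = stage.fit(xs).transform(xs)
    return stages


def apply_pipeline(stages, xs):
    for stage in stages:
        xs = stage.transform(xs)
    return xs


def save_pipeline(stages, path):
    with open(path, "w") as f:
        json.dump([s.to_dict() for s in stages], f)


def load_pipeline(path):
    with open(path) as f:
        specs = json.load(f)
    return [STAGE_TYPES[spec.pop("type")](**spec) for spec in specs]


fitted = fit_pipeline([ToyScaler(), ToyThreshold()], [1.0, 2.0, 3.0, 6.0])
path = os.path.join(tempfile.mkdtemp(), "pipeline.json")
save_pipeline(fitted, path)
reloaded = load_pipeline(path)  # e.g. in a later job
print(apply_pipeline(reloaded, [0.0, 5.0]))  # [0, 1]
```

The reloaded pipeline carries the learned mean (3.0) with it, so new data is transformed exactly as it would have been by the original fitted pipeline. Without built-in persistence, each application had to write this kind of save/load code itself.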

More details on the new version can be found in the Release Notes, along with working examples.


Last Updated (Friday, 08 January 2016)