New face animation algorithm

Written by David Conrad

Wednesday, 10 August 2011


While facial recognition is getting all the attention, a group at Microsoft Research have been looking at the face from a different point of view - animation. With 52 muscles controlling an object we are designed to recognize in minute detail, creating an animated face is a tough problem. Existing techniques either capture too little detail or animate the high resolution detail too poorly to be realistic. Now we have a method of creating very high resolution 3D meshes that are controlled by lower resolution motion capture to produce high fidelity facial expression animation.

The approach taken by the team led by Xin Tong (Microsoft Research Asia) and Jinxiang Chai (Texas A&M University) is simple in theory but tricky in practice, and it does succeed in getting high quality animation that includes the more subtle wrinkling and skin behaviour. Their methods are to be presented at the forthcoming SIGGRAPH 2011 and you can read the full paper at the link below. Essentially, the method uses motion capture to create a low resolution animated 3D framework that is then combined with a relatively small number of higher resolution 3D scans to create a high resolution animated mesh.

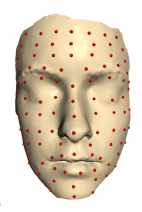

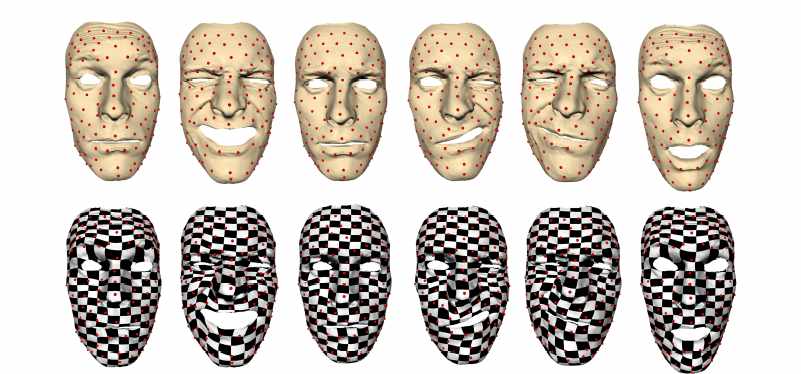

First, three actors were recorded using facial motion capture with 100 marker points. The actors went through a series of set facial expressions to determine how the face moves when transitioning between them. Next, a high resolution laser scanner captured a much finer 3D model of each face, and these scans were aligned with the corresponding lower resolution motion frames using a new registration algorithm. The high resolution 3D models captured fine details such as wrinkles, which were missing from the coarse 100 point motion capture meshes.

The registration works in two steps. First, the two meshes are matched up using only a rigid transformation. This places the high resolution mesh into the position indicated by the motion markers, but without allowing for relative movement between the markers due to the face being elastic - stretching and wrinkling during the movement. The next step segments the face and moves the regions independently to get a better match, so improving the position of the high resolution 3D frame as specified by the motion markers.
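To make the two registration steps more concrete, here is a minimal sketch in Python. It is not the registration algorithm from the paper: it works in 2D rather than 3D, it assumes each marker already has a known matching point on the scan, and it treats "moving the regions independently" as fitting a separate rigid transformation per region, which is only an assumption about what that step might look like. The function names (`rigid_fit_2d`, `apply_rigid_2d`, `rms_error`) and the toy marker positions are made up for the illustration.

```python
import math


def rigid_fit_2d(src, dst):
    """Least-squares rotation + translation that maps src points onto dst points."""
    n = len(src)
    # Centroids of both point sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Dot and cross sums of the centred points give the optimal angle in closed form.
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy
        bx, by = qx - dx, qy - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that carries the rotated source centroid onto the target centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty


def apply_rigid_2d(points, theta, tx, ty):
    """Rotate points by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def rms_error(a, b):
    """Root-mean-square distance between corresponding points."""
    total = sum((ax - bx) ** 2 + (ay - by) ** 2 for (ax, ay), (bx, by) in zip(a, b))
    return math.sqrt(total / len(a))


# Hypothetical marker positions on the scan, split into two face regions.
scan = {
    "upper": [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)],
    "jaw": [(0.0, -2.0), (1.0, -2.0), (0.0, -3.0), (1.0, -3.0)],
}
# In the motion capture frame the jaw has dropped while the upper face stays put.
mocap = {
    "upper": list(scan["upper"]),
    "jaw": [(x, y - 0.5) for x, y in scan["jaw"]],
}

# Step 1: a single rigid transformation for the whole face.
all_scan = scan["upper"] + scan["jaw"]
all_mocap = mocap["upper"] + mocap["jaw"]
global_fit = rigid_fit_2d(all_scan, all_mocap)
global_rms = rms_error(apply_rigid_2d(all_scan, *global_fit), all_mocap)

# Step 2: one rigid transformation per region.
region_rms = {
    name: rms_error(apply_rigid_2d(scan[name], *rigid_fit_2d(scan[name], mocap[name])), mocap[name])
    for name in scan
}

print("global fit RMS error:", global_rms)
print("per-region RMS error:", region_rms)
```

In this toy setup the single rigid fit cannot follow the jaw and leaves a residual error at every marker, while the per-region fits match the markers essentially exactly. A real system would also have to blend the regions smoothly at their boundaries, which this sketch ignores.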

Low and high resolution mesh registrations.

The result of all this work is a set of very high resolution 3D meshes that embody not only the gross movements captured by the 100 point motion capture but also the finer movements that are implied by the larger scale motions. However, the problem isn't solved just yet because we only have motion data for the 100 marker points. To animate the finer mesh, intermediate points between the markers are identified in different scans as being the same facial feature. This is achieved by partitioning the face into regions and performing the matching within each region.

The finished product is a set of very high resolution snapshots of facial expressions which can be morphed into one another using the blendshape algorithm. Essentially, all that has been achieved is a way of creating a high resolution motion capture of the face without having to stick hundreds or thousands of marker dots on the actor's face - something that is close to impossible. The next step is to discover if the same quality can be achieved using even fewer motion capture markers.
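For readers who haven't met blendshapes, the idea can be sketched in a few lines of Python. This uses the common "delta" formulation, in which each expression is stored as an offset from a neutral face and expressions are mixed with weights. Whether the paper uses exactly this formulation is an assumption, and the three-vertex "mesh", the expression names and the `blend` function are invented for the illustration. The sketch only works because every snapshot has the same vertices in the same order, which is what the correspondence step described above provides.

```python
def blend(neutral, targets, weights):
    """Return neutral + sum over expressions of weight * (target - neutral), per vertex."""
    result = []
    for i, base in enumerate(neutral):
        vertex = list(base)
        for name, w in weights.items():
            tgt = targets[name][i]
            for axis in range(3):
                vertex[axis] += w * (tgt[axis] - base[axis])
        result.append(tuple(vertex))
    return result


# A toy mesh of three vertices; a real scan would have many thousands.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.5, 1.0, 0.0)]

# Hypothetical expression snapshots with the same vertex order as the neutral mesh.
targets = {
    "smile": [(0.0, 0.2, 0.0), (1.0, 0.2, 0.0), (0.5, 1.0, 0.0)],
    "brow_raise": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.5, 1.4, 0.1)],
}

# Halfway between neutral and a full smile.
half_smile = blend(neutral, targets, {"smile": 0.5})

# A full smile combined with a half-raised brow.
mixed = blend(neutral, targets, {"smile": 1.0, "brow_raise": 0.5})

print(half_smile)
print(mixed)
```

Animating the face then comes down to varying the weights over time, so the motion capture data only has to drive a handful of numbers per frame rather than every vertex of the high resolution mesh.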

More Information

Leveraging Motion Capture and 3D Scanning for High-fidelity Facial Performance Acquisition



Last Updated (Wednesday, 10 August 2011)