Decoding What The Eye Sees

Written by Mike James

Thursday, 17 May 2018

The latest breakthrough in brain function is the reconstruction of what the eye sees from the activity of neurons that form the optic nerve. Man-machine neural interfaces get closer.

Understanding the way that real neurons work together is a huge task, and we only take small steps towards the goal. The most recent work involved recording the signals from the neurons at the back of the retina and successfully reconstructing a video of randomly moving disks that was on display at the time.

If you think that this is an easy task then you need to keep in mind how real neurons work. The eye isn't like a video system with signal levels proportional to the light. A neuron fires off pulses at irregular intervals, even when nothing is happening. The rate of firing increases as the input stimulation increases. The network is a spiking network with rate of firing roughly correlated with activity.

The research group, Vicente Botella-Soler, Stéphane Deny, Georg Martius, Olivier Marre and Gašper Tkačik from Sorbonne Université, Max Planck Institute for Intelligent Systems and Institute of Science and Technology Austria, set out to record from 100 neurons in the retina of rats while they watched a video of randomly moving disks. The data was analysed in a number of ways, but it is surprising that the simplest approach, based on regularized linear regression, worked reasonably well.

One important point is that all of the analysis used acausal filters, which means that activity from both before and after each moment was used, as if it was a snapshot of the activity over a window of time. Causal processing, where the analysis is restricted to present and past activity, performed poorly. This means that it would be difficult to use the technique to show what the rats were looking at in real time.

Other, more sophisticated, techniques were tried, including a nonlinear version of the regression and a deep neural network.
As you might expect, both worked better than the linear approach, with the neural network doing as well as the best nonlinear regression.

A small but curious observation in the paper about the neural network is worth repeating in case anyone can explain it: "Interestingly, only around 42 units per hidden layer have non-zero connections after training. Although, if started with only 50 units we observed worse performance." The model started out with 150 units in the hidden layer.

You can see the reconstructed video versus the input video:

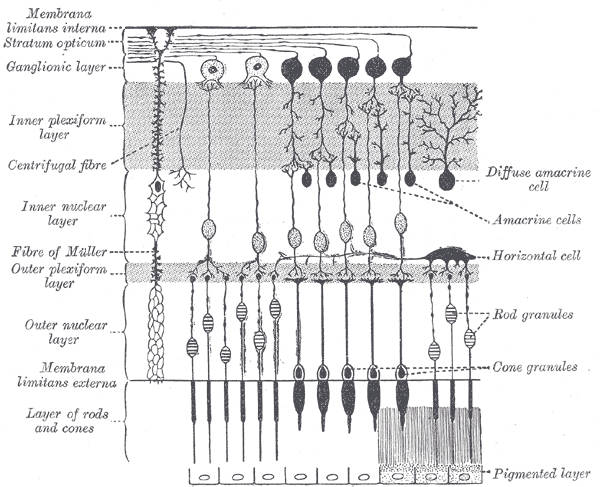
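
To make the causal versus acausal distinction concrete, here is a minimal sketch in Python with NumPy. It is not the authors' pipeline: the Poisson encoder, the response delay of three time bins, the neuron count, and the names `simulate_spikes`, `lagged_design`, `ridge_fit` and `decode_score` are all assumptions invented for illustration. It fits a ridge (regularized linear) regression from binned spike counts to a one-dimensional stimulus, once using only present and past bins and once using a window that also includes future bins.

```python
import numpy as np


def simulate_spikes(stimulus, n_neurons, rng, baseline=0.5, gain=5.0, delay=3):
    """Hypothetical toy encoder (an assumption, not the retina model in the paper).

    Each neuron emits Poisson spike counts per time bin. It fires at a
    baseline rate even with no input, and its rate rises with the stimulus
    that was shown `delay` bins earlier.
    """
    delayed = np.concatenate([np.zeros(delay), stimulus[:-delay]])
    weights = rng.uniform(0.5, 1.5, size=n_neurons)
    rates = baseline + gain * np.outer(delayed, weights)  # shape (T, n_neurons)
    return rng.poisson(rates)


def lagged_design(spikes, lags):
    """Build one feature row per time bin from spike counts at the given lags.

    A negative lag looks at past bins, zero at the present bin, and a
    positive lag at future bins (which is what makes a filter acausal).
    """
    n_bins = spikes.shape[0]
    blocks = []
    for lag in lags:
        shifted = np.zeros_like(spikes, dtype=float)
        if lag > 0:
            shifted[:-lag] = spikes[lag:]
        elif lag < 0:
            shifted[-lag:] = spikes[:lag]
        else:
            shifted[:] = spikes
        blocks.append(shifted)
    blocks.append(np.ones((n_bins, 1)))  # bias column
    return np.hstack(blocks)


def ridge_fit(X, y, alpha):
    """Closed-form ridge regression; the bias weight is left unpenalized."""
    penalty = alpha * np.eye(X.shape[1])
    penalty[-1, -1] = 0.0
    return np.linalg.solve(X.T @ X + penalty, X.T @ y)


def decode_score(spikes, stimulus, lags, alpha=1.0, train_frac=0.8):
    """Fit on the first part of the recording, report correlation on the rest."""
    X = lagged_design(spikes, lags)
    split = int(train_frac * len(stimulus))
    w = ridge_fit(X[:split], stimulus[:split], alpha)
    prediction = X[split:] @ w
    return np.corrcoef(prediction, stimulus[split:])[0, 1]


rng = np.random.default_rng(0)
n_bins = 5000
# Smoothed random signal in [0, 1] standing in for one pixel of the movie.
stimulus = np.convolve(rng.random(n_bins), np.ones(10) / 10, mode="same")
spikes = simulate_spikes(stimulus, n_neurons=20, rng=rng)

causal_r = decode_score(spikes, stimulus, lags=range(-5, 1))   # past and present only
acausal_r = decode_score(spikes, stimulus, lags=range(-5, 6))  # past, present and future

print(f"causal window:  r = {causal_r:.2f}")
print(f"acausal window: r = {acausal_r:.2f}")
```

In this toy setup the acausal window comes out ahead because each simulated neuron responds a few bins after the stimulus, so the most informative spikes for a given moment have not yet happened when a causal decoder has to commit to an answer. How much of the real retina's behaviour this captures is a matter for the paper, not this sketch.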

The team then go on to investigate the nature of the encoding performed by the retina:

"Here we proposed a simple mechanism to discriminate spontaneous from stimulus-driven activity using history dependence of neural spiking: because neuronal encoding is nonlinear, the effect of spike-history dependence on neural firing substantially differs between epochs in which the neuron also experiences a strong stimulus drive and epochs in which it does not. In such situations, nonlinear methods can discriminate between a true stimulus fluctuation and spontaneous-like firing from statistical structure intrinsic to individual spike trains, even when the mean firing rate doesn’t change appreciably between different epochs. This mechanism is not specific to the retina, and may well apply in other systems that display both stimulus-evoked and spontaneous activity."

Before you start to imagine uses of this technology that extend into science fiction, it is worth pointing out that the ganglion cells are the last layer of neurons that make up the retina. Their axons are bundled together to form the optic nerve. So, in engineering terms, we are tapping into the video feed cable rather than the brain. The brain takes the output of this layer and turns it into the rich representation of the world we take for granted. To find out how it does this, we need to move to the other end of the cable.

More Information

Botella-Soler V, Deny S, Martius G, Marre O, Tkačik G (2018) Nonlinear decoding of a complex movie from the mammalian retina. PLoS Comput Biol 14(5): e1006057. https://doi.org/10.1371/journal.pcbi.1006057

Related Articles

- Golden Goose Award To Hubel & Wiesel
- A Billion Neuronal Connections On The Cheap
- A Worm's Mind In An Arduino Body
- OpenWorm Building Life Cell By Cell
- IBM's TrueNorth Simulates 530 Billion Neurons
- The Influence Of Warren McCulloch


Last Updated (Thursday, 17 May 2018)