How Memory Works

Written by Harry Fairhead

Thursday, 16 May 2024

Exactly how does computer memory work? What is surprising is that it still works in more or less the same way as it did when Babbage designed his Analytical Engine or when the IBM 360 accessed core memory. So where do all our programs live?

We have already explored the "memory principle". Normally memory is described as a storage facility where data can be stored and retrieved by the use of an address. This is accurate but incomplete. A computer memory is a mechanism whereby, if you supply it with an address, it delivers up the data that you previously stored using that address. The subtle difference is that the whole process has to be automatic, and this posed two problems for the early computer builders: first, how to build something that stores data at all and, second, how to store and retrieve it automatically using a suitable address.

For example, a set of pigeon-hole-style mailboxes is often used as a model for memory. A letter comes in and someone puts it in the correct box. Then, when someone wants to retrieve the letter, they look for the correct name and pull any items out of the box. This isn't a model of computer memory. If it were, the letters would go into the correct box without any help and would retrieve themselves as soon as the name was known.

The point is that the human in your mental model, who puts the data into the correct pigeon hole and gets it out again, is part of the memory mechanism. When you build a working memory it isn't just the pigeon holes that you need to implement but also the "human" store-and-retrieve system. A working memory associates the data and the address without any mysterious intermediary looking for one based on the other. That is, to build a memory you have two problems:

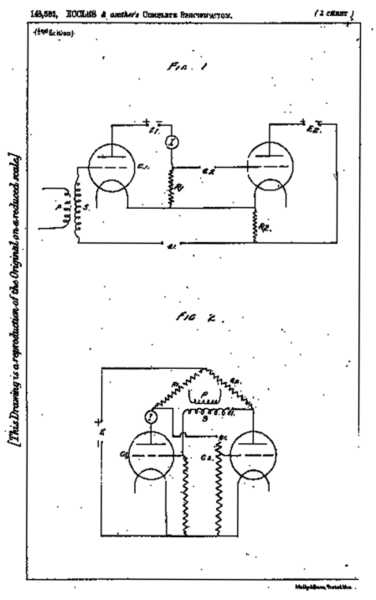
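One way to see what replaces the "human" in the pigeon-hole model is an address decoder: fixed logic that turns a binary address into a select signal for exactly one storage cell. The Python below is a minimal sketch under that assumption. `decode` and `Memory` are hypothetical names used for illustration, and the code models behaviour only, not the circuitry of any particular machine.

```python
def decode(address: int, n_address_lines: int) -> list[int]:
    """Turn a binary address into one select line per cell (exactly one is high)."""
    address_bits = [(address >> i) & 1 for i in range(n_address_lines)]
    select_lines = []
    for cell in range(2 ** n_address_lines):
        # Each select line is an AND of the address bits, each taken either
        # directly or inverted so that it matches the cell's own number.
        line = 1
        for i, bit in enumerate(address_bits):
            wanted = (cell >> i) & 1
            line &= bit if wanted else 1 - bit
        select_lines.append(line)
    return select_lines


class Memory:
    """Hypothetical memory: storage cells plus the decoder that selects them."""

    def __init__(self, n_address_lines: int) -> None:
        self.n_address_lines = n_address_lines
        self.cells = [0] * (2 ** n_address_lines)

    def write(self, address: int, data: int) -> None:
        # Only the cell whose select line is high accepts the data.
        for cell, selected in enumerate(decode(address, self.n_address_lines)):
            if selected:
                self.cells[cell] = data

    def read(self, address: int) -> int:
        # Every cell is gated by its select line, so only one contributes.
        value = 0
        for cell, selected in enumerate(decode(address, self.n_address_lines)):
            value |= self.cells[cell] if selected else 0
        return value


memory = Memory(n_address_lines=3)  # 8 cells
memory.write(5, 42)
print(decode(5, 3))    # [0, 0, 0, 0, 0, 1, 0, 0]
print(memory.read(5))  # 42
```

Nothing in this sketch searches for the data: the address itself, passed through the decoder's fixed logic, picks out the cell, which is the automatic association between address and data that the text describes.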

The odd thing is that right back at the very beginning, how to implement a computer memory was fairly obvious and relatively easy. The numbers were stored in a natural fashion on cog wheels and it was relatively easy to think up a mechanical system to address the memory. The trouble really begins when we move into the era of electronics. The first electronic computers used valves, which were very good at amplifying and switching, and while it was relatively easy to see how they could be used to build logic gates, how to use them to create memory didn't seem as obvious.

Memory Dynamic And Static

In fact the problem had been more or less solved in 1919, before any of the great valve-based computers were started. Two UK physicists, Eccles and Jordan, invented a circuit that used two valves. Two trigger lines could be used to turn one of the valves on and the other off. With a little more work they invented a whole family of two-valve circuits, all of which depended on one valve switching the other valve off.

From Eccles and Jordan's patent.

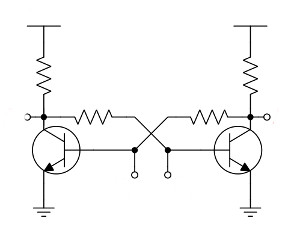

One of these circuits was the "bi-stable latch". This had a single input line, which could be high or low, and a single "enable" or "latch" line. When the latch line was low the output of the bi-stable followed the input, but when the latch line was high the output was fixed at its last value irrespective of the input. You can think of this as the action of a memory: when the latch is high the bi-stable remembers the input that was applied to it. It is a simple circuit, but it was the basis of most early high-speed computer memory. Later the same circuit was translated into transistors and then into integrated circuits. The only problem with this arrangement is that two valves, or transistors, are required for every bit stored; at any given time one is on and the other off.
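The latch behaviour described above can be simulated in a few lines. This minimal sketch assumes a modern logic-level stand-in for the two valves: a pair of cross-coupled NOR gates, each of which holds the other off, gated so that the output follows the data line while `latch` is low and holds its value while `latch` is high, matching the convention in the text. The names `nor` and `BistableLatch` are hypothetical, and this is an illustrative logic model, not a reconstruction of the Eccles and Jordan valve circuit.

```python
def nor(a: int, b: int) -> int:
    """NOR gate: the output is 1 only when both inputs are 0."""
    return 0 if (a or b) else 1


class BistableLatch:
    """Two cross-coupled NOR gates standing in for the two valves."""

    def __init__(self) -> None:
        self.q = 0      # output
        self.q_bar = 1  # the other "valve": always the opposite of q once settled

    def apply(self, data: int, latch: int) -> int:
        # While latch is low, the data line drives either set or reset;
        # while latch is high, both are held at 0 so the pair keeps its state.
        set_line = data & (1 - latch)
        reset_line = (1 - data) & (1 - latch)
        # Let the cross-coupled pair settle; a few passes is enough here.
        for _ in range(4):
            self.q = nor(reset_line, self.q_bar)
            self.q_bar = nor(set_line, self.q)
        return self.q


cell = BistableLatch()
print(cell.apply(data=1, latch=0))  # 1: output follows the input
print(cell.apply(data=0, latch=1))  # 1: latched, the input is ignored
print(cell.apply(data=0, latch=0))  # 0: follows the input again
```

The two gates never agree once settled: whichever one is "on" forces the other "off", which is exactly why two active devices are needed for every stored bit.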

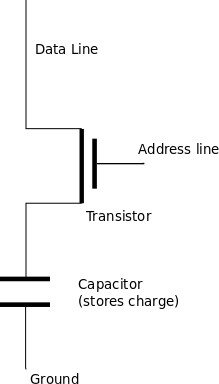

Transistor Memory Element

The modern method of doing the same job is to use a single transistor as a switch that stores a charge on a single capacitor. If the capacitor is charged then a 1 is stored; if not, a 0. The problems with this arrangement are that the charge leaks away and that reading the memory location wipes out its charge.
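A toy model makes the two weaknesses of the one-transistor cell, and the refresh that cures them, concrete. This sketch assumes an exponential leak with made-up constants (`LEAK`, `THRESHOLD`) and treats a refresh as a read followed by a write-back; `DramCell` is a hypothetical name, and real DRAM timing and sensing are far more involved.

```python
class DramCell:
    """Toy one-transistor, one-capacitor cell: the bit is a leaking charge."""

    FULL = 1.0       # charge just after writing a 1 (arbitrary units)
    THRESHOLD = 0.5  # sensing threshold: above this the cell reads as 1
    LEAK = 0.9       # fraction of charge left after each tick (made-up rate)

    def __init__(self) -> None:
        self.charge = 0.0

    def write(self, bit: int) -> None:
        self.charge = self.FULL if bit else 0.0

    def tick(self) -> None:
        # Charge leaks away whether or not anyone uses the cell.
        self.charge *= self.LEAK

    def read(self) -> int:
        bit = 1 if self.charge > self.THRESHOLD else 0
        self.charge = 0.0  # sensing drains the capacitor: the read is destructive
        self.write(bit)    # so the sensed value must be written straight back
        return bit

    def refresh(self) -> None:
        # A refresh is just a read whose result is thrown away.
        self.read()


kept, neglected = DramCell(), DramCell()
kept.write(1)
neglected.write(1)
for t in range(1, 21):
    kept.tick()
    neglected.tick()
    if t % 5 == 0:
        kept.refresh()  # refreshed before the charge falls below threshold
print(kept.read(), neglected.read())  # 1 0
```

With these constants a stored 1 survives six ticks but not seven, so refreshing every five ticks keeps it alive indefinitely while the neglected cell quietly turns into a 0.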

To get over these problems the memory location has to be periodically refreshed by being read and then rewritten with its contents. Because of this, the single-transistor arrangement is called "dynamic" memory and the bi-stable arrangement is called "static" memory. Most computers today still use dynamic memory for their main memory and have to include refresh cycles to keep it from losing data, which slows things down.

More Storage

This is all very well, but even single transistors were expensive, and if you want to store lots of data you need something cheaper and with more capacity. The problem was even worse if you were using valves, as they were big, power hungry and failed often. Although storage built from electronic components such as valves and transistors was the most obvious form of memory, engineers have long needed to look for other methods to provide larger amounts of storage.

Back in the early days Eckert (one of the designers of ENIAC, one of the first electronic computers) thought of using a delay line. The basic idea was to take a long tube of mercury and place a loudspeaker at one end and a microphone at the other. Sound waves injected at one end took time to get to the other end, so you could store a stream of pulses in the tube. The problem was that the pulses had to be recirculated, and you could only retrieve or store a particular bit when it came out of the tube and was on its way back to the loudspeaker. This created all sorts of problems for the early programmers. Indeed, Alan Turing's only real computer design, the ACE, had a machine code that included a value for the timing of the pulse that was to be stored or retrieved. He even worked out ways of optimising his programs relative to the circulating pulses in the delay line, something he elevated to the status of a high art. After the era of the delay line, more obviously electronic methods started to take over.
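The timing problem that plagued delay-line programmers shows up clearly in a small simulation. This sketch assumes a line holding a fixed number of bits with one bit emerging per tick; `DelayLine` and its methods are hypothetical, and the model ignores words, amplification and the real timing of any actual machine. What it does show is that the cost of an access depends on where the wanted bit happens to be in its circuit.

```python
from collections import deque


class DelayLine:
    """Recirculating store: a bit can only be touched as it leaves the tube."""

    def __init__(self, n_bits: int) -> None:
        self.n_bits = n_bits
        self.pulses = deque([0] * n_bits)  # pulses[0] is emerging right now
        self.emerging = 0                  # address of the bit in pulses[0]

    def tick(self) -> None:
        # The emerging pulse is fed straight back in at the far end.
        self.pulses.rotate(-1)
        self.emerging = (self.emerging + 1) % self.n_bits

    def _wait_for(self, address: int) -> int:
        """Let the line circulate until the wanted bit emerges; return ticks waited."""
        if not 0 <= address < self.n_bits:
            raise IndexError(f"address {address} is not in this delay line")
        waited = 0
        while self.emerging != address:
            self.tick()
            waited += 1
        return waited

    def read(self, address: int) -> tuple[int, int]:
        waited = self._wait_for(address)
        return self.pulses[0], waited

    def write(self, address: int, bit: int) -> int:
        waited = self._wait_for(address)
        self.pulses[0] = bit
        return waited


line = DelayLine(n_bits=8)
print(line.write(5, 1))  # 5: had to wait for bit 5 to come round
print(line.read(5))      # (1, 0): it is emerging right now
print(line.read(6))      # (0, 1): the next bit is only one tick away
print(line.read(5))      # (1, 7): going back means almost a full trip round
```

Asking for bit 5 just after bit 6 costs almost a full circulation, which is the kind of waste that careful placement of instructions and data relative to the circulating pulses was meant to avoid.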
Continually recirculated storage did have a brief reprieve when Williams invented a storage system based on a CRT (Cathode Ray Tube). The idea was that bits were stored as charged dots on the face of a TV tube and a metal pickup plate over the screen was used to detect their presence or absence; because the charge faded, the dots had to be continually read and rewritten. It was a good idea and it worked well, but the tubes were bulky and expensive.


Last Updated (Sunday, 26 May 2024)