Inside Kinect

Wednesday, 16 June 2010


The technology behind Microsoft's Kinect (Project Natal) whole-body user interface is there for anyone to use; you might even be able to implement it using nothing but software.

Microsoft has renamed Project Natal, its new game interface, as Kinect. Don't you think that most of Microsoft's code names are better than the final monikers they adopt for the finished, or nearly finished, product? While there is a lot of overhyped and staged excitement about the new human interface, it is interesting to know that the same technology is already generally available. Natal/Kinect seems to be the next step down the gestural interface road, but notice that this step is mainly soft rather than hard: the new Kinect add-on for the XBOX 360 is mainly an innovation in software.

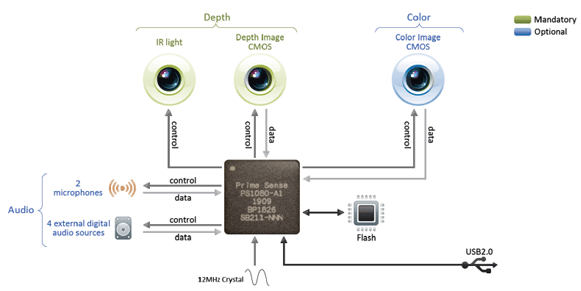

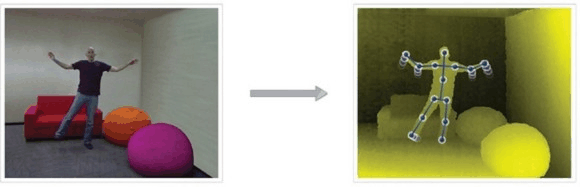

Kinect is a box of sensors that uses IR illumination to obtain depth data and also captures color images and sound. The IR is used as a distance-ranging device, much in the same way as a camera's autofocus works. It is claimed that the system can measure distance with 1 cm accuracy at 2 m and has a resolution of 3 mm at 2 m. The depth image is 640x480, i.e. standard VGA resolution, and the color image is 1600x1200. A custom chip processes the data to provide a depth field that is correlated with the color image; that is, the software can match each pixel with its approximate depth. The preprocessed data is fed to the machine via a USB interface in the form of a depth field map and a color image.
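To make "match each pixel with its approximate depth" concrete, here is a minimal Python sketch. It assumes the 640x480 depth map has already been registered to the 1600x1200 color image (the correlation step described above), so both images cover exactly the same view and a color pixel maps to a depth pixel by scaling alone. The function name `depth_at_color_pixel` and the list-of-rows layout are hypothetical, not part of any Kinect or PrimeSense API.

```python
# Hypothetical sketch. Assumption: the depth map is already registered to the
# color image, so both describe the same field of view.
DEPTH_W, DEPTH_H = 640, 480    # depth image size (VGA)
COLOR_W, COLOR_H = 1600, 1200  # color image size


def depth_at_color_pixel(depth_map, cx, cy):
    """Return the depth value behind color pixel (cx, cy).

    depth_map is a list of DEPTH_H rows, each holding DEPTH_W depth values.
    Since the images are assumed aligned, the color coordinates are simply
    scaled down (1600/640 == 1200/480 == 2.5) to find the depth pixel.
    """
    if not (0 <= cx < COLOR_W and 0 <= cy < COLOR_H):
        raise ValueError("color pixel out of range")
    dx = cx * DEPTH_W // COLOR_W  # integer division picks the covering depth column
    dy = cy * DEPTH_H // COLOR_H  # ...and the covering depth row
    return depth_map[dy][dx]


# Example with a synthetic depth map whose values encode their own position.
depth_map = [[row * 1000 + col for col in range(DEPTH_W)] for row in range(DEPTH_H)]
print(depth_at_color_pixel(depth_map, 25, 5))  # depth pixel (x=10, y=2): prints 2010
```

Each depth pixel covers a 2.5 x 2.5 block of color pixels. A real device also has to correct for the physical offset between its IR and color cameras, which this sketch ignores.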

The PrimeSense reference implementation

At this point you might be wondering why a depth map is so important. The answer is that many visual recognition tasks are much easier with depth information. If you try to process a flat 2D image, pixels with similar colors that are near each other might not belong to the same object. If you have 3D information, pixels that correspond to locations physically near each other tend to belong to the same object, irrespective of their color! It has often been said that pattern recognition has been made artificially difficult because most systems rely on 2D data.

Microsoft acquired the basic hardware for Kinect from another company, PrimeSense, and this means that the same technology is available off the shelf. So far Microsoft hasn't acquired the company, just the technology to base its own input device on. Microsoft developed its own recognition software to work with the imported hardware, but PrimeSense also has some middleware, NITE, which already performs gesture recognition and even goes as far as allowing users to control devices and play games: "The NITE framework also offers a set of developer tools for customizing game controls and adding new functionality – all without having to write a single line of depth processing code."
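The claim that 3D information makes grouping easier can be illustrated with a simple region-growing sketch in Python: neighboring pixels are joined into one region when their depths are close, and color is never consulted. This is a generic flood-fill technique chosen for illustration, not the recognition method used by Microsoft or in NITE; the function name `segment_by_depth` and the `max_step` threshold are hypothetical.

```python
from collections import deque


def segment_by_depth(depth, max_step=30):
    """Label connected regions of a depth map (a list of equal-length rows).

    Two 4-connected neighbors join the same region when their depths differ
    by at most max_step (a hypothetical threshold, in the depth map's units).
    Returns a grid of integer region labels with the same shape as depth.
    """
    h, w = len(depth), len(depth[0])
    labels = [[-1] * w for _ in range(h)]
    next_label = 0
    for y in range(h):
        for x in range(w):
            if labels[y][x] != -1:
                continue  # already part of an earlier region
            # Breadth-first flood fill starting from this unlabeled seed pixel.
            labels[y][x] = next_label
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < h and 0 <= nx < w and labels[ny][nx] == -1
                            and abs(depth[ny][nx] - depth[cy][cx]) <= max_step):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            next_label += 1
    return labels


# Example: a person (about 1500 mm away) standing in front of a wall (3000 mm).
scene = [
    [3000, 3000, 3000, 3000],
    [3000, 1500, 1510, 3000],
    [3000, 1505, 1520, 3000],
]
print(segment_by_depth(scene))  # prints [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0]]
```

The person separates cleanly from the wall whatever colors they are wearing. Because the test only compares neighboring pixels, a surface that slopes gradually away, such as a floor, stays in a single region.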

So you too can have a Kinect-like input device, complete with a framework, working with your application. So much for Microsoft's head start in the new world of whole-body user interfaces. Of course, Microsoft controls the technology, and access to it, on the XBOX platform, but the technology is much more open on other platforms, including the PC, than you might have suspected. Taking this just a little further, you don't have to be an expert in AI to realize that even the hardware aspects of the PrimeSense device aren't really necessary. You can do scene depth analysis using just two standard video cameras and some parallax processing code. It's not as good as having a hardware-generated depth map, but it might be good enough. In other words, whole-body input may not need any special hardware at all.
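The two-camera alternative rests on standard stereo geometry: a point at depth Z appears shifted horizontally by a disparity d between the two images, with Z = fB/d, where f is the focal length in pixels and B is the distance (baseline) between the cameras. The Python sketch below is a deliberately tiny, single-scanline illustration of that idea using sum-of-absolute-differences (SAD) block matching. It assumes rectified grayscale images, so matching points lie on the same row, and the function names and parameter values are hypothetical. It is not how Kinect itself computes depth.

```python
import math


def disparity_sad(left_row, right_row, x, half_window=2, max_disparity=16):
    """Return the disparity, in pixels, of left_row[x] within right_row.

    Tries every shift d in 0..max_disparity and keeps the one whose window of
    right-image pixels has the smallest SAD from the window around x in the
    left image. Assumes rectified cameras, so the match lies on the same row,
    shifted to the left in the right image.
    """
    if not (half_window <= x < len(left_row) - half_window):
        raise ValueError("window around x must fit inside the row")
    best_d, best_cost = 0, math.inf
    for d in range(max_disparity + 1):
        if x - d - half_window < 0:
            break  # the window would fall off the left edge of the right row
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-half_window, half_window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Pinhole stereo geometry: Z = f * B / d (Z is in the baseline's units)."""
    if disparity_px <= 0:
        return math.inf  # no measurable shift: the point is effectively at infinity
    return focal_px * baseline_m / disparity_px


# Example: a bright feature at x=10 in the left row appears at x=6 in the right row.
left = [0] * 20
left[10], left[11] = 100, 50
right = [0] * 20
right[6], right[7] = 100, 50
d = disparity_sad(left, right, 10)
print(d, depth_from_disparity(d, focal_px=500, baseline_m=0.1))
```

With the assumed 500-pixel focal length and 10 cm baseline, a 4-pixel disparity corresponds to 500 * 0.1 / 4 = 12.5 m, while a 50-pixel disparity would mean 1 m. Because depth varies as 1/d, each pixel of disparity covers a larger range of depths as objects get farther away, which is why stereo systems lose precision for distant objects.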

While it might look as if Microsoft has whole-body user interfaces sewn up until some other big company catches up, this isn't the case. The technology is there for smaller companies to use, and if you feel like experimenting with creating your own depth maps, perhaps you could even make an open source equivalent.

Further reading: Getting Started with PC Kinect; A Kinect Princess Leia hologram in realtime


Last Updated (Monday, 31 January 2011)