| pgstream - Real Time Change Data Capture For PostgreSQL |

| Written by Nikos Vaggalis | |||

| Monday, 16 September 2024 | |||

|

As we've said before, Postgres is for everything, for all the stacks. Now add Change Data Capture to that list too, thanks to pgstream. When we say stacks, we mean the Data stack, the ML stack, the Time Series stack, Spatial Data Management, Real Time Streaming, ETL and so on. PostgreSQL is not just a DBMS any more but has been turned into a Swiss Army Knife that can do just about anything. I won't give specific examples since we here at IProgrammer have dealt with that extensively, so if you're interested just browse the site to find many PostgreSQL-related articles. With that out of the way, let's concentrate on this latest tool, pgstream. Pgstream is a standalone utility and not an extension for Postgres, and is built by the folks at Xata, the serverless data platform built on top of PostgreSQL that provides a full-text and vector search engine, record-level file attachments and table-level aggregations amongst other functionality. The official definition of pgstream is that it facilitates Postgres replication with DDL changes. What does that encompass?

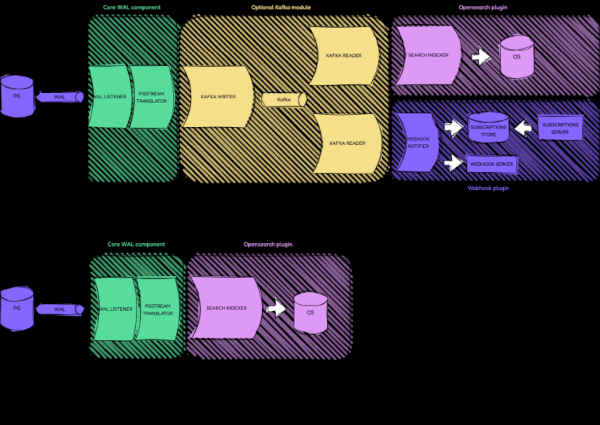

All this diverse functionality is there because pgstream is modularized, while at its core lies the WAL listener, a listener that listens to WAL data, regardless of the source which consumes and manages the WAL events, delegating the processing of those entries to the modules that form the processing pipeline.

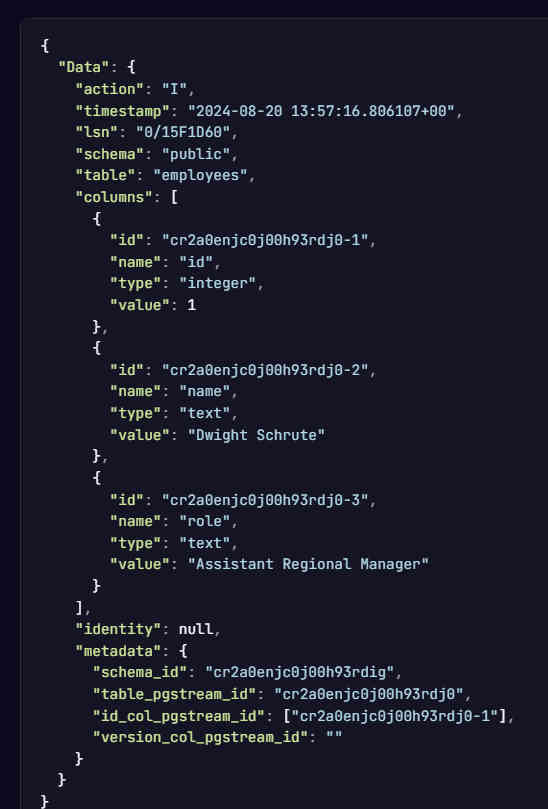

The killer selling point of course is that of real time schema change replication. Unlike other CDC solutions, pgstream uniquely tracks and replicates schema changes continuously, taking explicit actions on DDL changes and triggering events downstream. As far as the the full-text search feature goes, baked into Xata too, it uses Elasticsearch on top of Postgres. To keep these two datastores in sync, it captures and identifies data and schema changes in Postgres and pushes these modifications downstream to Elasticsearch with minimal latency. Then, firing Web hooks is useful upon whenever there's a data (or schema) change in the database and there's need to propagate that data to an external endpoint, like a Web service. This means that whenever a row is inserted, updated, or deleted, or a table is created, altered, truncated or deleted, a webhook which is subscribed to the relevant wal event is notified of the relevant event details. The firing of the webhook is happening after the DDL is committed since it relies on a trigger that populates the pgstream.schema_log table with the schema in JSON format, and this should only happen once the operation is committed. That entry in the pgstream.schema_log table is then replicated downstream and processed by the webhook notifier to send the notifications to subscribed webhook URLs. So when the following DDL is happening: INSERT INTO employees(name, role) VALUES('Dwight Schrute', 'Assistant Regional Manager'); This is what is produced and propagated to the Webhook.

So simple. Contrast that with of the trouble I had to go through when manually implementing a CDC/Webhook functionality that involved a complex pipeline of triggers and database events under the Ingres dbms in order to transform and manipulate data collected by SQL queries into HL7 packets before they got automatically sent to a Web service at the end of the pipeline. Enough said, let's see how you can get started with pgstream. For that you need a Postgres instance that has logical replication enabled and the wal2json output plugin loaded. There are a number of ways to do this, but the most straight forward is with the docker-compose file from the pgstream repo: git clone https://github.com/xataio/pgstream.git then start the Postgres instance: docker compose -f build/docker/docker-compose.yml up db Then, in a new terminal window, you can connect to it and create a simple table to play with: psql "postgres://postgres:postgres@localhost:5432/postgres"; Now install pgstream. Since we already have the repo cloned we build it from source: go build The above assumes you have Go installed. The next step is to initialize pgstream, like this: ./pgstream init --pgurl "postgres://postgres:postgres@localhost/postgres?sslmode=disable"; This creates several tables managed by pgstream, which are grouped in a schema called pgstream. After that you are good to go. Utility format aside, pgstream is also offered as library invokable from Go code. There's no official documentation on this yet but as an example of how it's used internally by the pgstream CLI in the stream pkg check this official example.

More InformationRelated ArticlesPeerDB Brings Real Time Streaming To PostgreSQL

To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on Twitter, Facebook or Linkedin.

Comments

or email your comment to: comments@i-programmer.info |