Azul's Cloud Native Compiler - Why Share The JIT Compiler?

Written by Nikos Vaggalis

Monday, 14 March 2022

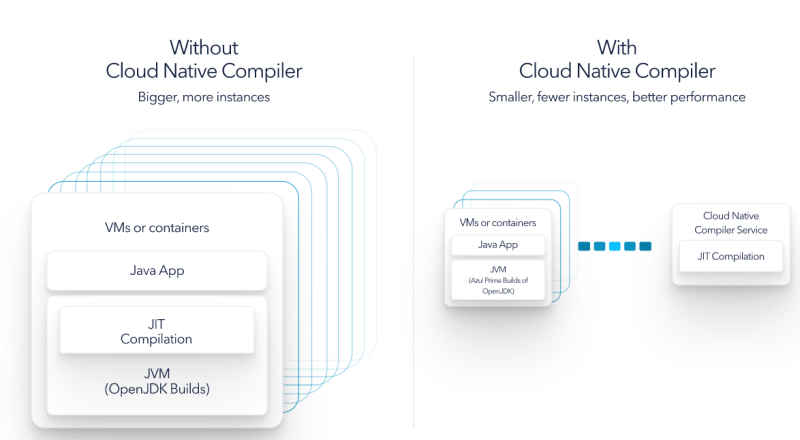

Azul's Cloud Native Compiler is targeted at organizations with multiple dev teams who share a common environment. Instead of each machine running JIT compilation locally, the work is offloaded to a cloud service that shares a JIT compiler. Why is that beneficial?

As we all know, when running code on the JVM there's a JIT compiler that turns the bytecode into machine code. Typically this happens locally on each dev's PC. What Azul offers is to offload this JIT compilation to a cloud-native, Kubernetes-based compiler, which has distinct advantages over lone local compilation. Firstly, the local resources are not stressed as much, because they now spend less CPU and RAM on compilation. Secondly, since a lot of libraries and code are common amongst local dev machines, sharing their compilation in a common repository allows for caching, better dynamic resource optimization, faster compilation and lower memory requirements. Specifically, when you use Cloud Native Compiler on your Java workloads:
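To make the caching argument concrete, here is a minimal sketch of a compilation cache shared between several clients. It is not Azul's API or implementation: the class `SharedCompileCache`, its methods and the stand-in "compiler" function are all hypothetical names invented for illustration. The only idea it demonstrates is that identical bytecode, fingerprinted by a hash, needs to be compiled just once no matter how many clients ask for it.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Hypothetical illustration only: not part of any Azul product.
final class SharedCompileCache {
    // Maps a fingerprint of the bytecode to the "compiled" result.
    private final Map<String, byte[]> cache = new ConcurrentHashMap<>();
    // Counts how often the expensive compile step really ran.
    private final AtomicInteger compilations = new AtomicInteger();
    // Stand-in for a real JIT compiler (assumption: bytes in, bytes out).
    private final Function<byte[], byte[]> compiler;

    SharedCompileCache(Function<byte[], byte[]> compiler) {
        this.compiler = compiler;
    }

    // Returns the cached result, compiling only on the first request.
    byte[] getOrCompile(byte[] bytecode) {
        String key = fingerprint(bytecode);
        return cache.computeIfAbsent(key, k -> {
            compilations.incrementAndGet();
            return compiler.apply(bytecode);
        });
    }

    int compilationCount() {
        return compilations.get();
    }

    // SHA-256 of the bytecode, so equal content yields an equal key.
    static String fingerprint(byte[] bytecode) {
        try {
            MessageDigest sha = MessageDigest.getInstance("SHA-256");
            return Base64.getEncoder().encodeToString(sha.digest(bytecode));
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 not available", e);
        }
    }

    public static void main(String[] args) {
        // The fake compiler just reverses the bytes.
        SharedCompileCache shared = new SharedCompileCache(in -> {
            byte[] out = new byte[in.length];
            for (int i = 0; i < in.length; i++) {
                out[i] = in[in.length - 1 - i];
            }
            return out;
        });
        byte[] commonLibrary = "common-library-method".getBytes(StandardCharsets.UTF_8);
        // Two "machines" submit the same library code.
        shared.getOrCompile(commonLibrary);
        shared.getOrCompile(commonLibrary.clone());
        System.out.println("compilations: " + shared.compilationCount());
    }
}
```

In this toy version the second request is served from the cache, so the compile step runs once for the shared library code. A real service would also have to account for things like target CPU features and profiling data, which this sketch deliberately ignores.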

I don't know if Azul is basing its technique on it, but there was a 2018 research paper called "ShareJIT: JIT Code Cache Sharing across Processes and Its Practical Implementation" which is close to the underlying concept, albeit in the context of the Android Runtime (ART):

Just-in-time (JIT) compilation coupled with code caching are widely used to improve performance in dynamic programming language implementations. These code caches, along with the associated profiling data for the hot code, however, consume significant amounts of memory. Furthermore, they incur extra JIT compilation time for their creation. On Android, the current standard JIT compiler and its code caches are not shared among processes; that is, the runtime system maintains a private code cache, and its associated data, for each runtime process. However, applications running on the same platform tend to share multiple libraries in common. Sharing cached code across multiple applications and multiple processes can lead to a reduction in memory use. It can directly reduce compile time. It can also reduce the cumulative amount of time spent interpreting code. All three of these effects can improve actual runtime performance.

The concept is now shifted over from the Android platform and generally applied to the cloud, where DevOps lives and breathes on the reduced startup times that microservices depend on.

And it's not the first time that Android has shown the way forward. It happened before with AOT compilation, as examined in "Micronaut 3.2 Released for More Performant Microservices":

Micronaut gives you the same productivity boon as Spring, since the notions and code written against it are very similar, which of course makes the transition a straightforward endeavor, by providing the same dependency injection, inversion of control (IoC), and Aspect-Oriented Programming (AOP), but with massive memory and startup time savings at the same time. The underlying technology was already there, on Android.

The pointer, of course, is to Ahead Of Time compilation, a technique which determines what the application is going to need at compile time in order to avoid reflection completely, thus keeping memory requirements down and performance up by doing more things at compile time than at runtime. Now it was time for others to pick the concept up and adapt it to the requirements of the cloud, which is exactly what Micronaut did.

Micronaut is a generic application framework, so it can be used to build not just microservice applications but also other kinds, like event-driven ones. It generates additional code at compile time to implement things like dependency injection and to avoid runtime proxies. The trade-off is slower build time vs. faster runtime.

But if one of the aims is to foster a faster runtime, why not go for AOT and GraalVM? From "GraalVM Under The Covers":

GraalVM allows Ahead-Of-Time (AOT) compilation of applications to native images. Usage of native images can have significant performance implications. Such executables do not impress with peak performance, but a fast startup and low runtime overhead could make a significant difference in a contemporary cloud or serverless production environment.

We have already seen this in practice in "Compile Spring Applications To Native Images With Spring Native":

Spring Native lets you compile Spring applications to native images using the GraalVM native-image compiler. What's the advantage in that? Instant startup, instant peak performance, and reduced memory consumption, since the native Spring applications are deployed as a standalone executable (well, a Docker image) without including a JVM installation. What's the disadvantage? It's that the GraalVM build process, which tries to make the most optimal image possible, throws a lot of stuff out. This could be dependencies, resources or parts of your code.

The problem with that approach is, once again, mainly the trade-off of slower build time vs. faster runtime.

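The difference between resolving dependencies by reflection at runtime and wiring them at compile time can be sketched in plain Java. This is not Micronaut's generated code: `WelcomeServiceFactory` is written by hand here as a stand-in for what a compile-time annotation processor might emit, and every class name in the example is hypothetical.

```java
import java.lang.reflect.Constructor;

interface Greeter {
    String greet(String name);
}

final class EnglishGreeter implements Greeter {
    public String greet(String name) {
        return "Hello, " + name;
    }
}

final class WelcomeService {
    private final Greeter greeter;

    WelcomeService(Greeter greeter) {
        this.greeter = greeter;
    }

    String welcome(String name) {
        return greeter.greet(name) + "!";
    }
}

// Runtime approach: constructors are looked up and invoked reflectively,
// so the work (and any failure) happens while the application starts.
final class ReflectiveInjector {
    static WelcomeService create() {
        try {
            Greeter greeter = EnglishGreeter.class.getDeclaredConstructor().newInstance();
            Constructor<WelcomeService> ctor =
                    WelcomeService.class.getDeclaredConstructor(Greeter.class);
            return ctor.newInstance(greeter);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("wiring failed at runtime", e);
        }
    }
}

// Compile-time approach: a hand-written stand-in for generated code.
// The wiring is ordinary Java, checked by the compiler, with no reflection.
final class WelcomeServiceFactory {
    static WelcomeService create() {
        return new WelcomeService(new EnglishGreeter());
    }
}

final class DiDemo {
    public static void main(String[] args) {
        System.out.println(ReflectiveInjector.create().welcome("Ada"));
        System.out.println(WelcomeServiceFactory.create().welcome("Ada"));
    }
}
```

Both paths produce the same object graph. The second one moves the cost to build time, which is the trade-off described above, and it leaves nothing for a native-image build to discover through reflection.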
As it currently stands, building a native executable with GraalVM takes a lot of time, which in fact cancels out the aspect of seeking build speed. It also misses out on the optimizations that can be achieved by observing the common requirements shared amongst the bytecode offloaded to the cloud.

Cloud Native Compiler is part of Azul Platform Prime Stream Builds, Azul's commercial subscription service, but free for evaluation and development. Stream Builds are released monthly and contain all new features, bug fixes, and performance enhancements that are ready for production. Updates are not provided on Stream Builds, only on Stable Builds. So internal Azul fixes and, more importantly, OpenJDK CPU and PSU fixes only make their way into Stream Builds in the monthly release after they were introduced in Stable Builds.

Azul has very recently updated the evaluation terms for Azul Platform Prime, and as things currently stand, Stream Builds are free for evaluation and development, but you need a license for use in a production environment.

More Information

Azul Platform Prime Stream Builds

Azul Platform Prime Stream Builds Are Now Free for Evaluation and Development

Related Articles

ShareJIT: JIT Code Cache Sharing across Processes and Its Practical Implementation

Micronaut 3.2 Released for More Performant Microservices

Quarkus 2.7.1 Released - Why Quarkus?


Last Updated (Monday, 14 March 2022)