Apple Revamps Siri, Unveils HomePod and Opens Up HomeKit To Developers

Written by Lucy Black

Wednesday, 14 June 2017


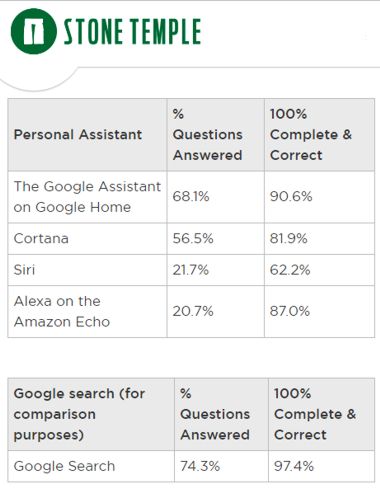

At last week's WWDC, Apple's annual developer conference, Apple made a number of announcements relating to Siri, in the areas of both AI/machine learning and IoT. Siri, Apple's virtual assistant, may have been the first of its genre, but it has been overshadowed by Microsoft's Cortana, Amazon's Alexa and the Google Assistant on Google Home, so the news from WWDC represents a catch-up exercise to try to stay abreast of the competition. In terms of numbers Siri still seems to be a leader: it is used on more than 375 million devices each month, and it is available in more languages (21) and more countries (36) than any other assistant. In terms of its main job, however, which is to assist people by answering their questions, it performs very poorly in comparison with the Google Assistant and Cortana. Research by the digital marketing firm Stone Temple, which asked the same 5,000 questions of each assistant and of Google Search, gave these results:

Most of the enhancements to Siri announced at WWDC won't improve its ability to tackle general questions, but address other issues instead. Siri's updated interface will feature a new, more expressive voice, in both male and female versions, and a new visual interface. Siri will also be able to give multiple results when you ask a question, which goes some way towards addressing the difficulty of giving a single, complete answer. Surprisingly, in HomePod, Apple's major new product and its counterpart to Google Home and Amazon Echo, Siri is present but its question-answering ability takes a back seat. Instead, HomePod is intended as an "incredibly deep and intuitive music ecosystem that lives everywhere you do". Apple is putting a lot of emphasis on the sound quality of the HomePod speaker and encourages users to use multiple units, suggesting you put two in the same room, where they will automatically detect and balance each other for more lifelike sound.

HomePod is primarily designed to integrate with the Apple Music subscription service, and Siri's chief role is that of a "musicologist who helps you discover every song you’d ever want to hear". HomePod includes a machine-learning-powered recommender, so if you say "Hey Siri, I like this song" you can expect to hear similar tracks, and over time HomePod will learn your preferences. Siri will also be able to answer questions about the music it plays, such as "Hey Siri, who's the drummer in this song?". In addition, Siri can respond to general questions, provide a news briefing or a weather forecast via HomePod and, in conjunction with HomeKit-enabled devices, can turn lights and other devices on and off. HomePod is scheduled to go on sale in December in the US, the UK and Australia, priced at $349 in the US, but there is no mention, so far, of a HomePod SDK. The HomeKit SDK, however, is being overhauled as well as opened up to hobbyists.

Apple announced at WWDC that it will let any registered developer build HomeKit devices, although unless these devices are licensed and authenticated they can’t be sold. This will let developers use all sorts of hardware to try out HomeKit devices, tinkering for either personal or business reasons. To lower the barrier to entry, device makers no longer need to include a dedicated authentication chip for their smart home devices to work with HomeKit. Instead, companies will be able to authenticate their devices with Apple entirely through software. The new categories of devices supported by HomeKit are sprinklers and faucets. Other updates to the SDK include the ability to track specific people; temperature triggers, for events like switching on heating or air conditioning; and an enhancement to the sunrise and sunset triggers so that they can now be offset by an amount of time, allowing users to turn on the lights before it gets dark. In this video of a WWDC session, Matt Lucas and Praveen Chegondi of Apple's HomeKit Engineering team discuss how to use these new features:

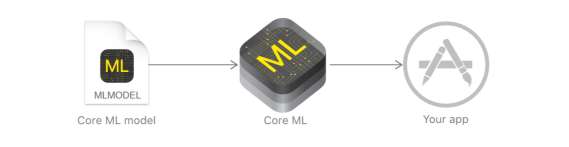

As we reported last week, Apple has made ARKit available. It requires iOS 11, which is now in beta, and will let developers integrate iOS device camera and motion features to produce augmented reality experiences in apps or games. With iOS 11, Siri is getting a translation feature that will handle English to Chinese, French, German, Italian and Spanish, with more language combinations coming soon. Siri will also use "on-device learning" to be more predictive about what you want next, based on your existing use of the digital assistant. Both of these features take advantage of machine learning. CoreML, a machine learning framework for developers to use across Apple products, including Siri, was also announced at WWDC. It seems to be along the lines of Microsoft's Cognitive Services (formerly Project Oxford), although it is currently a lot more limited.


To use CoreML, you need to download the latest beta of Xcode 9, which includes the iOS 11 SDK. So far it offers just two machine learning model types, for vision and for natural language processing.

To use CoreML you need to download the latest beta of Xcode 9, which includes iOS 11 SDK. So far it has just two machine learning model types for vision and natural language processing.