Kafka 2 Adds Support For Prefixed ACLs

Written by Kay Ewbank

Tuesday, 07 August 2018


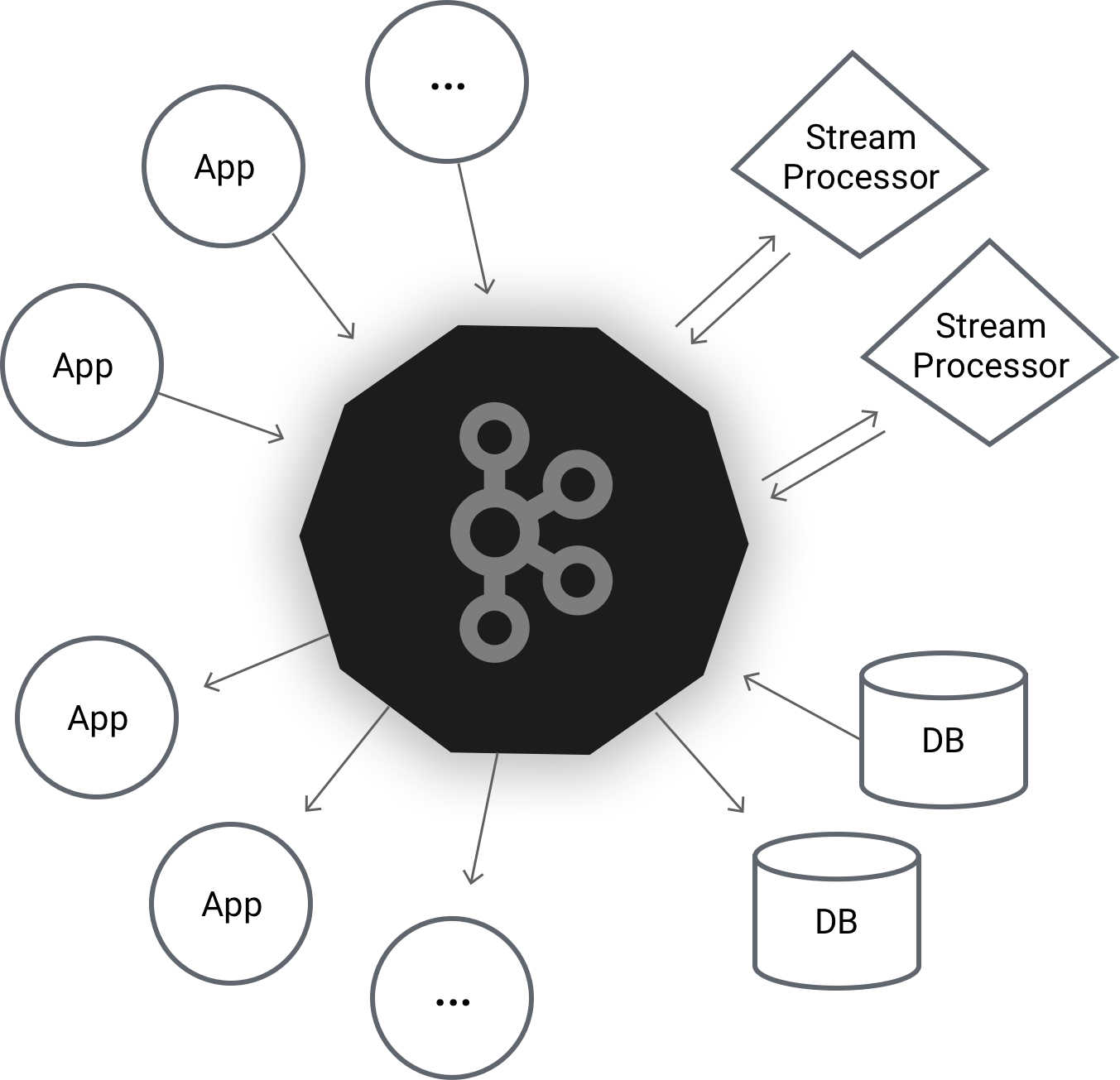

Apache Kafka 2.0 has been released, with new features that improve authentication, security, and replication. Apache Kafka is a distributed streaming platform that can be used to build real-time streaming data pipelines between systems or applications.

Kafka was originally developed at LinkedIn and later became an Apache project. It is a fast, scalable, durable, and fault-tolerant publish-subscribe messaging system that can be used in place of traditional message brokers.

The first improvement is support for prefixed ACLs (access control lists), designed to simplify access control management in large secure deployments. A single rule can now grant bulk access to all topics, consumer groups, or transactional IDs whose names start with a given prefix. Access control for topic creation has also been improved, so permission can be granted to create specific topics or any topic with a given prefix.

A new framework makes it possible to authenticate to Kafka brokers using OAuth2 bearer tokens (the SASL/OAUTHBEARER mechanism), and the authentication can be customized using callbacks for token retrieval and validation. Security has also been strengthened by enabling host name verification for SSL connections by default, which protects them against man-in-the-middle attacks.
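The way a single prefixed rule grants bulk access can be illustrated with a small, self-contained Python model. This is a toy sketch, not Kafka's authorizer: the `AclRule` class, the `is_allowed` function and the principal, topic and group names are all hypothetical, and the model covers only ALLOW rules (no DENY rules, hosts or super users). In Kafka 2.0 itself, prefixed rules are created with the `kafka-acls` tool's `--resource-pattern-type prefixed` option or with `PatternType.PREFIXED` in the Java AdminClient.

```python
from dataclasses import dataclass
from enum import Enum


class PatternType(Enum):
    # Toy stand-in for Kafka's resource pattern types (only two modeled here).
    LITERAL = "literal"
    PREFIXED = "prefixed"


@dataclass(frozen=True)
class AclRule:
    """A simplified ALLOW rule (hypothetical model, not Kafka's own classes)."""
    principal: str       # e.g. "User:alice"
    operation: str       # e.g. "Read", "Write", "Create"
    resource_type: str   # e.g. "Topic", "Group", "TransactionalId"
    name: str            # exact name, "*" wildcard, or a prefix
    pattern_type: PatternType = PatternType.LITERAL

    def matches(self, principal: str, operation: str,
                resource_type: str, resource_name: str) -> bool:
        if (principal, operation, resource_type) != (
                self.principal, self.operation, self.resource_type):
            return False
        if self.pattern_type is PatternType.PREFIXED:
            # One prefixed rule covers every resource whose name starts with the prefix.
            return resource_name.startswith(self.name)
        # Literal rule: the exact name, or the "*" wildcard that matches everything.
        return self.name == "*" or resource_name == self.name


def is_allowed(rules, principal, operation, resource_type, resource_name) -> bool:
    """Allow if any rule matches (this toy model has no DENY rules)."""
    return any(r.matches(principal, operation, resource_type, resource_name)
               for r in rules)


if __name__ == "__main__":
    rules = [
        # Bulk read access to every topic whose name starts with "payments-"
        AclRule("User:alice", "Read", "Topic", "payments-", PatternType.PREFIXED),
        # Permission to create only topics whose names start with "staging-"
        AclRule("User:etl", "Create", "Topic", "staging-", PatternType.PREFIXED),
        # A conventional literal rule for a single consumer group
        AclRule("User:alice", "Read", "Group", "billing-app"),
    ]
    print(is_allowed(rules, "User:alice", "Read", "Topic", "payments-eu"))   # True
    print(is_allowed(rules, "User:alice", "Read", "Topic", "orders"))        # False
    print(is_allowed(rules, "User:etl", "Create", "Topic", "staging-2018"))  # True
    print(is_allowed(rules, "User:etl", "Create", "Topic", "prod-orders"))   # False
```

With literal rules only, each `payments-` topic would need its own rule, or the `*` wildcard would have to grant access to every topic; the prefixed rule sits between those two extremes.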

You can also now update SSL truststores dynamically without restarting the broker, and you can configure security for broker listeners in ZooKeeper before starting the brokers, including SSL keystore and truststore passwords and the JAAS configuration for SASL. This means sensitive password configs can be stored in encrypted form in ZooKeeper rather than in cleartext in the broker properties file.

Replication is another area to have been addressed, with improvements to the replication protocol that avoid log divergence between a leader and its followers during fast leader failover. The developers have also improved broker resilience by reducing the memory footprint of message down-conversion, that is, converting messages to an older format for older clients. Down-conversion now processes messages in chunks, which reduces both memory usage and the length of time memory is held, helping to avoid OutOfMemory errors in brokers.

One change that makes quotas easier to work with is that, when quotas are enabled, Kafka clients are now notified of throttling before it is applied. Previously there was no notification, so it was difficult for clients to distinguish between network errors and long throttle times caused by exceeding a quota. The developers have also added a consumer configuration option, `default.api.timeout.ms`, so that blocking consumer calls time out instead of blocking indefinitely.

Kafka Connect, the framework for connecting Kafka with external systems, has also received attention. You can now control how errors in connectors, transformations and converters are handled, by enabling automatic retries and by choosing whether errors are tolerated or cause the connector to stop. More contextual information can be included in the logs to help diagnose problems, and problematic messages consumed by sink connectors can be sent to a dead letter queue rather than forcing the connector to stop.
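As an illustration of the Connect error-handling options, the following sketch builds, but does not send, a request to the Kafka Connect REST API that creates a sink connector with retries, error tolerance, error logging and a dead letter queue enabled. The `errors.*` property names are those introduced in Kafka 2.0; the connector name, topic names, output file and the worker URL `http://localhost:8083` are assumptions for illustration, and `FileStreamSinkConnector` is simply the example sink connector that ships with Kafka.

```python
import json
import urllib.request

# Assumed address of a Connect worker's REST API (8083 is the default port).
CONNECT_URL = "http://localhost:8083/connectors"


def sink_config_with_error_handling(name: str, topic: str, dlq_topic: str) -> dict:
    """Build a REST payload for a sink connector that retries, tolerates bad
    records, logs them, and routes them to a dead letter queue topic."""
    return {
        "name": name,
        "config": {
            "connector.class": "org.apache.kafka.connect.file.FileStreamSinkConnector",
            "tasks.max": "1",
            "topics": topic,
            "file": "/tmp/sink-output.txt",  # hypothetical output file
            # Retry failed operations for up to 60 s, waiting at most 5 s between attempts.
            "errors.retry.timeout": "60000",
            "errors.retry.delay.max.ms": "5000",
            # "all" skips problem records; the default "none" fails the task on the first error.
            "errors.tolerance": "all",
            # Log failures with context, including the offending record.
            "errors.log.enable": "true",
            "errors.log.include.messages": "true",
            # Sink connectors only: records that fail in the converter or
            # transformation stages are written to this topic instead.
            "errors.deadletterqueue.topic.name": dlq_topic,
        },
    }


def build_request(payload: dict) -> urllib.request.Request:
    """Prepare (but do not send) a POST to the Connect REST API."""
    return urllib.request.Request(
        CONNECT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    payload = sink_config_with_error_handling("orders-file-sink", "orders", "orders-dlq")
    req = build_request(payload)
    print(req.get_method(), req.full_url)
    print(json.dumps(payload, indent=2))
    # Against a running Connect worker, the connector would be created with:
    # urllib.request.urlopen(req)
```

Keeping the request construction separate from sending it makes the configuration easy to inspect before anything touches a live cluster.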

Last Updated (Tuesday, 07 August 2018)