| Spark Gets NLP Library |

| Written by Kay Ewbank | |||

| Friday, 27 October 2017 | |||

|

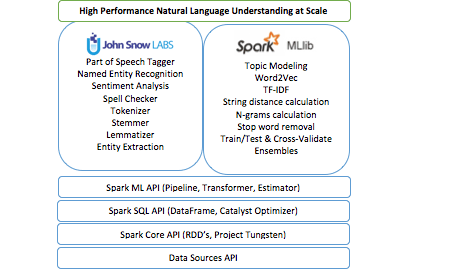

There's a new Natural Language Processing library for Apache Spark, that extends the Machine Learning logic of Spark to provide NLP annotations for machine learning pipelines that scale in a distributed environment. Apache Spark is a general-purpose cluster computing framework, with native support for distributed SQL, streaming, graph processing, and machine learning. Spark's logic consists of two main components: Estimators and Transformers. These are combined using Pipelines to put together multiple estimators and transformers within a single workflow, allowing multiple chained transformations along a Machine Learning task.

The John Snow Labs NLP Library comes from a big data analytics firm well known in health care organisation. Open sourced under the Apache 2.0 license it is written in Scala with no dependencies on other NLP or ML libraries. The framework makes use of the concepts of annotators, and comes out of the box with:

Because the library is tightly integrated with Spark ML, you can also perform tasks including word embeddings, topic modeling, stop word removal, and a variety of feature engineering functions including tf-idf, n-grams, and similarity metrics. These aspects come courtesy of Spark's own ML. The library relies on the use of Annotaters, and these come in two forms: Annotator Approaches are used to represent a Spark ML Estimator. These need a training stage in order to work. You use a function called fit(data) which trains a model based on some data. The Annotator Approach is used to produce the second type of annotator which is an annotator model or transformer. An Annotator Model is a Spark model or transformer. This means it, has a transform(data) function which takes a dataset, and adds to it a column with the result of the annotation. All transformers are additive, meaning they append to current data, never replace or delete previous information. Both forms of annotators can be included in a Pipeline and will automatically go through all stages in the provided order and transform the data accordingly. A Pipeline is turned into a PipelineModel after the fit() stage. The John Snow NLP Library is available on Github.

More InformationRelated ArticlesAzure Machine Learning Enhancements Azure Machine Learning Service Goes Live Machine Learning Goes Azure - Azure ML Announced Apache Spark With Structured Streaming Spark BI Gets Fine Grain Security Apache Spark Technical Preview

To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on Twitter, Facebook or Linkedin.

Comments

or email your comment to: comments@i-programmer.info |

|||

| Last Updated ( Wednesday, 19 September 2018 ) |