Kinect SDK 1.5 - Now With Face Tracking

Written by Harry Fairhead

Tuesday, 22 May 2012

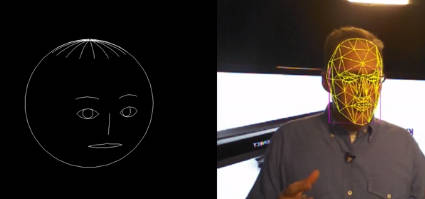

The new Kinect 1.5 SDK adds a lot of new features to the existing Kinect for Windows without any need for changes to the hardware. It now comes with face tracking, seated skeleton tracking and a lot of new developer tools. There are so many new features in the 1.5 SDK that it is difficult to know which is the most important, but the face tracking SDK must come high on anyone's list. This makes use of the depth map to create a 3D wireframe model of the face. From this, facial features can be extracted - eye position, mouth position and so on. This isn't built into the Kinect like the skeletal tracking, but is an application that uses the data streams.

Notice that this isn't face recognition, as some sources are reporting. The sort of thing it can be used for is animating an avatar or robot face. It could also be extended to recognize expressions, and even identity, but this would need some additional work.

Seated tracking allows you to get a skeleton for a seated user. It works in near mode and provides data on 10 joints, so the idea is that it can be used for close arm and hand detection, which is ideal for gesture control. General skeleton tracking now provides joint orientation as well as position, and it too works in near mode. This makes it much easier to map an avatar or robot to your current position.

Putting together the face tracking SDK with the new joint orientation means that you could now use the Kinect to drive an avatar or telepresence device much more accurately than before. Not only could you ensure that the body followed the posture and movements of the subject, but the face could be made expressive.

If you have worked with the Kinect you will know that testing is difficult because you have to stand, or get someone else to stand, in front of the Kinect to get some test data. Now you can record some test data and play it back using Kinect Studio. The ability to replay the same data should make it possible to fine-tune an application and to find out what went wrong.

As well as new features, various things have been sped up, leaving more time for processing the data. The depth and video streams are also kept in sync and the video stream's quality has been improved.

Although speech recognition isn't the most exciting part of the Kinect, it is still the feature that might lead to real breakthroughs - and it is now available in a range of different languages including Spanish, French, Japanese and more.

Most of the new features have been introduced without breaking the version 1 API - which wasn't the case when moving from the beta to the first release.

The number of demonstration programs, complete with source code, has also increased, and this should make it easier to build applications that make use of basic tasks such as background removal and avatar tracking. Still missing from the samples is any sign of Kinect Fusion, the advanced real time 3D modeling system that allows you to "paint" a 3D model by moving the Kinect around. If you want something like this you still need to move over to the open source OpenCV or similar.
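The record-and-replay idea behind Kinect Studio can be illustrated with a minimal sketch. This is not the Kinect SDK or Kinect Studio API: the `Frame` layout, the joint names and the `record`, `replay` and `hand_above_head` functions are all hypothetical, chosen only to show why replaying identical captured data makes a gesture test repeatable.

```python
import io
import json
from dataclasses import dataclass, asdict
from typing import Iterable, Iterator, TextIO


@dataclass
class Frame:
    """One captured sample (hypothetical layout, not the SDK's frame type)."""
    timestamp_ms: int
    joints: dict  # joint name -> [x, y, z] in metres


def record(frames: Iterable[Frame], sink: TextIO) -> int:
    """Write each frame as one JSON line and return how many were written."""
    count = 0
    for frame in frames:
        sink.write(json.dumps(asdict(frame)) + "\n")
        count += 1
    return count


def replay(source: TextIO) -> Iterator[Frame]:
    """Yield the recorded frames back in their original order."""
    for line in source:
        if line.strip():
            yield Frame(**json.loads(line))


def hand_above_head(frame: Frame) -> bool:
    """Toy gesture test: is the right hand higher (larger y) than the head?"""
    return frame.joints["hand_right"][1] > frame.joints["head"][1]


# Two made-up frames stand in for data captured from a live sensor.
captured = [
    Frame(0, {"head": [0.0, 0.6, 2.0], "hand_right": [0.3, 0.1, 1.9]}),
    Frame(33, {"head": [0.0, 0.6, 2.0], "hand_right": [0.3, 0.8, 1.9]}),
]

buffer = io.StringIO()   # an in-memory stand-in for a recording file
record(captured, buffer)
buffer.seek(0)           # rewind so the recording can be played back

results = [hand_above_head(frame) for frame in replay(buffer)]
print(results)
```

Because the replayed frames are identical on every run, a change in `results` can only come from a change in the gesture code, which is what makes recorded data useful for fine tuning and debugging.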

Given the extended range of demo programs you can almost have fun with the Kinect for Windows without resorting to programming and, by taking large chunks of "boilerplate" code from the examples, you can start to try things out more quickly - but the truly innovative applications are still going to need an understanding not only of the SDK, but also of 3D graphics, AI and more.

Related Articles

Kinect 3D Full Body "Hologram"

The Perfect Fit - thanks to Kinect-technology

Shake n Sense Makes Kinects Work Together!


Last Updated ( Tuesday, 22 May 2012 )