| Turn Any Webcam Into A Microsoft Kinect |

| Written by Harry Fairhead |

| Saturday, 03 February 2018 |


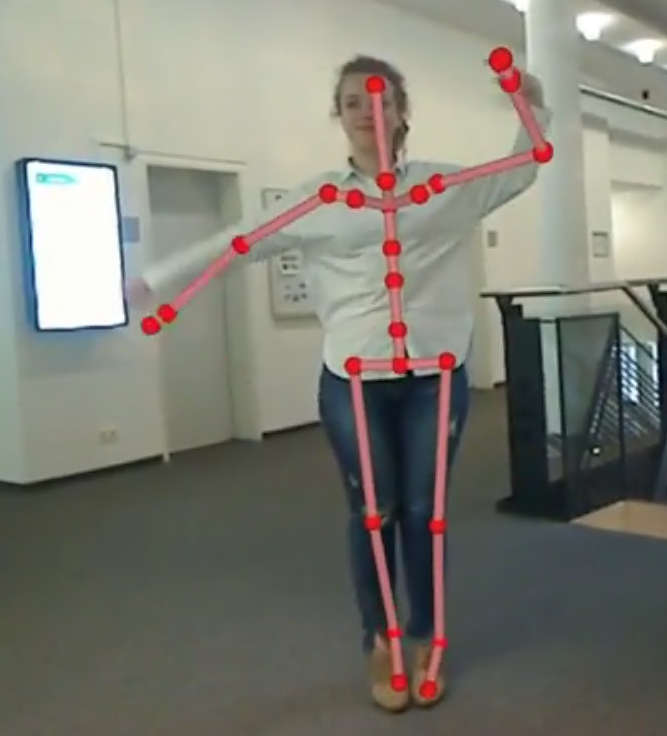

For some jobs you might not even need a depth camera like the Kinect. Take a webcam and TensorFlow and you can use AI to create skeleton tracking - meet VNect.

The loss of the Microsoft Kinect is a blow to anyone wanting to experiment with 3D vision, but AI is moving on very fast. There are a number of body pose tracking solutions that use only video input, and it is now clearly easy enough to create your own using a TensorFlow port of a recently announced neural network, VNect.

When you consider the amount of work that the original Kinect skeletonization algorithm took to create, this is a remarkable sign of the times. The original Kinect algorithm wasn't based on a neural network but on another machine learning technique called a forest of trees - basically multiple decision trees. Of course, the original algorithm also worked with much cleaner data in the form of the RGB plus depth signal from the camera. It seems that a neural network can do the job much better, using only RGB.

VNect was reported at last year's (2017) SIGGRAPH, and apologies for missing it, by a team from the Max Planck Institute, Germany; Saarland University, Germany; and Universidad Rey Juan Carlos, Spain. You can see this system in use and find out about its design in the following video:

The team has recently improved VNect and produced an additional real-time video:

The neural network model, complete with weights, is available for download and for building into your own body tracking system. If you don't want to tackle a reimplementation of the network, you could make use of Tim Ho's unofficial port to TensorFlow, which is available on GitHub. This makes it possible to turn more or less any low-cost webcam into the equivalent of the Kinect in skeleton tracking mode. And to prove it is possible, two programmers, Or Fleisher and Dror Ayalon, have put VNect together with Unity to have some fun:

If you want a complete solution in C++, then take a look at CMU's OpenPose. This is a real-time, multi-person system that detects body, hand and facial keypoints. It doesn't do quite the same job as VNect, in that it gives only the x,y co-ordinates of the keypoints in the image and not x,y,z co-ordinates in real space. Both are useful alternatives to a depth camera, but a depth camera provides full x,y,z co-ordinates for every point in the scene, and sometimes this is what you want and nothing else will do.
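To see why image-only x,y keypoints fall short of x,y,z co-ordinates, it helps to look at how a depth reading turns a pixel into a point in space. The following is a minimal sketch using the standard pinhole camera model. It is not taken from OpenPose, VNect or the Kinect SDK, and the `Intrinsics` values, the `backproject` helper and the wrist pixel are all hypothetical, chosen only for illustration.

```python
from typing import NamedTuple, Tuple


class Intrinsics(NamedTuple):
    """Pinhole camera parameters, in pixels (hypothetical values below)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def backproject(u: float, v: float, depth_m: float,
                k: Intrinsics) -> Tuple[float, float, float]:
    """Map pixel (u, v) plus a depth in metres to camera-space (x, y, z)."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


# Assumed intrinsics for a 640x480 webcam - not measured from a real device.
K = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)

# A made-up 2D wrist keypoint, as an image-only tracker might report it.
wrist_px = (420.0, 240.0)

# The same pixel corresponds to different 3D points at different depths.
near = backproject(wrist_px[0], wrist_px[1], 1.5, K)
far = backproject(wrist_px[0], wrist_px[1], 3.0, K)
print(near)  # (0.25, 0.0, 1.5)
print(far)   # (0.5, 0.0, 3.0)
```

The two results share a pixel but sit a quarter of a metre apart sideways, which is the ambiguity that a depth camera resolves by measurement and that a 3D pose network has to resolve by inference.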

More Information

VNect: Real-time 3D Human Pose Estimation with a Single RGB Camera
Dushyant Mehta, Srinath Sridhar, Oleksandr Sotnychenko, Helge Rhodin, Mohammad Shafiei, Hans-Peter Seidel, Weipeng Xu, Dan Casas and Christian Theobalt

Related Articles

Intel Ships New RealSense Cameras Is This The Kinect Replacement We Have Been Looking For?
Microsoft Kills The Kinect - Another Nail
Practical Windows Kinect in C#



| Last Updated ( Saturday, 03 February 2018 ) |