Plenoptic Sensors For Computational Photography

Written by David Conrad

Saturday, 29 December 2012

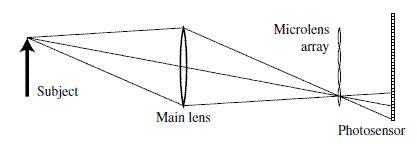

Light field photography based on a plenoptic lens was brought to public attention by Lytro, whose first camera allowed users to focus after they took the shot. The big problem is that Lytro has been slow to improve and slow to capitalize on the fact that light field photography is essentially a matter of software. All this might be about to change.

Light field photography is a sort of digital approach to holography. It aims to capture the complete configuration of the light in a scene so that any part can be reconstructed. The practical form that is actually used is based on forming lots of images, each from a slightly different viewpoint, using a microlens array behind the main lens. If you just look at the recorded image, all you see are lots of small versions of the total image.

The trick is that you can use some complicated maths, Fourier transforms and convolutions to be precise, to combine the information in the small images into a single big image, and depending on exactly how you do this you can change the focal plane. What this means is that you can not only focus after the photo has been taken, you can even change the focus interactively.
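To make the refocusing idea concrete, here is a minimal sketch in plain Python. It does not use the Fourier-domain approach mentioned above; instead it uses the simpler spatial-domain "shift-and-add" technique, in which each viewpoint image is shifted in proportion to its viewpoint offset and the results are averaged. It assumes the raw capture has already been separated into one small image per viewpoint. The names `shift_image`, `refocus`, `synthetic_views` and the `slope` parameter are hypothetical, chosen for illustration, and have nothing to do with Lytro's or Toshiba's software.

```python
def shift_image(img, dx, dy):
    """Return a copy of img shifted by (dx, dy) whole pixels, zero-padded."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = x - dx, y - dy
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = img[sy][sx]
    return out


def refocus(views, slope):
    """Shift-and-add refocusing.

    views: dict mapping a viewpoint offset (u, v) to a 2D image (list of rows).
    slope: pixels of shift per unit of viewpoint offset; changing it
           moves the synthetic focal plane.
    """
    first = next(iter(views.values()))
    h, w = len(first), len(first[0])
    acc = [[0.0] * w for _ in range(h)]
    for (u, v), img in views.items():
        shifted = shift_image(img, round(slope * u), round(slope * v))
        for y in range(h):
            for x in range(w):
                acc[y][x] += shifted[y][x]
    n = len(views)
    return [[value / n for value in row] for row in acc]


def synthetic_views(size=9, disparity=1, centre=(4, 4)):
    """Hypothetical test scene: a single bright point that moves by
    `disparity` pixels for each unit step in viewpoint."""
    views = {}
    for u in (-1, 0, 1):
        for v in (-1, 0, 1):
            img = [[0.0] * size for _ in range(size)]
            img[centre[1] + disparity * v][centre[0] + disparity * u] = 1.0
            views[(u, v)] = img
    return views


views = synthetic_views()
sharp = refocus(views, slope=-1)   # shifts cancel the disparity: point in focus
blurred = refocus(views, slope=0)  # no shift: point smeared over 3x3 pixels
print(sharp[4][4], blurred[4][4])
```

With `slope=-1` the nine copies of the point line up on one pixel, so it comes out sharp; with `slope=0` the same energy is spread over nine pixels. Sweeping `slope` is what an interactive "focus after the shot" control would do in this simplified model.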

Of course, all of this requires a lot of processing power, and light field photography is really only possible because we have so much processing power in the form of a GPU. There are probably lots of other tricks that can be performed with the raw data in a light field photo, but so far none of these has proved particularly impressive. Lytro, for example, still hasn't made an API available or made any attempt to encourage the wider programming community to join in and be inventive. As a result, the whole business of using a photo taken with a Lytro isn't quite as much fun as it might be - it still uses a Flash extension, for example.

Clearly, what is needed is something to make the whole situation much more open. The good news is that several companies, notably Toshiba, are working on integrated plenoptic sensors. According to The Asahi Shimbun: "The cube-shaped module is about 1 centimeter per side and contains a dense array of 500,000 lenses, each 0.03 millimeter in diameter, in front of an image sensor measuring 5 mm by 7 mm."
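As a rough sanity check on the quoted figures, the sketch below works out how many lenses would fit over the sensor. It assumes, purely for illustration, that the lenses sit on a simple square grid whose pitch equals the lens diameter and that the array covers only the 5 mm by 7 mm sensor area; neither assumption comes from Toshiba or the report. The function names are hypothetical.

```python
import math

SENSOR_W_MM, SENSOR_H_MM = 5.0, 7.0   # sensor size from the quote
LENS_DIAMETER_MM = 0.03               # lens diameter from the quote
QUOTED_LENS_COUNT = 500_000           # lens count from the quote


def lenses_on_square_grid(width_mm, height_mm, pitch_mm):
    """Whole lenses that fit on a square grid (assumed layout)."""
    return math.floor(width_mm / pitch_mm) * math.floor(height_mm / pitch_mm)


def implied_pitch_mm(width_mm, height_mm, lens_count):
    """Square-grid pitch needed to fit lens_count lenses in the given area."""
    return math.sqrt(width_mm * height_mm / lens_count)


fit = lenses_on_square_grid(SENSOR_W_MM, SENSOR_H_MM, LENS_DIAMETER_MM)
pitch = implied_pitch_mm(SENSOR_W_MM, SENSOR_H_MM, QUOTED_LENS_COUNT)
print(f"lenses that fit at 0.03 mm pitch: {fit}")
print(f"pitch implied by 500,000 lenses: {pitch:.4f} mm")
```

Under these assumptions only about 39,000 lenses fit, and 500,000 lenses would need a pitch of roughly 0.008 mm. So the three quoted numbers are hard to reconcile with a simple single-layer tiling; the real layout may differ, or one of the figures may have been rounded or altered in reporting.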

Takashi Kamiguri

The sensor is clearly small enough to be used in mobile devices, and the suggestion is that it is also capable of shooting video. Refocusable video sounds fun, but consider the problems of doing the computation and providing the user interface. It might also be possible to create 3D images using it, but so far the details are vague.

Toshiba says it will have production versions by the end of fiscal 2013, and it is looking for phone and tablet manufacturers to make use of the sensor. This is a step in the right direction for medium-quality computational photography. What creative code can you come up with that pushes the sensor into new applications?


Last Updated ( Sunday, 30 December 2012 )