Guetzli Makes JPEGs Smaller

Written by David Conrad

Monday, 20 March 2017

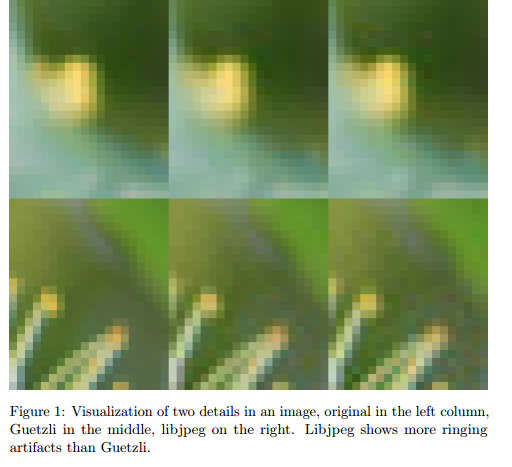

There is a lot of flexibility in how you can configure a JPEG file to best represent an image. Now Google's Guetzli searches for the best settings and produces files that are up to 45% smaller than those from other encoders working at the same perceptual quality.

It doesn't take long to find examples of huge JPEG files that add nothing to the quality of a typical web page. You can even find big images displayed at a tiny size on the page but left at 95% JPEG quality. Simply making it easier, or perhaps obligatory, to resize and compress JPEG images to more reasonable levels would do a lot to speed up parts of the web.

What the Google team has done is rather more drastic than tweaking the compression setting in GIMP or Photoshop. The first problem they had to solve was how to gauge the quality of an image. To do this they invented Butteraugli, a model of human vision:

"Butteraugli is a project that estimates the psychovisual similarity of two images. It gives a score for the images that is reliable in the domain of barely noticeable differences. Butteraugli not only gives a scalar score, but also computes a spatial map of the level of differences. One of the main motivations for this project is the statistical differences in location and density of different color receptors, particularly the low density of blue cones in the fovea. Another motivation comes from more accurate modeling of ganglion cells, particularly the frequency space inhibition."

Once they had a way of judging image quality, the next step was to implement an optimization procedure that adjusts the JPEG compression parameters to produce the smallest file at a given quality. The program that does the job is called Guetzli [guɛtsli], Swiss German for cookie. Two kinds of adjustment can be made to the compression: a global setting of the quantization tables, and a local optimization that replaces some of the frequency values with zero.
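As a rough illustration of what a "global setting of the quantization tables" means, here is a minimal Python sketch of how a single quality number is commonly turned into a quantization table and then applied to a block of DCT coefficients. It assumes the scaling rule used by the IJG libjpeg library and the example luminance table from Annex K of the JPEG standard (ITU-T T.81); Guetzli's own tables and search are not shown, and the function names are hypothetical.

```python
# Sketch: how one "quality" number scales a JPEG quantization table.
# ASSUMPTION: IJG libjpeg's scaling rule applied to the example luminance
# table from Annex K of the JPEG standard. Not Guetzli's own code.

BASE_LUMA = [
    16, 11, 10, 16, 24, 40, 51, 61,
    12, 12, 14, 19, 26, 58, 60, 55,
    14, 13, 16, 24, 40, 57, 69, 56,
    14, 17, 22, 29, 51, 87, 80, 62,
    18, 22, 37, 56, 68, 109, 103, 77,
    24, 35, 55, 64, 81, 104, 113, 92,
    49, 64, 78, 87, 103, 121, 120, 101,
    72, 92, 95, 98, 112, 100, 103, 99,
]


def scaled_table(quality):
    """Scale the base table the way libjpeg's quality setting does."""
    quality = max(1, min(100, quality))
    scale = 5000 // quality if quality < 50 else 200 - 2 * quality
    # Each entry is rounded and clamped to the baseline range 1..255.
    return [max(1, min(255, (q * scale + 50) // 100)) for q in BASE_LUMA]


def quantize(coeffs, table):
    """Divide each DCT coefficient by its step size and round to an integer."""
    return [round(c / q) for c, q in zip(coeffs, table)]
```

At quality 50 the base table is used unchanged, while at quality 100 every step size is clamped to 1, so the coefficients are only rounded to integers. Lower qualities give larger steps and therefore more coefficients that round to zero.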
The first adjustment corresponds to the usual quality setting that you find on any encoder. Setting some of the frequency coefficients of an 8x8 block to zero lengthens the runs of zeros that run-length encoding can compress, reducing the size of the file at the cost of lost detail in the part of the image that the block encodes. The optimization proceeds by adjusting the global quality and then optimizing the individual coefficients.
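To see why zeroing coefficients saves space, here is a simplified Python sketch of how baseline JPEG run-length codes the 63 AC coefficients of a block: they are read in zigzag order and emitted as (zero-run, value) pairs, with (15, 0) standing for a run of 16 zeros (ZRL) and (0, 0) marking end-of-block (EOB). It leaves out the Huffman coding and bit-size categories that follow in a real encoder, and the names are hypothetical.

```python
# Zigzag order for an 8x8 block: walk the anti-diagonals r + c = 0, 1, ..., 14,
# alternating direction so the scan snakes from low to high frequencies.
ZIGZAG = sorted(
    ((r, c) for r in range(8) for c in range(8)),
    key=lambda rc: (rc[0] + rc[1], rc[0] if (rc[0] + rc[1]) % 2 else rc[1]),
)

ZRL = (15, 0)  # a run of 16 zeros
EOB = (0, 0)   # all remaining coefficients are zero


def rle_ac(block):
    """Run-length code the AC coefficients of 64 quantized values given in
    raster order (index 0 is the DC term, which JPEG codes separately)."""
    symbols = []
    run = 0
    for r, c in ZIGZAG[1:]:
        value = block[r * 8 + c]
        if value == 0:
            run += 1
            continue
        while run > 15:  # the run field holds at most 15, so emit ZRLs first
            symbols.append(ZRL)
            run -= 16
        symbols.append((run, value))
        run = 0
    if run > 0:  # trailing zeros collapse into a single EOB
        symbols.append(EOB)
    return symbols


block = [0] * 64
block[0] = 50   # DC coefficient, coded separately
block[1] = 3    # zigzag position 1
block[8] = -2   # zigzag position 2
block[9] = 1    # zigzag position 4
print(rle_ac(block))  # [(0, 3), (0, -2), (1, 1), (0, 0)]
block[9] = 0    # drop the small high-frequency detail
print(rle_ac(block))  # [(0, 3), (0, -2), (0, 0)]
```

The lone coefficient of value 1 costs a symbol of its own; once it is zeroed, the end-of-block marker absorbs it and the block needs one symbol fewer.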

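Putting the two adjustments together, here is a minimal Python sketch of a two-stage search in the spirit described above: first pick the coarsest global quality whose worst block stays within a target distance, then greedily zero individual AC coefficients while that still holds. It is not Guetzli's actual algorithm. Butteraugli is replaced by a crude stand-in that, like Butteraugli, yields a per-block map plus a single score (here simply the worst block's RMS error), and the input is assumed to be already-transformed 8x8 DCT coefficient blocks in raster order. All names are hypothetical.

```python
import math

# ASSUMPTION: libjpeg-style quality scaling of the JPEG Annex K luminance table.
BASE_LUMA = [
    16, 11, 10, 16, 24, 40, 51, 61,
    12, 12, 14, 19, 26, 58, 60, 55,
    14, 13, 16, 24, 40, 57, 69, 56,
    14, 17, 22, 29, 51, 87, 80, 62,
    18, 22, 37, 56, 68, 109, 103, 77,
    24, 35, 55, 64, 81, 104, 113, 92,
    49, 64, 78, 87, 103, 121, 120, 101,
    72, 92, 95, 98, 112, 100, 103, 99,
]


def scaled_table(quality):
    scale = 5000 // quality if quality < 50 else 200 - 2 * quality
    return [max(1, min(255, (q * scale + 50) // 100)) for q in BASE_LUMA]


def block_error(coeffs, levels, table):
    """RMS reconstruction error of one block. The JPEG DCT is orthonormal,
    so this equals the RMS pixel error of the decoded block."""
    sq = sum((c - lv * q) ** 2 for c, lv, q in zip(coeffs, levels, table))
    return math.sqrt(sq / 64)


def distance_map(blocks, quantized, table):
    """Crude stand-in for Butteraugli's spatial map: one value per block."""
    return [block_error(b, lv, table) for b, lv in zip(blocks, quantized)]


def encode(blocks, target):
    """Return (quality, quantized blocks). The target is met whenever
    quality 100 can meet it."""
    # Stage 1: try global qualities from coarsest to finest and stop at the
    # first one whose worst block is within target. If none is, the loop
    # finishes at quality 100, the finest setting available.
    for quality in range(1, 101):
        table = scaled_table(quality)
        quantized = [[round(c / q) for c, q in zip(b, table)] for b in blocks]
        if max(distance_map(blocks, quantized, table)) <= target:
            break
    # Stage 2: greedily try zeroing each nonzero AC coefficient (index 0 is DC),
    # keeping the zero only if the block still stays within target.
    for coeffs, levels in zip(blocks, quantized):
        for i in range(63, 0, -1):  # arbitrary visiting order for the sketch
            if levels[i] == 0:
                continue
            saved, levels[i] = levels[i], 0
            if block_error(coeffs, levels, table) > target:
                levels[i] = saved  # this zero would be too visible; undo it
    return quality, quantized


def nonzero_count(quantized):
    """Hypothetical proxy for file size: fewer nonzero levels, fewer symbols."""
    return sum(1 for levels in quantized for v in levels if v != 0)
```

Stage 1 plays the role of the familiar quality slider, while stage 2 makes the local, per-block decisions; because a zero is kept only when the block stays within the target, stage 2 can never push the score past what stage 1 achieved.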
The resulting images are 29-45% smaller than those from other encoders at the same quality. The big problem is that the optimization needs a lot of memory and is very slow, so it is only practical for compressing static content. However, once you have created an optimized JPEG image it can be decoded by any standard JPEG decoder, and no changes to the standard are needed. Both projects are open source and available on GitHub.

More Information

Guetzli: Perceptually Guided JPEG Encoder

Users prefer Guetzli JPEG over same-sized libjpeg

https://github.com/google/butteraugli

https://github.com/google/guetzli


Last Updated (Monday, 20 March 2017)