A Faster Fourier Transform

Written by Mike James

Saturday, 21 January 2012

A research group at MIT has come up with an improved algorithm that could make it possible to do more with audio and image data using less powerful hardware. The Fourier transform is fundamental to signal processing algorithms, and in practical terms it is at the heart of image, sound and video compression and most filtering and reconstruction methods. Put simply, it is important. However, there was a day when computing the Fourier transform was a time-consuming problem - so much so that the naive algorithm made it next to impossible to use the technique on real data.

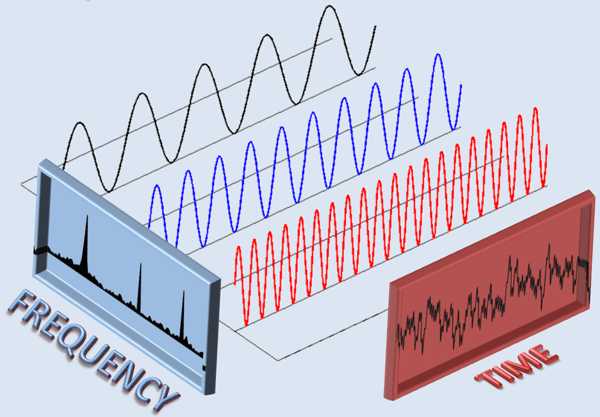

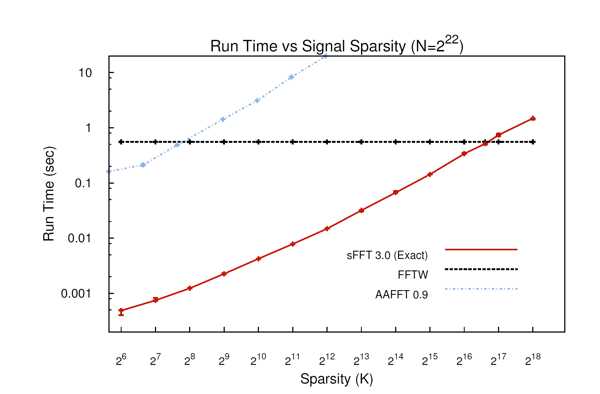

In the digital domain, a Fourier transform is a matrix multiplication, a rotation in fact, that takes you from the time domain to the frequency domain. That is, you give it the amplitude of a signal at various time points and it returns a vector of frequency amplitudes and phases at various frequency points. As this is a matrix multiplication, the direct algorithm takes n² operations for an input vector of size n, which means you can't work with large data sets.

The invention of the Fast Fourier Transform by J.W. Cooley and John Tukey in 1965, simply known as the Cooley-Tukey algorithm at the time, was a huge breakthrough. The crazy thing was that it had been invented before, arguably in 1805 by Gauss, but it had been ignored! The impact of the FFT was huge. It opened up all sorts of possibilities in signal processing, image processing and AI. It has been called the most important numerical algorithm, and this is not an exaggeration.

The basic idea of the FFT is simply to use the divide and conquer strategy. If the size of the data vector n is factorable, n = n₁n₂ say, then you can compute smaller Fourier transforms of size n₁ and of size n₂ and put the results together to give you the full result. If n is highly factorable, you can repeat the division down to sets of just two data points. This is the FFT, and you can show that it takes time proportional to n log n, which is a big improvement over n² for large n. You can also construct similar algorithms even if n isn't highly composite, and FFT algorithms exist even if n is prime.

Until recently the FFT was the best you could do in general, but it still isn't known if it is an optimal algorithm. It is obvious that a theoretical lower bound for complexity is n, as you have to compute n Fourier coefficients. However, if the frequency spectrum is sparse, i.e. there are only k non-zero frequencies, then you could hope for an algorithm that has complexity proportional to k.
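
To make the n² versus n log n contrast concrete, here is a minimal Python sketch of both approaches. It is an illustration only, not code from the article or from any FFT library: the function names `naive_dft` and `fft_radix2` are made up for this example, and the recursive version assumes the input length is a power of two, which is the simplest "highly factorable" case.

```python
import cmath


def naive_dft(x):
    """Direct DFT: every output is a sum over every input, so n*n terms."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * f * t / n) for t in range(n))
        for f in range(n)
    ]


def fft_radix2(x):
    """Recursive divide-and-conquer FFT (radix-2 Cooley-Tukey).

    Assumes len(x) is a power of two. Each level splits the input into
    even- and odd-indexed halves, transforms them separately, and then
    combines the two half-size results with n/2 "butterfly" operations.
    """
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    if n % 2:
        raise ValueError("length must be a power of two")
    even = fft_radix2(x[0::2])
    odd = fft_radix2(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle           # first half of the spectrum
        out[k + n // 2] = even[k] - twiddle  # second half reuses the same product
    return out


if __name__ == "__main__":
    signal = [1.0, 2.0, 0.5, -1.0, 0.0, 3.0, -2.5, 1.5]
    slow = naive_dft(signal)
    fast = fft_radix2(signal)
    # The two methods should agree up to floating-point rounding.
    print(max(abs(a - b) for a, b in zip(slow, fast)))
```

The recursion has log₂ n levels and does work proportional to n at each level, which is where the n log n figure comes from.
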
Now a research group at MIT has demonstrated an algorithm that takes k log n time. The algorithm takes a data vector with n elements and returns the k largest Fourier components - which is exactly what you want in the case of most compression and approximate signal manipulation algorithms.

If you are hoping for an elegant algorithm that exploits the symmetry of the situation, using a modified butterfly say, this is not the case. The idea and its implementation are based on using filters to divide the bandwidth up into bins so that each bin contains at most one of the significant frequencies. The filters (Gaussian and Dolph-Chebyshev) are constructed in a clever way so that they overlap in just the right way to make sure that you don't miss a significant component. After this, a divisive search is used to isolate the regions that contain the significant Fourier coefficients. The resulting transform is only an approximation to the true transform, but the accuracy can be quantified.

Many signals are sparse, and if they aren't, the compression algorithms that manipulate them assume that they are. In other words, a k-sparse approximate algorithm is good enough for many practical applications. What this means is that, using this sort of approach, you could implement faster MP3 or MP4 style compression or use a much less powerful processor. The important point about this new algorithm is that, even if k approaches n, the algorithm can be shown to be about as good as a conventional FFT.
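
The binning idea can be illustrated with a deliberately simplified toy. The sketch below is not the MIT algorithm: instead of Gaussian or Dolph-Chebyshev filters it uses plain subsampling in time, which folds (aliases) the n frequencies into a small number of bins, and it only works under strong assumptions, namely that the spectrum is exactly sparse, noise-free, and that no two non-zero frequencies land in the same bin. The names `sparse_dft_by_aliasing`, `num_bins` and `tol` are hypothetical and chosen for this example.

```python
import cmath


def dft(x):
    """Plain DFT, used here only on the short subsampled sequences."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * f * t / n) for t in range(n))
        for f in range(n)
    ]


def sparse_dft_by_aliasing(x, num_bins, tol=1e-9):
    """Toy sparse transform: returns {frequency: coefficient}.

    Assumes (for illustration only) an exactly sparse, noise-free spectrum
    with at most one non-zero frequency per bin, and num_bins dividing len(x).
    Only 2 * num_bins of the n samples are ever read.
    """
    n = len(x)
    if n % num_bins:
        raise ValueError("num_bins must divide the signal length")
    step = n // num_bins
    # Subsampling in time sums all frequencies f with f % num_bins == b into bin b.
    base = dft([x[j * step] for j in range(num_bins)])
    # The same samples shifted by one step in time: each frequency f picks up
    # an extra phase factor exp(2*pi*i*f/n), which reveals f.
    shifted = dft([x[(j * step + 1) % n] for j in range(num_bins)])
    found = {}
    for b in range(num_bins):
        if abs(base[b]) < tol:
            continue  # empty bin
        angle = cmath.phase(shifted[b] / base[b])
        freq = round(angle * n / (2 * cmath.pi)) % n
        found[freq] = base[b] * step  # undo the num_bins/n scaling from subsampling
    return found


if __name__ == "__main__":
    n = 64
    # Two non-zero frequencies (5 and 18) that fall into different bins mod 8.
    x = [
        3 * cmath.exp(2j * cmath.pi * 5 * t / n)
        + 1.5 * cmath.exp(2j * cmath.pi * 18 * t / n)
        for t in range(n)
    ]
    print(sparse_dft_by_aliasing(x, num_bins=8))
```

The point of the toy is only that the work depends on the number of bins rather than on n. Handling collisions, noise and merely approximately sparse spectra is exactly what the carefully designed filters and the search step described above are for.
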

In this graph FFTW is a conventional FFT, SFFT 3.0 is the new algorithm and AAFFT is the previous fastest sparse algorithm.

So this is a breakthrough. As a result of its invention, this algorithm will make it possible to process sound, image and other data faster than ever before. What more could you ask? Still, it would be nice if it were algorithmically as beautiful as the original FFT.

More Information:

The previously fastest algorithm is a good way to understand the ideas behind the latest method: Simple and Practical Algorithm for Sparse Fourier Transform

The algorithm described here is the subject of the paper: sFFT: Sparse Fast Fourier Transform Group Page


Last Updated ( Saturday, 21 January 2012 )