Drones Learn To Do Acrobatics

Written by Harry Fairhead

Sunday, 28 June 2020

While we are well past "peak drone" as far as news goes, there is still the occasional new development that can make you sit up and take notice. Drones that do acrobatics are nothing new, but drones that learn to do acrobatics are another matter.

This research is interesting on a number of levels. The first is the sheer fun and excitement of watching drones do acrobatics - who knew it was even possible to do a loop? At another level, the way that the drones learned this skill is also important. The controller had input from a camera and an accelerometer and was trained by being shown demonstrations provided by an expert. The expert had access to more data than the system being trained, including the full ground-truth state of the drone. This made it possible for the expert to be an optimal controller. The system, however, had no access to this privileged information and had to learn to achieve the same results based on sensory data alone. The neural network attempted to minimize the difference between the expert's flight and its own.

A set of physically realizable ideal flight paths was worked out and the expert attempted to fly them as accurately as possible. The system then attempted to learn from the expert in a simulation.
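To make the idea of learning from a privileged expert concrete, here is a minimal toy sketch in Python. It is not the researchers' code: the one-dimensional point mass, the PD gains, the noise level, and every function name are assumptions chosen for illustration. The expert sees the exact state, the student sees only noisy measurements, and the student's two weights are adjusted to minimize the squared difference between its command and the expert's.

```python
import random

def expert_action(pos, vel, ref, kp=4.0, kd=2.0):
    """Privileged expert (assumed PD controller) using the exact state."""
    return kp * (ref - pos) - kd * vel

def observe(pos, vel, ref, rng, noise=0.05):
    """Student input: noisy measurements of tracking error and velocity."""
    return [ref - pos + rng.gauss(0.0, noise), vel + rng.gauss(0.0, noise)]

def student_action(weights, obs):
    """Student policy: a linear map standing in for the neural network."""
    return sum(w * o for w, o in zip(weights, obs))

def train_student(steps=20000, lr=0.01, seed=0):
    """Imitation learning: reduce (student command - expert command)^2 by SGD."""
    rng = random.Random(seed)
    weights = [0.0, 0.0]
    for _ in range(steps):
        # Sample a simulated state; only the expert gets to see it exactly.
        pos, vel, ref = (rng.uniform(-1.0, 1.0) for _ in range(3))
        target = expert_action(pos, vel, ref)
        obs = observe(pos, vel, ref, rng)
        error = student_action(weights, obs) - target
        # Gradient of 0.5 * error^2 with respect to each weight is error * obs_i.
        weights = [w - lr * error * o for w, o in zip(weights, obs)]
    return weights

def rollout(weights, ref=1.0, steps=1000, dt=0.01, seed=1):
    """Fly the simulated point mass using the student's noisy inputs only."""
    rng = random.Random(seed)
    pos, vel = 0.0, 0.0
    for _ in range(steps):
        u = student_action(weights, observe(pos, vel, ref, rng))
        vel += u * dt   # simple Euler integration of a unit mass
        pos += vel * dt
    return pos

weights = train_student()
final_pos = rollout(weights, ref=1.0)
print("learned weights:", weights)
print("final position for reference 1.0:", final_pos)
```

In this toy setup the student's weights should end up close to the expert's gains, and the rollout should settle near the reference even though the student never sees the true state. The real system replaces the linear map with a neural network and the point mass with a simulated quadrotor.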

The learned policy connecting inputs and outputs was then transferred to a real drone and the results can be seen in the video.

The conclusion is:

"Our methodology has several favorable properties: it does not require a human expert to provide demonstrations, it cannot harm the physical system during training, and it can be used to learn maneuvers that are challenging even for the best human pilots. Our approach enables a physical quadrotor to fly maneuvers such as the Power Loop, the Barrel Roll, and the Matty Flip, during which it incurs accelerations of up to 3g."

and

"We have shown that designing appropriate abstractions of the input facilitates direct transfer of the policies from simulation to physical reality. The presented methodology is not limited to autonomous flight and can enable progress in other areas of robotics."

This looks like a faster and easier way to train any robot system to do what you want.

More Information

E. Kaufmann, A. Loquercio, R. Ranftl, M. Müller, V. Koltun, D. Scaramuzza

Robotics and Perception Group, University of Zurich and ETH Zurich

Intelligent Systems Lab


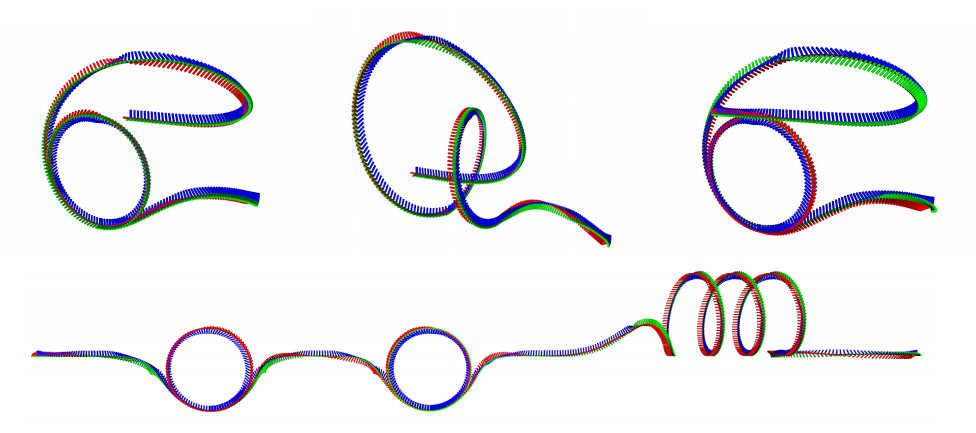

Reference trajectories for acrobatic maneuvers
