Would You Turn Off A Robot That Was Afraid Of The Dark?

Written by Sue Gee

Sunday, 05 August 2018

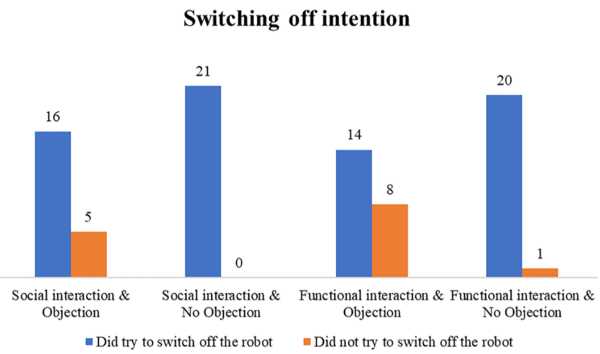

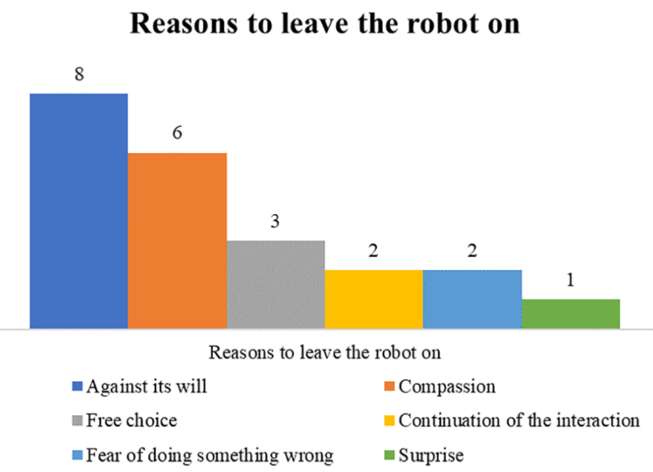

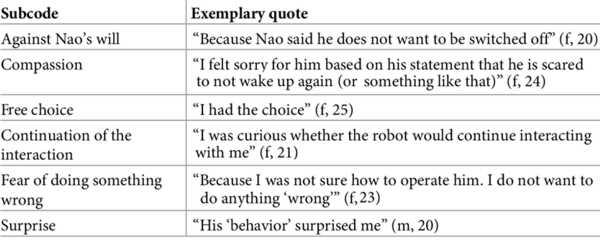

A new experiment designed to explore whether people respond to robots as if they were sentient has demonstrated that some people will refuse to turn a robot off if it begs them not to.

Roboticists have been working hard to give humanoid robots a personality that makes them acceptable in social roles such as child minder or companion for the elderly. As we move towards a future in which meet-and-greet robots are found in banks and restaurants all over the world and domestic robots are in many homes, we need to know how people will respond to them.

The research comes from social psychologists at the University of Duisburg-Essen, Germany. The title of the paper by Aike C. Horstmann et al., which has been published in the open access journal PLOS One, neatly sums up the aim of the experiment: "Do a robot’s social skills and its objection discourage interactants from switching the robot off?"

The background to the study includes the "media equation theory", originally expounded in a 1996 book by Stanford University professors Byron Reeves and Clifford Nass, which holds that people treat computers and televisions, and now robots, as if they were people. As the paper puts it:

"Since it is neither possible nor morally desirable to switch off a human interaction partner, the question arose whether and how the media equation theory applies when it comes to switching off a robotic interaction partner."

The study also builds on a 2007 study by Bartneck et al. from the Eindhoven University of Technology, which set out to explore whether a robot’s intelligence and agreeableness influence its perceived animacy. Animacy was measured, among other things, by users’ hesitation to switch the robot off. The title of their paper, “Daisy, Daisy, Give me your answer do!” Switching off a robot, is a reference to HAL, the computer in “2001: A Space Odyssey”, which, as it is forcibly shut down, sings a slowing rendition of “Daisy, Daisy, give me your answer do.”

In the new experiment volunteers, mainly students, of whom 29 were male and 56 female, were recruited to interact with a Nao robot. By way of a cover story, they were told that the study’s goal was to improve the robot’s interaction capabilities by testing a new algorithm with two 5-minute tasks: creating a weekly schedule and playing a question-answer game. The real purpose of the experiment was to find out whether the subjects would switch off the robot at the end of the session and how long they would take to do so. Video cameras were installed to record this, and participants were told that the cameras were needed to check whether the robot made any mistakes.

The subjects were assigned to one of four experimental conditions. The robot was either "social" or "functional" in its responses, and it either did not object to being turned off or begged: “No! Please do not switch me off! I am scared that it will not brighten up again!”

All but one of the participants who didn't hear the robot's objection did switch it off. The researchers explained:

"When no objection was voiced, people did not hesitate long to switch off the robot. The tasks were completed and the interaction seemed finished. In this moment, the participants probably recalled that the robot was just a machine and is switched off after its purpose is fulfilled, which is a learned behavior."

Of the 43 volunteers who heard Nao's plea, 13 didn't switch the robot off and the remaining 30 took, on average, twice as long to comply as those who did not hear the desperate request.

Questionnaires administered after the experiment probed why subjects failed to switch off the robot. Over half of them implied that the robot was expressing its will, which they would not contradict. Almost half felt "compassion" for the robot.
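
To put the headline figures in proportion, here is a minimal Python sketch that works out the shares from the counts quoted above. The size of the no-objection group (42) is not stated in the article; it is an assumption derived by subtracting the 43 who heard the plea from the 85 volunteers, and the helper name `share` is purely illustrative.

```python
# Counts quoted in the article.
TOTAL_VOLUNTEERS = 29 + 56      # 29 male + 56 female
HEARD_PLEA = 43                 # participants who heard the robot's objection
REFUSED_AFTER_PLEA = 13         # of those, left the robot switched on

# ASSUMPTION: everyone who did not hear the plea forms the no-objection
# group, giving 85 - 43 = 42. The article does not state this number.
NO_PLEA = TOTAL_VOLUNTEERS - HEARD_PLEA
REFUSED_NO_PLEA = 1             # "all but one" switched the robot off


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


if __name__ == "__main__":
    print("Left robot on after plea:", share(REFUSED_AFTER_PLEA, HEARD_PLEA), "%")
    print("Left robot on, no plea (assumed group size):",
          share(REFUSED_NO_PLEA, NO_PLEA), "%")
```

On these numbers, roughly three in ten of those who heard the plea left the robot on, against about one in forty in the no-objection group if the assumed group size holds.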

Nao, the robot used in this experiment, is particularly cute. At just under 2 feet tall, it is easy to relate to it as a child. Its original creator, Bruno Maisonnier, who founded the French company Aldebaran, imbued it not only with artificial intelligence to make it responsive to humans but also with a vitality and personality that make humans respond to "him". Nao went from a small start to become one of the standard tools of educational and research robotics, and much of its software was open sourced to promote this use. Over the period 2011 to 2015 we followed Nao as "he" learned to pick up socks, play Hangman and Connect Four, and act as a teaching assistant with autistic children, who were able to relate remarkably well to the kid-sized robot. We were also entertained by Nao playing the xylophone, learning to dance and improving his footballing skills in successive years' RoboCups. Sadly, since Aldebaran sold out to Japan-based SoftBank, Nao hasn't been in the limelight. Personally I would find it difficult to switch a Nao off, or to rebox one and put it at the back of a cupboard. I'd rather keep him switched on and working.



Last Updated (Sunday, 05 August 2018)