| Data Preparer Optimises Data Cleaning |

| Written by Kay Ewbank | |||

| Thursday, 27 February 2020 | |||

|

A "hands-off data wrangling" tool has been launched by The Data Value Factory, a spin-out company from the University of Manchester. The developers say The Data Preparer system is designed to minimise the time spent to prepare data for analysis. The Data Preparer system achieves the improvements in time spent to prepare data for analysis by changing the way the data prep is carried out. Users describe what they need and the system will use all available evidence to clean and integrate their data. The results can then be refined by providing feedback and reworking the list of priorities.

The Data Value Factory’s co-founder Prof. Norman Paton said:

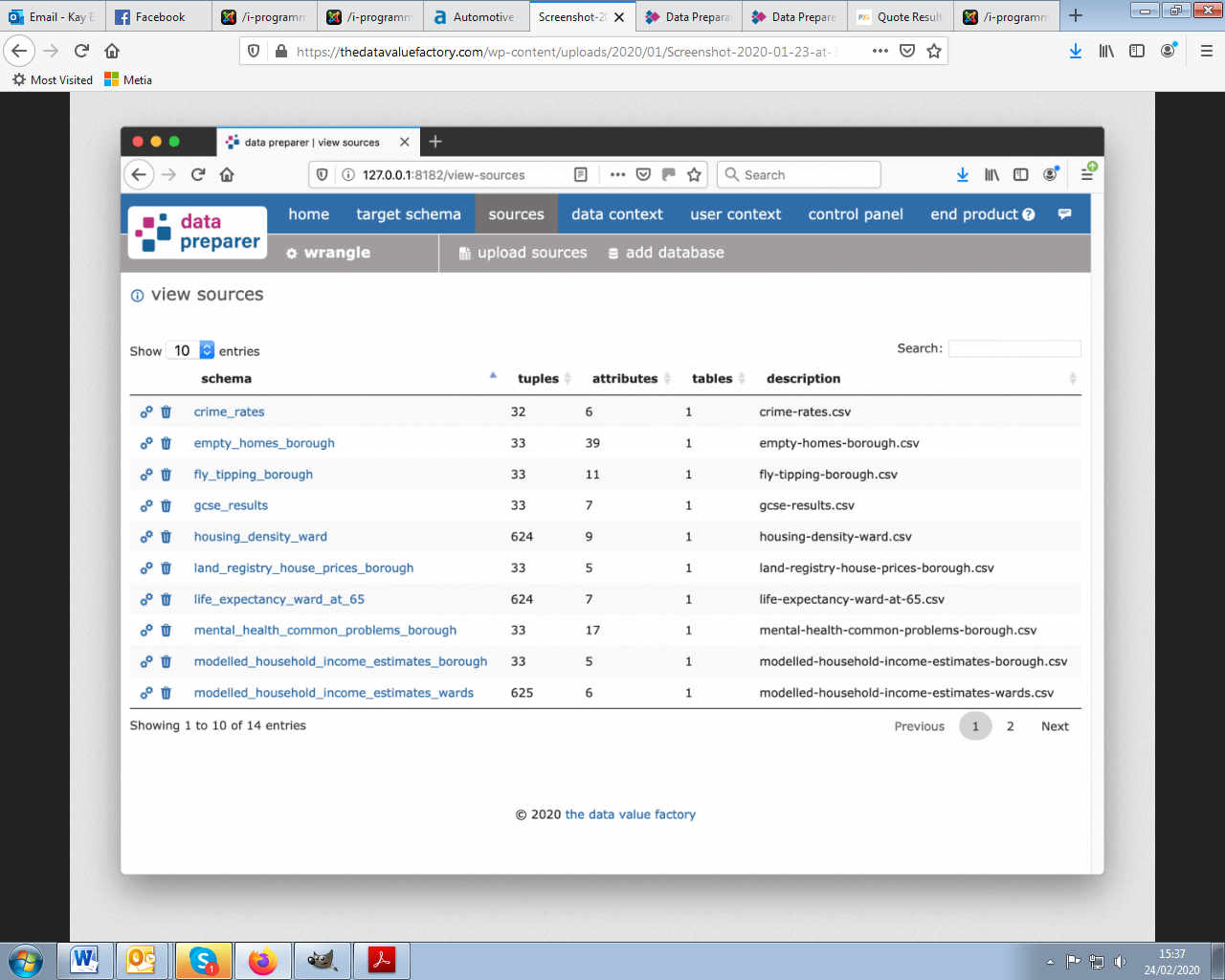

To use the tool, users provide any number of data sources, a target structure, quality priorities, and optionally example data. The target structure and quality priorities make users’ requirements explicit. The example data provides evidence that is used by Data Preparer to clean and integrate the data. The Data Preparer system will then explore how the data sources relate to each other and the target, repair and reformat where necessary, and populate the target from the sources. The user doesn't need to supply data processing pipelines, edit spreadsheets, or write scripts or rules to operate on the data, and data from several sources can be automatically repaired, transformed, and combined. Once the target data is assembled, you can look at the way the data values were arrived at, so it's possible to check the validity.

Existing data preparation solutions involve, without exception, a significant number of fine-grained decisions, typically impeding data preparation at scale. The Manchester-based company expects that in contrast to other data preparation tools where the user needs to make multiple decisions, those using Data Preparer will be able to get better value from their data even where the number of available data sources is prohibitively large for conventional manual data preparation, or where data sources lack a full schema definition, such as data lake sources, or web extraction results.

More InformationRelated ArticlesGitHub For Data Under Development New Database For Data Scientists Iodide - A New Tool For Scientific Communication And Exploration New Public Datasets Added To AWS

To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on Twitter, Facebook or Linkedin.

{laodposition comment} |

|||

| Last Updated ( Thursday, 27 February 2020 ) |