CORFU - Flash Fault Tolerant Distributed Storage

Written by Alex Denham

Wednesday, 05 September 2012

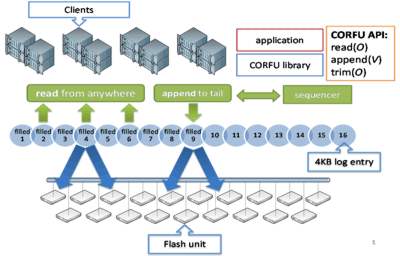

Researchers at Microsoft are working on a NAND flash-based data storage system that is both strongly consistent and high performance, and that can be used in designs that are impractical on hard disk or too expensive in RAM.

CORFU (Clusters of Raw Flash Units) is a cluster of network-attached flash that appears to the outside as a global shared log. The team working on CORFU has two primary goals for the technology. Used as a shared log, the team says, flash storage reduces the trade-off between performance and consistency, so that applications such as databases can run at wire speed. Used as a distributed SSD, CORFU can eliminate storage servers, reducing both the power used and the overall hardware cost.

Conventional data center storage is currently set up to provide either consistency or performance. Systems that provide consistency rely on aggregated storage, which is expensive and doesn't provide fault tolerance. The less expensive partitioned storage is better for performance but doesn't provide consistency. By using NAND flash, CORFU can provide both strong consistency and high performance, the researchers say.

The distributed nature of CORFU has several advantages. Data center designers can distribute the storage throughout the data center, avoiding bandwidth bottlenecks. Distributed storage also makes hardware failures less problematic, because even if one part of the storage is lost, other parts remain in use.

CORFU makes the storage available as a global shared log, where hundreds of client machines make fast sequential writes to the tail of a single log and fast contention-free reads from its body. This configuration reduces the impact of hardware failures while providing strong consistency.
The uses suggested by the researchers include a consensus engine for consistent replication; a transaction arbitrator for isolation and atomicity; and an execution history for replica creation, consistent snapshots, and geo-distribution. They also suggest it could be used as a very fast primary data store that makes fast appends on the underlying media.

The research team suggests a configuration (see Figure 1) in which application servers access the shared global log using the CORFU library, which has a simple API. Most of the intelligence of CORFU is implemented in the library, so that simple NAND flash storage units can be used as the hardware.

Each position in the shared log is mapped to a set of flash pages on different flash units. The application servers act as the clients for the system, and the CORFU library maintains local copies of the map at the clients. When a client wants to read from a particular position in the shared log, it uses its local copy of the map to identify a corresponding physical flash page, and reads directly from the flash unit storing that page. To append data, a client first works out the next available position in the shared log, then writes the data directly to the set of physical flash pages mapped to that position. Contention with other clients appending data is avoided by using a sequencer node.

Figure 1: Clients using the CORFU library to access the global log stored across many flash units
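The read and append paths described above can be illustrated with a small in-memory sketch. This is not the CORFU library's API: the class names (`FlashUnit`, `Sequencer`, `LogClient`), the round-robin mapping from log positions to replica sets, the replication factor of two, and the rule that a page may be written only once are all assumptions made for illustration.

```python
class FlashUnit:
    """Hypothetical stand-in for one network-attached flash unit."""

    def __init__(self):
        self._pages = {}

    def write(self, page, data):
        # Assumption of this sketch: a page may be written only once.
        if page in self._pages:
            raise ValueError(f"page {page} already written")
        self._pages[page] = data

    def read(self, page):
        return self._pages[page]


class Sequencer:
    """Hands out the next free log position so appenders do not collide."""

    def __init__(self):
        self._tail = 0

    def next_position(self):
        position = self._tail
        self._tail += 1
        return position


class LogClient:
    """Client-side logic: holds a local map and talks to units directly."""

    def __init__(self, units, sequencer, replicas=2):
        if len(units) < replicas:
            raise ValueError("need at least one full replica set")
        self.units = units
        self.sequencer = sequencer
        self.replicas = replicas
        self.num_sets = len(units) // replicas

    def locate(self, position):
        """Map a log position to (unit, page) pairs on different units.

        Assumed mapping: positions are striped round-robin over replica sets.
        """
        set_index = position % self.num_sets
        page = position // self.num_sets
        start = set_index * self.replicas
        return [(u, page) for u in self.units[start:start + self.replicas]]

    def append(self, data):
        # Ask the sequencer for the tail, then write to every mapped page.
        position = self.sequencer.next_position()
        for unit, page in self.locate(position):
            unit.write(page, data)
        return position

    def read(self, position):
        # Any page in the set holds the data; this sketch reads the first.
        unit, page = self.locate(position)[0]
        return unit.read(page)


if __name__ == "__main__":
    units = [FlashUnit() for _ in range(4)]
    sequencer = Sequencer()
    alice = LogClient(units, sequencer)
    bob = LogClient(units, sequencer)
    first = alice.append(b"put k=1")
    second = bob.append(b"put k=2")
    print(first, second, bob.read(first), alice.read(second))
```

In this sketch two clients that share a sequencer receive distinct positions, so their appends land on different flash pages without the clients coordinating with each other, which is the role the article attributes to the sequencer node.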

Microsoft researchers are prototyping a network-attached flash unit on an FPGA platform to be used with the CORFU stack. This combination provides the same rate of networked throughput as a Xeon-hosted SSD, but with 33 percent lower read latency and an order of magnitude less power.

More Information

CORFU: Clusters of Raw Flash Units

Related Articles

Microsoft's New File System ReFS


Last Updated ( Wednesday, 05 September 2012 )