Can AI Change the Face of Cyber Security?

Written by Edward Jones

Friday, 12 August 2016

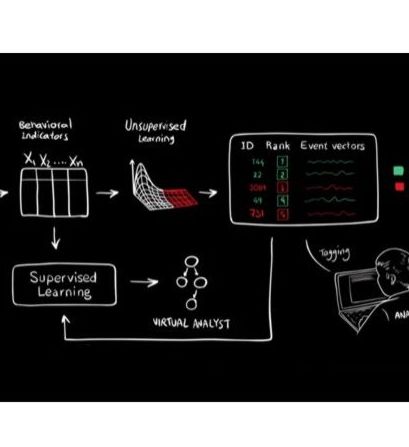

Researchers at CSAIL are working on an artificial intelligence platform that is already capable of detecting 85% of cyber attacks and provides a glimpse of a future where humans may no longer be needed to defend business systems. How much responsibility can be given over to ‘robots’ to do the work of security professionals?

Businesses face an ever-increasing challenge to protect their assets from cyber criminals. The sophistication and frequency of attacks continue to rise as these criminals take advantage of rapidly advancing technologies. Even with the latest machine-driven security systems, it is becoming increasingly difficult to differentiate between a genuine employee or website visitor and a criminal seeking to breach or bring down a network and its systems. Cyber security professionals face the prospect that they have reached a ceiling in terms of what humans can achieve. Does the future of cyber security defence now depend on robots?

In April 2016, the Computer Science and Artificial Intelligence Laboratory (CSAIL) at MIT announced an artificial intelligence platform named AI2. Developed in partnership with machine-learning start-up PatternEx, AI2 sparks an interesting debate about the future role of artificial intelligence in protecting an organisation from cyber attacks and the extent to which businesses will trust robots to take care of their security.

Currently, AI2 uses unsupervised machine learning to analyse data and detect suspicious activity. Its findings are then passed to human cyber security experts, who confirm whether each suspected activity is an attack or a false positive. The machine then incorporates this feedback when it analyses the next set of data, continuously learning and improving. A video accompanying this article describes how the platform works in simplified terms.
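The detect, review and learn cycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not AI2's actual method: a simple z-score stands in for the unsupervised detector, a set of analyst-cleared sources stands in for the learned model, and the event fields (`source`, `failed_logins`), the function names and the sample data are all hypothetical.

```python
from statistics import mean, pstdev


def anomaly_scores(events):
    """Unsupervised step: absolute z-score of each event's failed-login count."""
    counts = [e["failed_logins"] for e in events]
    mu, sigma = mean(counts), pstdev(counts)
    if sigma == 0:
        return [0.0] * len(events)
    return [abs(c - mu) / sigma for c in counts]


def top_suspects(events, k, cleared_sources):
    """Return the k most anomalous events, skipping sources an analyst has cleared."""
    scored = zip(anomaly_scores(events), events)
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [e for _, e in ranked if e["source"] not in cleared_sources][:k]


def review_round(events, k, analyst, cleared_sources, confirmed_attacks):
    """One feedback round: the analyst labels each suspect and the labels are kept."""
    for event in top_suspects(events, k, cleared_sources):
        if analyst(event):
            confirmed_attacks.add(event["source"])
        else:
            cleared_sources.add(event["source"])


# Hypothetical log summary: one noisy but harmless job and one real attacker.
events = [
    {"source": "10.0.0.11", "failed_logins": 2},
    {"source": "10.0.0.12", "failed_logins": 3},
    {"source": "10.0.0.13", "failed_logins": 1},
    {"source": "10.0.0.14", "failed_logins": 2},
    {"source": "backup-job", "failed_logins": 60},
    {"source": "10.0.0.66", "failed_logins": 45},
]


def analyst(event):
    """Stand-in for the human expert; here the true attacker is known in advance."""
    return event["source"] == "10.0.0.66"


cleared, confirmed = set(), set()
review_round(events, 1, analyst, cleared, confirmed)  # round 1
review_round(events, 1, analyst, cleared, confirmed)  # round 2
print("cleared:", cleared, "confirmed:", confirmed)
```

With this toy data, the first round spends the analyst's attention on the harmless backup job, which is then cleared. The second round skips it and surfaces the real attacker, which is the sense in which human feedback reduces false positives over time.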

As of April 2016, the platform had analysed more than 3.6 billion log lines, identifying 85 percent of attacks. This is three times more effective than existing benchmarks achieved by machine learning platforms. The system is also capable of reducing the number of false positives by a factor of five.

As impressive as AI2's achievements are, it is unlikely that cyber security professionals are ready to hand over responsibility for defending their systems just yet, especially considering that the platform still requires human intervention to learn and improve. It is also not clear what action the system takes in the event of an attack, and whether this defence is automated.

For now, advances come when humans and machines work together. Machines can do the heavy lifting, scanning up to 1 million log lines a day, a task that could take a team of experts months, and identifying focus areas for the team to work on. Humans identify false positives and take steps to help machines learn to be more effective.

Despite the large improvements demonstrated by MIT and PatternEx, the system still misses 15% of genuine attacks. Humans are still required to plug this gap to keep an organisation safe.

Although for now it looks as though humans must lead the way in cyber security defence, using machine-led security systems to augment their efforts, AI2 demonstrates that cyber security detection and protection systems are advancing at a rapid rate. The time when all-encompassing artificially intelligent security platforms can be left alone to defend our businesses appears to be drawing ever closer.

More Information

Artificial Intelligence (AI) capable of detecting 85% of cyber attacks

Related Articles

Achieving Autonomous AI Is Closer Than We Think

Mayhem Wins DARPA Cyber Grand Challenge



Last Updated ( Monday, 15 August 2016 )