Tricking The Tesla

Written by Alex Armstrong

Sunday, 23 February 2020

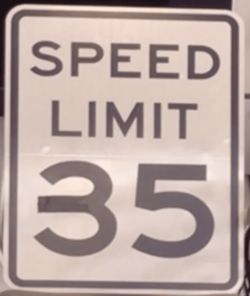

The success of autonomous vehicles relies on them being safe and trustworthy. Security researchers have revealed how easy it is to fool self-driving Teslas by sticking tape on a speed limit sign and by projecting an image of an Elon Musk lookalike onto the roadway.

McAfee Advanced Threat Research, a team led by Steve Povolny, has coined the term "model hacking" to describe its use of adversarial attacks targeting artificial intelligence models and features. The defacing of a standard 35 mph sign using just a 2-inch strip of black electrical tape was the culmination of a series of lab tests into how to fool machine learning algorithms into misclassifying traffic signs, with the Mobileye camera system and the Tesla Model S as test subjects. As the video shows, with this small alteration the ADAS (advanced driver-assistance system) on the Tesla Model S detected the speed limit sign, interpreted the 35 mph sign as indicating 85 mph and sped away.

As we have previously noted regarding adversarial images, small changes, often not even noticed by humans, who continue to see what they expect to see, are enough to mislead the neural networks used by computer vision systems. So McAfee has identified a situation in which autonomous vehicles are at greater risk than human drivers. In addition, a human trying to decide whether a sign said 35 or 85 would take clues from the context into account. However, the setting for this test offered no such additional information: it took place in what appears to be an empty parking lot, so there was no opportunity to judge the likelihood of any specific speed limit.

A different type of perceptual challenge to driver-assistance systems was demonstrated by researchers from Ben-Gurion University, who started from the premise that the inability to validate visual perceptions leaves semi- and fully autonomous vehicles vulnerable to malicious attack.
They created depthless objects, referred to as "phantoms", using drone-mounted projectors to trick the ADAS into perceiving objects in the road ahead. Projecting a tuxedo-clad figure based on Elon Musk caused the Tesla Model X to brake and take evasive action, which could put it in the path of oncoming vehicles. The researchers also projected fake speed limit signs and fake road markings, which also misled the autopilot systems. Human drivers might well have been duped by the fake road markings and would probably have been uncertain how to respond to seeing a phantom Elon Musk looming in the road ahead of them.

Commenting on the research, Ben Nassi, lead author of the paper "Phantom of the ADAS: Phantom Attacks on Driver-Assistance Systems" and a Ph.D. student of Prof. Yuval Elovici in BGU's Department of Software and Information Systems, said: "This type of attack is currently not being taken into consideration by the automobile industry. These are not bugs or poor coding errors but fundamental flaws in object detectors that are not trained to distinguish between real and fake objects and use feature matching to detect visual objects."

In fact, computer vision systems can probably be trained to distinguish between flat, projected phantoms and solid objects rather better than human drivers can. However, there is another approach to problems of this type, which is to tackle them through legislation. Laws have had to be passed to permit autonomous vehicles on the road, and maybe we need extra rules and regulations to protect both driverless cars and human drivers from malicious behavior such as altering road traffic signs and projecting misleading images onto the carriageway. Perhaps appropriate legislation already exists and is just waiting to be used. Either way, the answer is not always to improve artificial intelligence; sometimes it is simply to use some common sense.

More Information

Model Hacking ADAS to Pave Safer Roads for Autonomous Vehicles

Phantom of the ADAS: Phantom Attacks on Driver-Assistance Systems (pdf) by Ben Nassi, Dudi Nassi, Raz Ben-Netanel, Yisroel Mirsky, Oleg Drokin, Yuval Elovici

Related Articles

Robot cars - provably uncrashable?

Last Updated (Monday, 24 February 2020)