The Best Wordle Initial Guess Is...

Monday, 18 April 2022


SLATE - probably. It is amazing that a game as simple as Wordle has taken the world by storm. It gives hope that there might be other similar gems waiting to be unearthed. But what about a good strategy for solving the game?

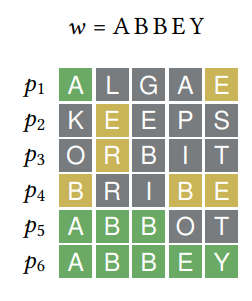

Wordle is essentially the game of Mastermind, where you have to guess a set of colors, but with words. The game would be exactly Mastermind if it wasn't for the fact that all the guesses have to be valid words in an agreed dictionary. Currently the dictionary is 2,315 five-letter words, using American spelling, but this can be changed to produce different varieties of the game. The need to use the words in the dictionary means that you cannot freely select "probe" words - they have to be words in the dictionary.

If you could use arbitrary probe words then the best choice for the first guess would be a selection of the most probable letters. Subsequent guesses should be based on the information derived from the first guess and, again, on the probabilities of letters occurring. If you can't use arbitrary probe words then things are more difficult.

One attempt at a good algorithm in a recent paper is interesting because it takes into account the dictionary in use and the similarities between words. It reduces the words to vectors - a common trick when neural networks are applied to language. In this case the coding to vectors is very simple. The first approach is a 26 x 1 column vector recording which letters are in the word irrespective of position. For example, CAB would be (1,1,1,0,0,0 etc). This sort of vector gives you the frequency with which individual letters occur. A more sophisticated approach is to code using a 26 x 1 vector for each of the five possible positions, i.e. a 26 x 5 matrix or, stacking the vectors one after another, a 130 x 1 vector. This sort of representation gives the frequencies of each letter by position.

The approach works by creating a list of possible solutions given the information so far and treating this as a big matrix of vectors. Next, perform a principal components analysis of the matrix to find the most characteristic distribution of letters - the eigenword.
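
The two encodings and the eigenword can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code from the paper's notebook: the function names are made up for this example, the "principal component" is taken to be the dominant right singular vector of the uncentered word matrix (a rank-one approximation), and the final function anticipates the correlation-based selection step that the article describes next.

```python
import numpy as np

ALPHABET = "abcdefghijklmnopqrstuvwxyz"

def encode_presence(word):
    """26-entry vector: 1 where a letter occurs anywhere in the word."""
    v = np.zeros(26)
    for ch in word.lower():
        v[ALPHABET.index(ch)] = 1.0
    return v

def encode_positional(word, length=5):
    """(26 * length)-entry vector: one 26-entry block per letter position."""
    v = np.zeros(26 * length)
    for pos, ch in enumerate(word.lower()):
        v[26 * pos + ALPHABET.index(ch)] = 1.0
    return v

def eigenword(words, encode=encode_positional):
    """Dominant right singular vector of the matrix whose rows are encoded words.

    Assumption: no mean-centering is applied before the decomposition.
    """
    matrix = np.array([encode(w) for w in words])
    _, _, vt = np.linalg.svd(matrix, full_matrices=False)
    v = vt[0]
    # Singular vectors are only defined up to sign; make the entries non-negative.
    return v if v.sum() >= 0 else -v

def best_guess(words, encode=encode_positional):
    """Pick the candidate whose encoding correlates most strongly with the eigenword."""
    target = eigenword(words, encode)
    scores = [np.corrcoef(encode(w), target)[0, 1] for w in words]
    return words[int(np.argmax(scores))]

if __name__ == "__main__":
    candidates = ["slate", "plate", "skate", "crane"]
    print(best_guess(candidates))
```

The toy candidate list here is just for demonstration; with a real word list the same functions would be called on the current set of possible solutions after each round of feedback.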
Of course the eigenword will have fractional entries proportional to the likelihood of each letter occurring in the solution. To convert these to actual letter selections, take each word in the set of possible solutions, compute the similarity (measured by correlation) between it and the eigenword, and select the one with the largest similarity. Given the discreteness of words and the fuzziness of the eigenvector approach it is amazing that anything works - but it seems to. Applying the analysis to find the best starting guess revealed:

In the results table, the first row uses every acceptable guess, i.e. the entire dictionary, as the candidate set for the first guess, under each of the two methods of representing words by vectors. The second row uses the published Wordle solutions as the initial candidate set. Testing the starting words by actually playing the game showed that SLATE was the best choice, reaching the solution in just over four guesses and winning close to 99% of the time. The improvement over a random algorithm isn't huge and there might be even better methods. If you want to try your hand, the Python code is provided in a Jupyter notebook.
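
To test a starting word by playing the game you need the Wordle feedback rule and a way to prune the candidate list after each guess. The following is a minimal sketch of that loop, not the notebook's implementation: `feedback`, `filter_candidates` and `play` are hypothetical names, the `choose` argument stands in for whatever strategy is being evaluated, and the solution is assumed to be in the candidate list.

```python
from collections import Counter

def feedback(guess, solution):
    """Return one mark per letter: G (right place), Y (wrong place), B (absent)."""
    result = ["B"] * len(guess)
    remaining = Counter()
    # First pass: mark greens and count the solution letters left unmatched.
    for i, (g, s) in enumerate(zip(guess, solution)):
        if g == s:
            result[i] = "G"
        else:
            remaining[s] += 1
    # Second pass: a yellow uses up one unmatched copy of that letter.
    for i, g in enumerate(guess):
        if result[i] != "G" and remaining[g] > 0:
            result[i] = "Y"
            remaining[g] -= 1
    return "".join(result)

def filter_candidates(candidates, guess, pattern):
    """Keep only the words that would have produced the observed pattern."""
    return [w for w in candidates if feedback(guess, w) == pattern]

def play(solution, candidates, choose, first_guess, max_turns=6):
    """Return the number of guesses used, or None if the game is lost.

    Assumes `solution` is one of `candidates`, so the pool never empties.
    """
    guess = first_guess
    pool = list(candidates)
    for turn in range(1, max_turns + 1):
        pattern = feedback(guess, solution)
        if pattern == "G" * len(solution):
            return turn
        pool = filter_candidates(pool, guess, pattern)
        guess = choose(pool)
    return None

if __name__ == "__main__":
    words = ["crane", "plate", "skate", "slate"]
    # Trivial strategy for illustration: always take the first remaining word.
    print(play("slate", words, lambda pool: pool[0], first_guess="crane"))
```

Averaging the result of `play` over every possible solution gives the kind of "guesses needed" and win-rate figures quoted above for a given starting word.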

More Information

Wordle and Rank One Approximation - Using Math to Win Social Credit in Wordle

Related Articles

N Queens Completion Is NP Complete
Rubik's Cube Is Hard - NP Hard
Column Subset Selection Is NP-complete
Unshuffling A Square Is NP-Complete
Classic Nintendo Games Are NP Hard


Last Updated ( Monday, 18 April 2022 )