| Open Source Has As Good Code Quality As Proprietary Code |

| Written by Alex Armstrong | |||

| Thursday, 09 May 2013 | |||

|

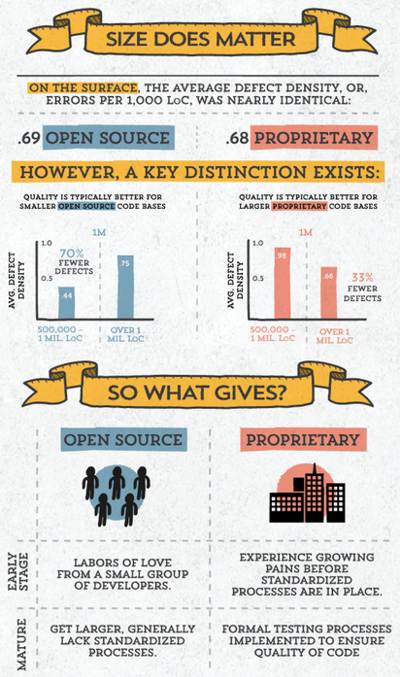

The recently released 2012 Coverity Scan Report shows that open source and proprietary code vie for top spot when it comes to reducing detected defects. The Coverity Scan service was initiated in 2006 with the U.S. Department of Homeland Security as the largest public-private sector research project in the world, focused on open source software quality and security. DHS ended the project but Coverity continues to offer its development testing technology as a free service to the open source community. C C++ and, since early 2013, Java-based projects can apply to be scanned and get reports on defects. LibreOffice, MariaDB, NetBSD, NGINX, Git, zsh, Thunderbird and Firefox are among the well-known projects scanned by Coverity Scan. The latest report details the analysis of more than 450 million lines of software code, the single largest sample size that the report has studied to date. Among it key findings is the reassuring one that for the second consecutive year both open source code and proprietary code scanned by Coverity have achieved defect density below one in every thousand lines of code, which is the industry-standard density defect level and provides the index of 1.0. Proprietary code has an average defect density of 0.68, open source code averages 0.69 and Linux, described by Coverity as the "benchmark of quality" achieved 0.59. A particularly interesting finding is that as projects grow beyond a million lines of code there is a switch in the performance of open source and proprietary code. Quality is better for smaller open source projects with a defect density of.44 in projects with between 500,000 – 1,000,000 lines of code than for larger ones in which defect density rose to .75. The opposite phenomenon is observed in proprietary code where the smaller projects had a defect density of .98 which decreased to 0.66 as they exceeded the threshold. Coverity concludes: This discrepancy can be attributed to differing dynamics within open source and proprietary development teams, as well as the point at which these teams implement formalised development testing processes. This is illustrated in this portion of the infographic summarizing the report's findings. Click on it to see the full infographic:

Despite the low defect density the defects that remain are serious. Fewer than 10% of the defects fixed affected open source code - 20,720 of the 229,219 defects, but of those 36% were classified as high risk, meaning that they could pose a considerable threat to overall software quality and security if undetected. The most common were resource leaks, memory corruption and illegal memory access, which are difficult to detect without automated code analysis. Coverity's Chief Marketing Officer, Jennifer Johnson, commented: "This year’s report had one overarching conclusion that transcended all others: development testing is no longer a nice-to-have, it’s a must-have. Of course static analysis doesn't find all of the bugs so these estimates are a lower bound for bugs per thousand lines. More InformationRegister to obtain 2012 Coverity Scan Report Related ArticlesLibreOffice Initiative To Eradicate Most Annoying Bugs Code Digger Finds The Values That Break Your Code TraceGL - An Oscilloscope For Code A Bug Hunter's Diary (book review)

To be informed about new articles on I Programmer, install the I Programmer Toolbar, subscribe to the RSS feed, follow us on, Twitter, Facebook, Google+ or Linkedin, or sign up for our weekly newsletter.

Comments

or email your comment to: comments@i-programmer.info

|

|||

| Last Updated ( Thursday, 09 May 2013 ) |