| Tensor Operations Are NP Hard |

| Written by Mike James | |||

| Thursday, 01 August 2013 | |||

|

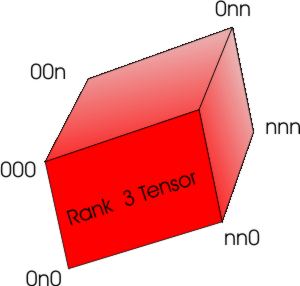

Most non-mathematicians might think that tensor operations are pretty hard without any formal proof, but new results prove that they are NP Hard which is not good news if you are trying to work something out using them. A tensor is the n-dimensional generalization of a matrix. A matrix is a 2D tensor in that it has rows and columns. A 3D tensor is a cube of numbers with rows, columns and slices. In a matrix the numbers are indexed by two variables, e.g. something like aij; in a tensor the number of indexing variables can be more than two, e.g. aijk for a tensor of dimension, or more accurately rank 3. We use matrix algebra and matrix numerical methods very often in almost any numerical program - it is the foundation of just about all the number crunching we do. We solve linear systems of equations, find eigenvalues, perform singular value decompositions and so on. These might be regarded as difficult math by some programmers, but for the majority of these tasks we have polynomial time algorithms. That is, vector and matrix computations are in P.

Tensor algebra generalizes the the linear algebra that we are all familiar with to higher dimensions - multi-linear algebra. It turns up in advanced geometry, the theory of gravity - the general theory of relativity that is, numerical mechanics, and so on. It is also being used in AI and computer vision and a number of cutting edge approaches to all sorts of topics. The good thing about tensor algebra is that from an algorithmic point of view it isn't really anything new - in most cases just a few more indexes to cope with. Surely the algorithms that we need to work with say rank-3 tensors is no more difficult than for rank-2 tensors, i.e. matrices? It has to be in P - right? Well no. According to a recent paper, things are much worse for rank-3 tensors. It seems that they mark a dividing line between the linear convex problems that are tractable and the more difficult non-linear non-convex class. In the paper a whole list of generalizations of tractable matrix problems are shown to be NP-hard in their rank 3 formulations. These include finding eigenvalues, finding approximate eigenvalues, symmetric eigenvalues, singular values, proving positive definiteness, approximating a tensor by rank 1 tensors and so on. There is even a mention of a generalized question about finding a matrix function which is shown to be undecidable for any tensor 20x20x2 or greater. This is a big surprise because the equivalent matrix function can be easily found and another hint that throwing in just one extra index to a matrix problem makes it very much more difficult. As the title of the paper puts it - Most tensor problems are NP-hard. Should we abandon tensor methods because most of them are not in P? You need to keep in mind that these sorts of analysis are only valid asymptotically when the size of the tensor n in an n x n x n tensor gets very large. It could well be that for a range of small n we can find algorithms that get the job done in reasonable time. The fact that a problem is NP-hard doesn't mean its impossible! The paper concludes with some open questions and, if you are reasonable at math you should find it an interesting read. More InformationMost tensor problems are NP-hard Related ArticlesFinding Solutions To Diophantine Equations By Smell Travelling Salesman - A Movie About P=NP Pancake flipping is hard - NP hard

To be informed about new articles on I Programmer, install the I Programmer Toolbar, subscribe to the RSS feed, follow us on, Twitter, Facebook, Google+ or Linkedin, or sign up for our weekly newsletter.

Comments

or email your comment to: comments@i-programmer.info

|

|||

| Last Updated ( Thursday, 01 August 2013 ) |