The Truth About Spaun - The Brain Simulation

Written by Mike James

Sunday, 02 December 2012


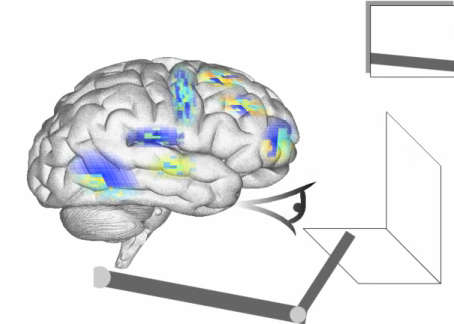

Spaun is a 2.5 million neuron model of the brain that can do useful things - recognize numbers, do arithmetic and write the answers using a simulated arm - but is this the breakthrough it seems to be?

Hard on the heels of the news that IBM has simulated 530 billion neurons, we have headline news about Spaun. This is another big neural simulation but, unlike the IBM neural network, Spaun does impressive things.

In a paper published in Science, and behind a paywall despite the fact that this is publicly funded research, Chris Eliasmith and his colleagues describe Spaun and release a video showing it recognize numbers, generate answers to simple numerical questions, and write them down using a simulated arm.

The whole presentation is very impressive and designed to give the strongest possible impression that this is indeed a brain. As a result it has been reported that Spaun has an eye which lets it see the world and an artificial thalamus to perform visual processing. You can see in the video that even the activity of the neurons in the network is mapped to a drawing of the brain showing where the subsystems are located.

You are invited, encouraged and can't help but conclude that this is a thinking brain. This has resulted in headlines like:

"Scientists create functioning, virtual brain that can write, remember lists and even pass basic IQ test"

The mappings are rather like showing a cartoon of a man with muscles flexing as a way of explaining how a steam engine cylinder works. It may not be wrong, but it would be misleading to conclude that a man is a steam engine. Over-claiming results is one of the things that damages the entire subject of AI and, while this simulation is an interesting step along the road, it is important to understand exactly what is going on.

The first thing to point out is that Spaun doesn't learn anything. It can be arranged to tackle eight pre-defined tasks and it doesn't learn any new tasks or modify the way it performs existing tasks.

The whole system is based on the Neural Engineering Framework - NEF - which can be used to compute the strengths of the connections needed to make a neural network do a particular task. If you want a neural net to implement a function of the inputs f(x) then NEF will compute the parameters for a leaky integrate-and-fire network that will do the job.

This is an interesting approach, but it doesn't show any of the plasticity that the real brain and real neural networks show. If anything, this approach is more like the original McCulloch and Pitts networks where artificial neurons were hand-crafted to create logic gates. For example, you can put neurons together to create a NAND gate and from here you can use them to implement a complete computer - a PC based on a Pentium, say, using the neuronal NAND gates to implement increasingly complex logic. It would all work but it wouldn't be a thinking brain or a model of a neuronal computer.
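To make the NAND gate point concrete, here is a minimal sketch of a hand-crafted threshold neuron. It is an illustration only: the function names are made up for this example, and the unit is a simplified threshold neuron with negative weights rather than a faithful reproduction of the original McCulloch and Pitts formalism, which treated inhibition differently.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of the inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def nand(a, b):
    # Hand-picked parameters, nothing is learned: two inhibitory inputs
    # silence the neuron only when both are active.
    return threshold_neuron((a, b), weights=(-1, -1), threshold=-1)


def half_adder(a, b):
    """One-bit adder wired entirely from NAND neurons. Returns (sum, carry)."""
    n = nand(a, b)
    sum_bit = nand(nand(a, n), nand(b, n))  # XOR built from four NANDs
    carry_bit = nand(n, n)                  # AND built from two NANDs
    return sum_bit, carry_bit


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "NAND:", nand(a, b), "half adder:", half_adder(a, b))
```

Every weight and threshold here was chosen by the designer. The network does arithmetic because it was built to, which is the sense in which such a construction works without being a model of a learning brain.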

In the same way, Spaun is an interesting mechanism composed of sub-units that have been engineered to a specification using the NEF. The key idea in building these structures is the "Semantic Pointer" - Spaun is short for Semantic Pointer Architecture Unified Network. But the fact that the overall system can do the tasks that are demonstrated is just a reflection of the fact that it was engineered to do exactly those tasks. This in itself is impressive and worth further study, but we need to understand that this is not as much like a brain as it appears to be.

You can have a go at your own NEF-based systems using the Nengo neural simulator, which was used to implement Spaun among other demonstration networks. You can place some neurons on the design surface and in no time at all convert them into a control system or a memory device. You could then put these devices together into plausible brain-like organizations that also do specific tasks.

It is very clever but there isn't a scrap of machine learning in there. This is neural nets as engineering. For example, the control of an arm or other motor device follows traditional control systems engineering principles with feedback, overshoot and transfer functions.

So, moving away from the hype, this is still impressive. If the same techniques can be modified and extended to include the learning methods being discovered and tried out by the "deep learning" school of AI, then perhaps we will have a brain in a box very soon. In the meantime, what we have is a demonstration that a highly structured neural net can do brain-like things as long as we put the structure in.

The NEF seems like something worth finding out about. Eliasmith is writing a book about it called "How to build a brain" but meanwhile there's an older title, already published.
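For a rough taste of what "computing the connection strengths for f(x)" can look like, the sketch below fits linear decoding weights to the steady-state firing rates of a small leaky integrate-and-fire population by regularized least squares. It is a simplified, rate-based illustration and not Nengo's API or Spaun's actual code: the function names, the parameter values (time constants, gain and bias ranges, regularization) and the random +1/-1 encoders are all assumptions made for this example.

```python
import math
import random

TAU_RC = 0.02    # membrane time constant in seconds (assumed value)
TAU_REF = 0.002  # refractory period in seconds (assumed value)


def lif_rate(current):
    """Steady-state LIF firing rate in Hz for a constant input current (threshold = 1)."""
    if current <= 1.0:
        return 0.0
    return 1.0 / (TAU_REF - TAU_RC * math.log(1.0 - 1.0 / current))


def make_population(n, rng):
    """Each neuron is (encoder, gain, bias); all three are picked at random."""
    return [(rng.choice((-1.0, 1.0)), rng.uniform(1.0, 5.0), rng.uniform(-1.0, 2.0))
            for _ in range(n)]


def activities(pop, x):
    """Firing rate of every neuron in the population for the scalar input x."""
    return [lif_rate(gain * enc * x + bias) for enc, gain, bias in pop]


def solve(matrix, rhs):
    """Solve matrix * d = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    d = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * d[j] for j in range(i + 1, n))
        d[i] = (aug[i][n] - tail) / aug[i][i]
    return d


def solve_decoders(pop, func, samples, reg=0.01):
    """Ridge-regularized least squares: weights d so that sum(d_i * a_i(x)) ~ func(x)."""
    acts = [activities(pop, x) for x in samples]
    targets = [func(x) for x in samples]
    n = len(pop)
    peak = max(max(row) for row in acts)
    ridge = (reg * peak) ** 2 * len(samples)  # assumed regularization scale
    gram = [[sum(row[i] * row[j] for row in acts) + (ridge if i == j else 0.0)
             for j in range(n)] for i in range(n)]
    upsilon = [sum(row[i] * t for row, t in zip(acts, targets)) for i in range(n)]
    return solve(gram, upsilon)


def decode(pop, decoders, x):
    """Estimate func(x) from the population's firing rates."""
    return sum(d * a for d, a in zip(decoders, activities(pop, x)))


if __name__ == "__main__":
    rng = random.Random(0)
    pop = make_population(30, rng)
    xs = [i / 50.0 - 1.0 for i in range(101)]
    dec = solve_decoders(pop, lambda x: x * x, xs)
    for x in (-1.0, -0.5, 0.0, 0.5, 1.0):
        print(x, x * x, decode(pop, dec, x))
```

Note that the weights come from solving a set of linear equations once, ahead of time. Nothing in the network changes in response to experience, which is the sense in which this is engineering rather than learning.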

More Information

Chris Eliasmith's Home Page

Related Articles

IBM's TrueNorth Simulates 530 Billion Neurons

The Influence Of Warren McCulloch

Google's Deep Learning - Speech Recognition

A Neural Network Learns What A Face Is

The Paradox of Artificial Intelligence


Last Updated ( Sunday, 02 December 2012 )