| Google Explains How AI Photo Search Works |

| Written by Mike James | |||

| Friday, 14 June 2013 | |||

|

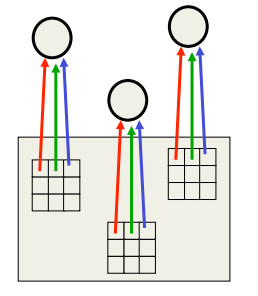

A short time ago we reported that Google was using some sort of AI to find photos that contained named objects. It seemed a reasonable guess to say that it was using convolutional neural network but there were no details - now there are. If you missed the breakthrough, we reported it in Deep Learning Finds Your Photos where we speculated that it was based on a deep convolutional network and commented: Surely Google could make a bit more of a fuss about the technology than it is doing at the moment! Yes it is a convolutional neural network and more specifically one based SuperVison built by the team led by Geoffrey Hinton that won the ImageNet Large Scale Visual Recognition Challenge 2012. The Google AI group that was started by Jeff Dean and Andrew Ng evaluated the approach and agreed that it did work better than anything else. This was the main reason Google acquired the AI startup, DNNresearch, that Hinton et al had put together. Google has used the technology to implement photo search as part of Google+. What is surprising about the result is that the basic idea, that of a neural network, which was introduced many years ago and then discredited, reinvented, discredited and so on, is being used essentially unchanged. The main new ideas are better training algorithms, but the overall the reason we now have something that looks like "real" AI in use is that we can train bigger networks. The details revealed on the Google Blog are much as you might expect from a study of the original. To make it suitable for Google+ photo classification, 2000 classes were derived based on the most popular manually applied labels that also seemed to have a visual content, i.e. "car" but not "beautiful". A total of 5000 images were used per class in training and then the most accurately learned 1100 classes were implemented in the final version. In training it was noticed that the network managed to generalize from the training to the test set even though the training set was based on images derived from the web that tended to show "archetypal" images. The test set, which was composed of real users images, tended to be more varied and less "archetypal".

An archetypal flower

A typical flower photo as uploaded to Google+

You can conclude that generalization was due to the convolutional design of the network, i.e. it responds in the same way to a pattern no matter where it is located in the photo but this doesn't explain the scale invariance. The blog also notes that the network learned categories with more than one common type of example - a car can be a photo of the outside of a car or the interior. This is also surprising as the final layer is a linear classifier, which doesn't generally do well on multimodal decision making. Presumably the low levels of the network were successful in mapping the two modes to the same sort of area in the data fed to the final layer. Even more surprising is the way the network managed to do a good job of learning abstract ideas - like kiss, dance and meal. Presumably this is because these apparently abstract concepts have more limited ranges of visual expression than you might have guessed. It also manged to classify finely distinguished classes like plant types. Perhaps the most interesting of all observations is that when the network got it wrong it did so in a way that seemed reasonable to a human observer. For example, when the network classifies the photo of a small donkey as a dog you can't help but say "I see what it is getting at". If you have done any work with expert systems or other types of AI system you will know that this is remarkable because a really big problem is explaining how the system got to its answer and more often than not why it got something so very wrong. All of these points suggest that this is just the start of the development of the system, but it works well enough to let the public use it. What seems to be needed is more of the same and not some amazing new breakthrough that means we use something completely different. We are at the billion parameter level and we need to progress to the trillion parameter level.

The age of the artificial neural network that can do really useful work seems to be upon us. More InformationImproving Photo Search: A Step Across the Semantic Gap ImageNet Classification with Deep Convolutional Neural Networks Finding your photos more easily with Google Search Neual Networks For Machine Learning Related ArticlesDeep Learning Finds Your Photos Deep Learning Researchers To Work For Google Google's Deep Learning - Speech Recognition A Neural Network Learns What A Face Is Speech Recognition Breakthrough

To be informed about new articles on I Programmer, install the I Programmer Toolbar, subscribe to the RSS feed, follow us on, Twitter, Facebook, Google+ or Linkedin, or sign up for our weekly newsletter.

Comments

or email your comment to: comments@i-programmer.info

|

|||

| Last Updated ( Monday, 17 June 2013 ) |