Meta's MultiModal, MultiLingual Translator

Written by Sue Gee

Tuesday, 21 January 2025

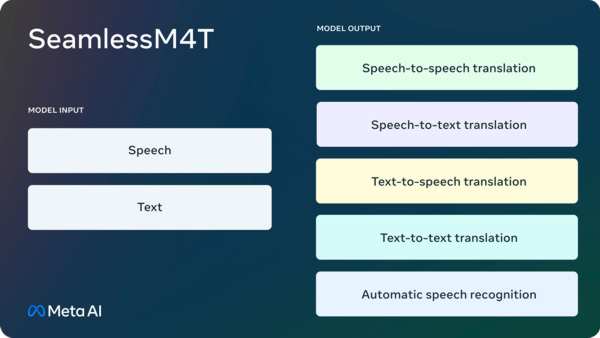

Meta has taken us a long way towards creating a Babel Fish, a tool that helps individuals translate speech between any two languages. This is thanks to SeamlessM4T, which is open source for non-commercial use and which Meta hopes will propel further research on inclusive speech translation technologies. SeamlessM4T, where M4T stands for Massively Multilingual and Multimodal Machine Translation, is a single model that supports speech-to-speech translation (currently from 101 to 36 languages), speech-to-text translation (from 101 to 96 languages), text-to-speech translation (from 96 to 36 languages), text-to-text translation (in 96 languages) and automatic speech recognition (in 96 languages). The model was originally unveiled in 2023 and, in keeping with Meta's policy of supporting open science, was open-sourced under a Creative Commons licence to allow researchers and developers to build on its work. Meta also released the metadata of SeamlessAlign, its multimodal translation dataset, totaling 470,000 hours of mined speech and text alignments.

SeamlessM4T builds on work that Meta and others have done over the years in the quest to create a universal translator. In 2022 I reported on No Language Left Behind (NLLB), a text-to-text machine translation model that supports 200 languages. It has since been integrated into Wikipedia as one of its translation providers. Meta had also demoed a Universal Speech Translator, the first direct speech-to-speech translation system for Hokkien, a language without a widely used writing system. As part of this work, Meta developed SpeechMatrix, the first large-scale multilingual speech-to-speech translation dataset. Meta also shared Massively Multilingual Speech, which provides automatic speech recognition, language identification, and speech synthesis technology across more than 1,100 languages.
SeamlessM4T draws on findings from all of these projects to deliver a multilingual and multimodal translation experience from a single model, trained on a wide range of spoken data sources and achieving state-of-the-art results. In this video, Paco Guzmán introduces the main features of SeamlessM4T and Sravya Popuri demonstrates "code switching", which happens when a multilingual speaker switches languages while speaking, using Hindi, Telugu and English. This showcases the model's automatic speech recognition. A second video shows translations between English and Russian in speech-to-speech, speech-to-text and text-to-speech modes, together with language identification.

This month the paper "Joint speech and text machine translation for up to 100 languages", authored by the SEAMLESS Communication Team, a multinational group of 68 researchers, was published in Nature. It reports that SeamlessM4T outperforms the existing state-of-the-art cascaded systems (pipelines that chain separate speech recognition, translation and speech synthesis models), achieving up to 8% and 23% higher BLEU (Bilingual Evaluation Understudy) scores in speech-to-text and speech-to-speech tasks respectively. Beyond translation quality, when tested for robustness the model is, on average, approximately 50% more resilient against background noise and speaker variations in speech-to-text tasks than the previous state-of-the-art systems. The paper also outlines the strategies the model incorporates to mitigate gender bias and toxicity, with the aim of making its translations more inclusive and safer.

One response to the paper states: "SEAMLESSM4T represents a step forward in building inclusive and accessible systems, offering an effective bridge between cultures and languages for application in both digital and face-to-face contexts." It is a conclusion with which I concur.

If you want to try the model for yourself, you can do so at https://seamless.metademolab.com/. You'll need a computer equipped with a camera and a microphone.
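BLEU, the metric behind the quality figures quoted from the paper, scores a machine translation by how many of its word n-grams (runs of 1 to 4 words) also appear in a human reference translation, with a penalty for output that is too short. The Python sketch below is a minimal illustration under simplifying assumptions (whitespace tokenization, a single sentence, a single reference, no smoothing); it is not the evaluation code used by the Seamless team, and the function names are hypothetical.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count every n-gram (as a tuple of tokens) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, reference, max_n=4):
    """Unsmoothed BLEU for one candidate sentence against one reference.

    Assumes whitespace tokenization and a single reference; real
    evaluations use standardized tokenization and corpus-level counts.
    """
    cand = candidate.split()
    ref = reference.split()
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_ngrams = ngram_counts(cand, n)
        ref_ngrams = ngram_counts(ref, n)
        # Modified precision: clip each candidate n-gram count at the
        # number of times that n-gram appears in the reference.
        matches = sum(min(count, ref_ngrams[g]) for g, count in cand_ngrams.items())
        total = sum(cand_ngrams.values())
        if total == 0 or matches == 0:
            # No n-grams of this order, or none matched: unsmoothed BLEU is 0.
            return 0.0
        log_precision_sum += math.log(matches / total)
    # Brevity penalty: scale down candidates that are not longer than the reference.
    c, r = len(cand), len(ref)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    # Geometric mean of the n-gram precisions, times the brevity penalty.
    return brevity_penalty * math.exp(log_precision_sum / max_n)


print(sentence_bleu("the cat sat on the mat", "the cat sat on a mat"))  # ≈ 0.537
```

The score runs from 0 to 1 and is often reported multiplied by 100. Published results such as those in the paper are typically computed over a whole test set with standard tooling such as sacreBLEU, rather than sentence by sentence as here.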

More Information

SEAMLESS Communication Team. Joint speech and text machine translation for up to 100 languages. Nature 637, 587–593 (2025).

Last Updated (Tuesday, 21 January 2025)