Lakera Finds Lack Of Confidence In AI Security

Written by Sue Gee

Thursday, 22 August 2024

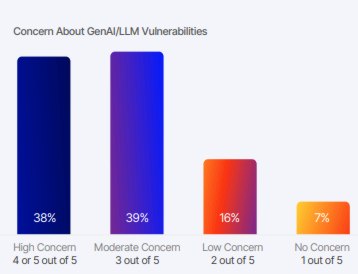

A survey from Lakera reveals that only 5% of cybersecurity experts have confidence in the security measures protecting their GenAI applications, despite the fact that 90% are actively using or exploring GenAI. Currently, only 22% have adopted AI-specific threat modeling to prepare for GenAI-specific threats.

Lakera is an AI-focused security company based in Zurich that was founded in 2021 to address the fact that traditional security tools were increasingly insufficient against the new GenAI threat landscape and that a more adaptive, AI-driven approach was required.

To accelerate secure adoption of AI, the company created Gandalf, an educational GenAI hacking platform that challenges users to trick the AI. Playing this game provides hands-on experience that demonstrates the importance of careful prompting and the potential risks associated with AI misuse. Disturbingly, 200,000 players out of over 1 million have successfully completed the game. Entering commands using their native language and a bit of creativity allowed these players to trick Gandalf’s level seven in only 45 minutes on average.

According to Lakera, this stark example underscores a troubling truth: everyone is now a potential hacker and businesses require a new approach to security for GenAI.

Commenting on this, David Haber, co-founder and CEO at Lakera, points out:

“With just a few well-crafted words, even a novice can manipulate AI systems, leading to unintended actions and data breaches. As businesses increasingly rely on GenAI to accelerate innovation and manage sensitive tasks, they unknowingly expose themselves to new vulnerabilities that traditional cybersecurity measures don’t address. The combination of high adoption and low preparedness may not be that surprising in an emerging area, but the stakes have never been higher.”

To gain a fuller picture of GenAI security, Lakera conducted its GenAI Security Readiness Report survey between May 15 and 22, 2024. It received 1,000 responses from individuals in a wide range of roles, such as developers, security analysts, and executive-level security roles like CISOs, 60% of whom have more than five years of cybersecurity experience.

The survey found that 42% of respondents are actively using and implementing GenAI and a further 45% are exploring its use, while only 9% have no current plans to adopt LLMs. Of the three barriers to LLM adoption, reliability and accuracy comes top (35%), closely followed by data privacy and security (34%), with lack of skilled personnel in third place (28%).

Looking into the perception of risk associated with the widespread adoption of AI tools, Lakera found that 38% of respondents rated their concern about GenAI/LLM vulnerabilities as "high" and a further 39% opted for "moderate". This highlights a widespread recognition of the escalating risks and the urgent need for comprehensive security frameworks.

Lakera intends to conduct this survey and produce the GenAI Security Readiness Report annually to track how preparedness changes as teams become more informed about the security risks of GenAI. You can download the 2024 report by providing your email address.

More Information

AI Adoption Surges, Security Preparedness Lags Behind

Related Articles

GitHub Reveals Regional Variation In AI Usage

Developers Wary Of The AI Tools They Use

Developers Like Code Assistants Even When They Are Incorrect


Last Updated ( Thursday, 22 August 2024 )