| Look Once to Hear - A Spy's Dream Come True |

| Written by Harry Fairhead |

| Sunday, 23 June 2024 |

|

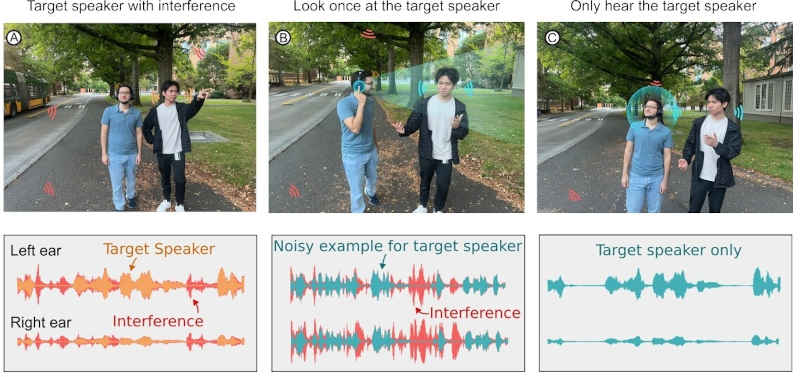

Deep learning has triumphed again. You can don a pair of headphones, look at a person talking and from then on the system will track the person so you can hear them as they move away or become swamped in noise. It's the ultimate cocktail party effect. A team from the Paul G. Allen Center for Computer Science & Engineering, University of Washington, has done something I personally would have assumed impossibly difficult. Past work proved that it was possible to track a speaker but, only if a clean high-quality recording of their voice was already available. Even this is a difficult task without AI. Signal processing algorithms aren't easy to implement and trying to extract the features necessary to identify a speaker is very difficult. But we don't have to - AI can do the job for us. The new approach makes use of AI to both find the important features of a specified speaker and to track them. A beam forming microphone array is used to pick up audio in the direction that the user is looking. As the user is looking at the target there should be no time lag between each ear and this can be used to select the target signal. A pretrained neural network extracts the characteristics of the target speaker and this is then fed into a second neural network that tracks the target without the assumption that the user is lookng directly at them.

This all sounds very computationally expensive, but the whole thing works in realtime running on an Orange Pi 5B - which is a very low-cost IoT device. The system takes 5.47ms to process an 8ms chunk of audio - which is remarkable and leaves space, or rather time, for extras. The speed was obtained by converting a PyTorch version to an ONNX model. That it works is evident in this video: This is a first step on an interesting road. As well as allowing communication in difficult situations and its potential to help hearing impaired people follow a conversation, it could be developed and integrated with larger systems. You could add a speech recognition network and produce a transcript. With some tweaking and improvement it would be a gift to any spook. What could be an easier way to bug a situation than to simply look at the person you want to eavesdrop on and then turn away and look completely disinterested. If you are attracted by trying to implement any of these, and more ideas, the good news is that the code is open source and available on GitHub.

More InformationLook Once to Hear: Target Speech Hearing with Noisy Examples The paper won Best Paper Honorable Mention at CHI 2024. Related ArticlesWhisper - Open Source Speech Recognition You Can Use Speech2Face - Give Me The Voice And I Will Give You The Face To be informed about new articles on I Programmer, sign up for our weekly newsletter, subscribe to the RSS feed and follow us on Twitter, Facebook or Linkedin.

Comments

or email your comment to: comments@i-programmer.info |

| Last Updated ( Sunday, 23 June 2024 ) |