Apache Spark Now Understands English

Written by Nikos Vaggalis

Monday, 10 July 2023

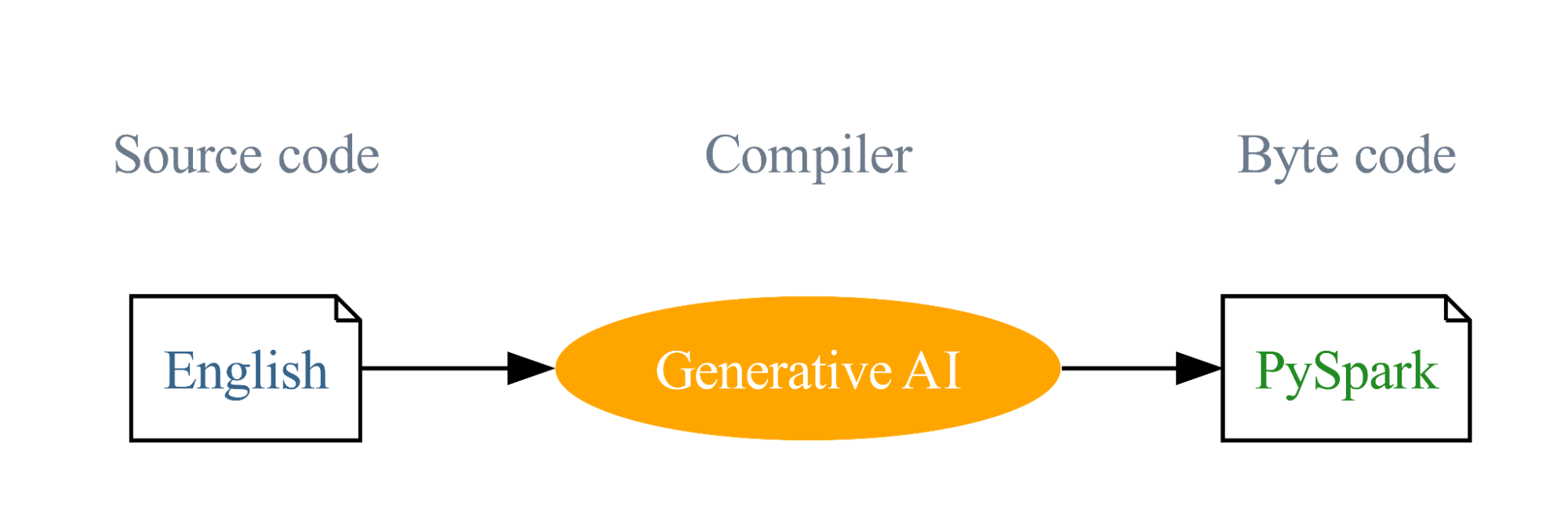

An SDK for Apache Spark has been released that takes English instructions and compiles them into PySpark objects, making Spark more user-friendly and accessible.

It was expected that at some point someone would use Generative AI to compile English to SQL or code. SQL, although easy to grasp, has been the barrier to management's direct interaction with the organization's data silos. In practice, the developer was the bridge between those two ends: they would receive management's request in English, or another natural language, and translate it into SQL or code in order to generate the necessary report. That role is now being played by AI, so that even those who do not understand SQL can quickly query business data and generate reports.

But this is where the developer had the edge: knowledge of the schema, of the internal details of the database and of the business logic, all of which went into constructing the query. An AI system without that knowledge was destined to fail. Nowadays, however, LLMs can be trained on your data and so gain the knowledge that was missing.

One such attempt to use natural language to access data is Alibaba's Chat2DB, a general-purpose SQL client and reporting tool for databases that integrates ChatGPT's AIGC (AI-generated content) capabilities and can convert natural language into SQL. It can also convert SQL into natural language and offer optimization suggestions for SQL, greatly enhancing developer efficiency. According to its website, "It is a tool for database developers in the AI era, and even non-SQL business operators in the future can use it to quickly query business data and generate reports."

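Now the same idea comes to Apache Spark. With the English SDK, a request such as a four-week moving average of sales by department becomes a single line:

```python
transformed_df = df.ai.transform('get 4 week moving average sales by dept')
```

The English SDK, with its understanding of Spark tables and DataFrames, handles the complexity and returns the resulting DataFrame directly and correctly.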
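For a sense of what that one-line request stands for, here is a plain-Python sketch of the computation itself, with no Spark involved. It is not the code the SDK generates. It assumes hypothetical rows of `(dept, week, sales)` with one row per department per week, and reads "4 week moving average" as the mean of the current week and up to three preceding weeks within each department. In PySpark the same frame would typically be expressed with `Window.partitionBy("dept").orderBy("week").rowsBetween(-3, 0)`.

```python
from collections import defaultdict


def moving_average_by_dept(rows, window=4):
    """Trailing moving average of sales per department.

    rows: iterable of (dept, week, sales) tuples (hypothetical schema).
    Returns (dept, week, sales, moving_avg) tuples sorted by dept, then week.
    """
    # Group rows by department, as partitionBy("dept") would.
    by_dept = defaultdict(list)
    for dept, week, sales in rows:
        by_dept[dept].append((week, sales))

    result = []
    for dept in sorted(by_dept):
        # Order each department's rows by week, as orderBy("week") would.
        ordered = sorted(by_dept[dept])
        for i, (week, sales) in enumerate(ordered):
            # Current row plus up to window-1 preceding rows, like rowsBetween(-3, 0).
            frame = ordered[max(0, i - window + 1): i + 1]
            avg = sum(s for _, s in frame) / len(frame)
            result.append((dept, week, sales, avg))
    return result


sales = [
    ("toys", 1, 100.0), ("toys", 2, 200.0), ("toys", 3, 300.0),
    ("toys", 4, 400.0), ("toys", 5, 500.0), ("books", 1, 50.0),
]
for row in moving_average_by_dept(sales):
    print(row)
```

Because the frame counts rows rather than calendar weeks, a department with a missing week would silently average over a longer span; that is exactly the kind of detail the SDK has to infer from the table's schema.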

The SDK offers the following key features:

Data Ingestion

You can ingest data via a search engine:

```python
auto_df = spark_ai.create_df("2022 USA national auto sales by brand")
```

Or you can ingest data via a URL:

```python
auto_df = spark_ai.create_df("https://www.carpro.com/blog/full-year-2022-national-auto-sales-by-brand")
```
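Since the same `create_df` call accepts either an English description or a URL, the two kinds of input have to be told apart somewhere. The article does not say how the SDK does this; the sketch below is a hypothetical, plain-Python illustration of one simple way to route the input, using only `urllib.parse` from the standard library. The function name `ingestion_source` is invented for illustration.

```python
from urllib.parse import urlparse


def ingestion_source(text: str) -> str:
    """Hypothetical router: is create_df-style input a URL or a search query?"""
    parsed = urlparse(text.strip())
    # Treat http(s) strings that carry a host name as pages to fetch directly.
    if parsed.scheme in ("http", "https") and parsed.netloc:
        return "url"
    # Anything else is treated as an English description to search for.
    return "search"


print(ingestion_source("2022 USA national auto sales by brand"))
print(ingestion_source("https://www.carpro.com/blog/full-year-2022-national-auto-sales-by-brand"))
```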

The SDK also adds methods to a DataFrame that transform, plot and explain it based on your English description.

DataFrame Transformation

```python
auto_top_growth_df = auto_df.ai.transform("brand with the highest growth")
auto_top_growth_df.show()
```

DataFrame Explanation

```python
auto_top_growth_df.ai.explain()
```

DataFrame Attribute Verification

```python
auto_top_growth_df.ai.verify("expect sales change percentage to be between -100 to 100")
```

User-Defined Functions (UDFs)

This feature simplifies UDF creation: you focus on defining the function while the AI takes care of the rest. You declare the function with the `@spark_ai.udf` decorator and leave the body for the AI to write:

```python
@spark_ai.udf
def convert_grades(grade_percent: float) -> str:
    """Convert the grade percent to a letter grade using standard cutoffs"""
    ...
```

The function can then be called from SQL:

```sql
SELECT student_id, convert_grades(grade_percent) FROM grade
```

Caching

The SDK incorporates caching to boost execution speed, make results reproducible and save cost.

Tooling is thus entering a new era powered by natural language. The potential is beyond imagination. Talking to robots, anyone? A dream come true?
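The caching feature is worth a concrete picture, because it accounts for both the speed and the reproducibility claims: a language model may answer the same prompt differently on each call, whereas a cache replays the first answer and skips the paid call. The article does not describe how the SDK's cache is built; the class below is a hypothetical in-memory sketch keyed by a SHA-256 hash of the prompt, with `fake_model` standing in for a real LLM call.

```python
import hashlib
import itertools


class PromptCache:
    """Hypothetical in-memory cache keyed by the exact prompt sent to a model."""

    def __init__(self, model_call):
        self._model_call = model_call  # function: prompt str -> response str
        self._store = {}
        self.misses = 0

    def _key(self, prompt: str) -> str:
        # Hash the prompt so arbitrarily long prompts give fixed-size keys.
        return hashlib.sha256(prompt.encode("utf-8")).hexdigest()

    def ask(self, prompt: str) -> str:
        key = self._key(prompt)
        if key not in self._store:
            # Only a cache miss pays for (and waits on) a model call.
            self.misses += 1
            self._store[key] = self._model_call(prompt)
        # A hit replays the stored answer, so repeated prompts give identical results.
        return self._store[key]


_counter = itertools.count()


def fake_model(prompt: str) -> str:
    # Stand-in for an LLM: returns a different string on every call.
    return f"generated code #{next(_counter)} for: {prompt}"


cache = PromptCache(fake_model)
first = cache.ask("brand with the highest growth")
second = cache.ask("brand with the highest growth")
print(first == second, cache.misses)
```

Even though `fake_model` never repeats itself, the second request returns the first answer and the model is called only once.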

More Information

Introducing English as the New Programming Language for Apache Spark

English SDK for Apache Spark (GitHub)


Last Updated (Monday, 10 July 2023)