Amazon's AI Wake-Up - Free Code Assistant

Written by Mike James

Wednesday, 19 April 2023

Amazon is a company that you might expect to be at the forefront of commercializing AI - after all, it has the computing power in AWS and the expertise in the form of Alexa. In a bit of a catch-up maneuver, Amazon is now offering a free code assistant, CodeWhisperer, and promises more. The interesting thing here is that by making it free Amazon is undercutting Microsoft's offering by $10 a month.

CodeWhisperer isn't a sudden development - a beta test program was launched back in June 2022, see Amazon Previews CodeWhisperer. It is now available for free to anyone wanting to try it out, not just AWS users.

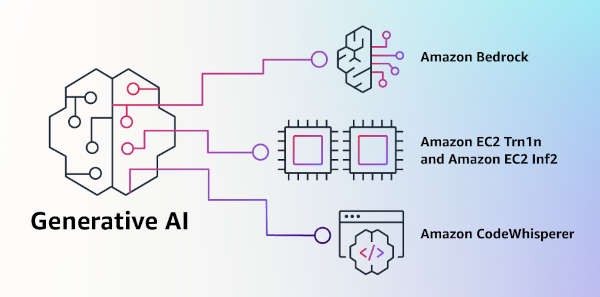

It works with Python, Java, JavaScript, TypeScript, and C# plus ten new languages, including Go, Kotlin, Rust, PHP, and SQL. CodeWhisperer can be accessed from IDEs such as VS Code, IntelliJ IDEA, AWS Cloud9, and many more via the AWS Toolkit IDE extensions. Notice that this includes AWS-specific services, so it might not just be programmers who are interested in it.

After a few days' use, the general impression seems to be that it isn't as useful as Microsoft's Copilot. This is probably due to the fact that Copilot is based on GPT-3.5 and hence trained on a wide range of material, whereas CodeWhisperer was trained specifically on code. CodeWhisperer was trained on billions of lines of code drawn from open source repositories, internal Amazon repositories, API documentation, and forums. Unfortunately there isn't any detailed documentation on how it was trained or on its architecture, so we have to guess.

Will the existence of CodeWhisperer force Microsoft to reduce the price of Copilot? When you compare like with like, Copilot for Business and CodeWhisperer Professional are both around $20 per user per month. This means that the price pressure on Microsoft is much less than it first appears. Also $10 per month, the minimum cost for Copilot, is cheap for an effective tool, even compared to zero for a tool that doesn't quite do the job.

At the same time as announcing the availability of CodeWhisperer, Amazon announced Bedrock, which it refers to as a Foundation Model (FM) service rather than an LLM (Large Language Model). Instead of being a single model, Bedrock seems to be a collection:

"Bedrock customers can choose from some of the most cutting-edge FMs available today. This includes the Jurassic-2 family of multilingual LLMs from AI21 Labs, which follow natural language instructions to generate text in Spanish, French, German, Portuguese, Italian, and Dutch. Claude, Anthropic’s LLM, can perform a wide variety of conversational and text processing tasks and is based on Anthropic’s extensive research into training honest and responsible AI systems. Bedrock also makes it easy to access Stability AI’s suite of text-to-image foundation models, including Stable Diffusion (the most popular of its kind), which is capable of generating unique, realistic, high-quality images, art, logos, and designs."

It also seems that fine-tuning is something that Amazon thinks it can make a killer feature:

"Customers simply point Bedrock at a few labeled examples in Amazon S3, and the service can fine-tune the model for a particular task without having to annotate large volumes of data (as few as 20 examples is enough)."

Amazon has also made available AWS instances that are customized to run its AI software:

"announcing today the general availability of Inf2 instances powered by AWS Inferentia2, which are optimized specifically for large-scale generative AI applications with models containing hundreds of billions of parameters. Inf2 instances deliver up to 4x higher throughput and up to 10x lower latency compared to the prior generation Inferentia-based instances. They also have ultra-high-speed connectivity between accelerators to support large-scale distributed inference. These capabilities drive up to 40% better inference price performance than other comparable Amazon EC2 instances and the lowest cost for inference in the cloud."

This could be Amazon's big money earner, given the recent quote from Amazon CEO Andy Jassy:

“Most companies want to use these large language models but the really good ones take billions of dollars to train and many years and most companies don’t want to go through that.”

It is true that the AI world has changed a lot since the introduction of foundation models. Previously a company or a researcher would consider the architecture needed (how many layers, what response function, the total number of neurons and so on) and would then collect the data and train the model. Today it looks more and more as if the pursuit of AI is the reuse and fine-tuning of pretrained models - and this is a very big change because it puts control into the hands of the few companies with the billions to train the few models that we will all use.
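To make the shift from training a model from scratch to fine-tuning a pretrained one more concrete, here is a minimal sketch in plain Python. It is not how Bedrock or any Amazon service works, which is undocumented. The function `pretrained_features` is a hypothetical stand-in for a frozen foundation model, the task (deciding whether |x| > 1) is invented for illustration, and the only part that is trained is a tiny logistic-regression "head" fitted to twenty labeled examples.

```python
import math


def pretrained_features(x):
    """Hypothetical stand-in for a frozen, pretrained model.

    A real foundation model has billions of learned parameters; here the
    "model" is just a fixed feature map that is never updated.
    """
    return [x, x * x]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(examples, epochs=500, lr=0.1):
    """Train only a small logistic-regression head on the frozen features."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, label in examples:
            feats = pretrained_features(x)
            p = sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)
            error = p - label  # gradient of the log loss w.r.t. the logit
            weights = [w - lr * error * f for w, f in zip(weights, feats)]
            bias -= lr * error
    return weights, bias


def predict(weights, bias, x):
    feats = pretrained_features(x)
    score = sum(w * f for w, f in zip(weights, feats)) + bias
    return 1 if score > 0 else 0


def accuracy(weights, bias, examples):
    hits = sum(1 for x, label in examples if predict(weights, bias, x) == label)
    return hits / len(examples)


# Twenty labeled examples for a toy task: label 1 when |x| > 1, else 0.
INSIDE = [-0.5, -0.4, -0.3, -0.2, -0.1, 0.1, 0.2, 0.3, 0.4, 0.5]
OUTSIDE = [-1.9, -1.8, -1.7, -1.6, -1.5, 1.5, 1.6, 1.7, 1.8, 1.9]
EXAMPLES = [(x, 0) for x in INSIDE] + [(x, 1) for x in OUTSIDE]

if __name__ == "__main__":
    weights, bias = fine_tune_head(EXAMPLES)
    print("training accuracy:", accuracy(weights, bias, EXAMPLES))
```

The point of the sketch is the division of labor: nobody chooses layers or neuron counts, the expensive part is taken as given, and the user supplies only a handful of labeled examples to adapt it.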

More Information

Announcing New Tools for Building with Generative AI on AWS

Related Articles

Announcing Copilot For Business

Chat GPT 4 - Still Not Telling The Whole Truth


Last Updated ( Wednesday, 19 April 2023 )