| Self-Debugging Is Possible |

| Written by Mike James |

| Wednesday, 12 April 2023 |


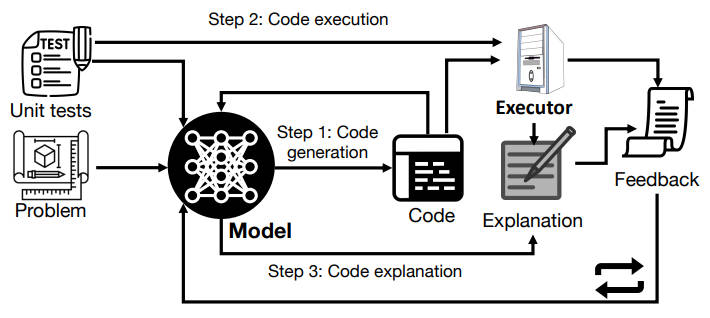

Self-debugging seems to be not only possible but very effective - and yes, this makes me worry all the more for the future of programming. It's not that it's a predetermined bad future. It is more that it is an increasingly unknown future.

I've seen large language models like GPT-3 create programs and it's impressive, but you can't help but think that it's just churning out bits of code it has seen during its training and so there's not too much to worry about. When it comes to SELF-DEBUGGING, an application of a large language model from researchers at Google and UC Berkeley that debugs the code it generates, things get a little more spooky.

The idea is to get the model to explain the code and use this to fix it. This, in a human context, is often called rubber duck debugging because the programmer is encouraged to describe how the program works to a rubber duck - personally I use a wastepaper bin. In this case the explanations that the model produces and the results of running the code are used as feedback to the model to produce a new, corrected, program. Previous attempts at the same task used human feedback on the code to get the model to make corrections, but this work demonstrates that the model can provide its own feedback and hence can debug by itself, without outside help.
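To make the loop concrete, here is a minimal sketch in Python. It is not the researchers' implementation: `ScriptedModel`, `run_unit_tests`, `self_debug` and the prompt wording are all assumptions made for illustration, and the "model" simply replays canned replies where a real large language model would be called.

```python
from typing import Callable, List, Sequence, Tuple

TestCase = Tuple[tuple, object]  # (positional arguments, expected result)


def run_unit_tests(source: str, func_name: str, tests: Sequence[TestCase]) -> List[str]:
    """Execute candidate source code and return one message per failed test."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # acceptable for a toy; sandbox real model output
        func = namespace[func_name]
    except Exception as exc:
        return [f"Could not load {func_name}: {type(exc).__name__}: {exc}"]
    failures = []
    for args, expected in tests:
        call = f"{func_name}({', '.join(map(repr, args))})"
        try:
            actual = func(*args)
        except Exception as exc:
            failures.append(f"Failed: assert {call} == {expected!r}\n"
                            f"Actual Result: {type(exc).__name__}: {exc}")
            continue
        if actual != expected:
            failures.append(f"Failed: assert {call} == {expected!r}\n"
                            f"Actual Result: {actual!r}")
    return failures


def self_debug(model: Callable[[str], str], task: str, func_name: str,
               tests: Sequence[TestCase], max_rounds: int = 3) -> Tuple[str, bool]:
    """Generate code, then feed the model's own explanation and the test
    results back to it until the tests pass or the rounds run out."""
    code = model(task)
    for _ in range(max_rounds):
        failures = run_unit_tests(code, func_name, tests)
        if not failures:
            return code, True
        explanation = model("Explain this code line by line:\n" + code)
        code = model(f"{task}\n\nCode:\n{code}\n\nExplanation:\n{explanation}\n\n"
                     "Unit test feedback:\n" + "\n".join(failures) +
                     "\n\nCorrect the code.")
    return code, not run_unit_tests(code, func_name, tests)


class ScriptedModel:
    """Hypothetical stand-in for a language model: replays canned replies."""

    def __init__(self, replies: Sequence[str]) -> None:
        self.replies = list(replies)
        self.prompts: List[str] = []

    def __call__(self, prompt: str) -> str:
        self.prompts.append(prompt)
        return self.replies.pop(0)


model = ScriptedModel([
    "def add(a, b):\n    return a - b\n",                           # first attempt (buggy)
    "The function returns a minus b, but the task asks for a sum.",  # explanation
    "def add(a, b):\n    return a + b\n",                           # corrected attempt
])
fixed_code, passed = self_debug(
    model, "Write add(a, b) that returns the sum.", "add", [((2, 3), 5)])
print(passed)
```

Replacing `ScriptedModel` with a call to a real model is the only change needed to experiment, though the prompt format used here is a guess and not the one in the paper.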

The model was tested with different kinds of feedback - simple feedback that just said whether the program worked or not, unit test results, and a generated code explanation. The most effective was unit test results combined with code explanations. Here is an extract from a task where a Python translation of a C++ program fails a unit test:

Below are C++ programs with incorrect Python translations. Explain the original code, then explain the translations line by line and correct them using the provided feedback.

[c++]
unsigned int program_for_factorial_of_a_number (
[/c++]

[explanation]
The code is an implementation of calculating the factorial of a number.

if ( n == 0 ) return 1;

The function is defined recursively. When the given number is equal to 0, the result of the factorial is 1.

return n * program_for_factorial_of_a_number ( n - 1 );

Otherwise, the result of the factorial is the product of the given number and the (given number minus 1) factorial.
[/explanation]

[python]
def program_for_factorial_of_a_number(n): return (1 if ((n == 1)) else (n *
[/python]

Explain the Python translation line by line.

[explanation]
The code is an implementation of calculating the factorial of a number.

return (1 if ((n == 1)) else (n * program_for_factorial_of_a_number((n - 1)) ))

The function is defined recursively. When the given number is equal to 1, the result of the factorial is 1. Otherwise, the result of the factorial is the product of the given number and the (given number minus 1) factorial.
[/explanation]

The Python translation does not do the same thing as the C++ code. These are the results of one failed unit test that tests whether the Python translation’s outputs match the C++ program’s outputs:

Failed: assert program_for_factorial_of_a_number(0) == 1

Actual Result: RecursionError: maximum recursion depth exceeded in comparison

Correct the translation.

[python]
def program_for_factorial_of_a_number(n):
[/python]

What I find most disturbing is the way the correction doesn't mimic the C++ program. The paper has a lot more examples like this and they are all slightly disturbing.

The conclusion of the paper is also worth reading:

"In particular, we demonstrate that SELF-DEBUGGING can teach the large language model to perform rubber duck debugging; i.e., without any feedback on the code correctness or error messages, the model is able to identify its mistakes by explaining the generated code in natural language. SELF-DEBUGGING achieves the state-of-the-art performance on several code generation benchmarks, including the Spider dataset for text-to-SQL generation, TransCoder for C++-to-Python translation, and MBPP for text-to-Python generation."

While all this academic work is going on, programmers are experimenting. A GitHub user, BioBootloader, has posted a project called Wolverine that uses GPT-4 to fix Python programs. It seems to work in a similar way to SELF-DEBUGGING in that it explains the program and then creates a new iteration until all the bugs are out.
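Going back to the factorial extract, the failed unit test is easy to reproduce. In the sketch below, `buggy_factorial` is the Python translation quoted in the explanation part of the prompt, under a shorter name. `direct_factorial` and `unit_test_feedback` are hypothetical names introduced here. `direct_factorial` is simply a literal translation of the C++ base case (`n == 0`); it is not the model's correction, which the extract does not show in full.

```python
def buggy_factorial(n):
    # Translation quoted in the extract: the base case tests n == 1,
    # so a call with n == 0 never reaches it and recurses without end.
    return 1 if n == 1 else n * buggy_factorial(n - 1)


def direct_factorial(n):
    # Assumption: a literal translation of the C++ original (base case n == 0).
    if n == 0:
        return 1
    return n * direct_factorial(n - 1)


def unit_test_feedback(func, arg, expected):
    """Return None if the test passes, else a message styled like the extract."""
    call = f"{func.__name__}({arg!r})"
    try:
        actual = func(arg)
    except RecursionError as exc:
        return (f"Failed: assert {call} == {expected!r}\n"
                f"Actual Result: RecursionError: {exc}")
    if actual == expected:
        return None
    return f"Failed: assert {call} == {expected!r}\nActual Result: {actual!r}"


print(unit_test_feedback(buggy_factorial, 0, 1))   # a "Failed: ..." message
print(unit_test_feedback(direct_factorial, 0, 1))  # None, the test passes
```

Note that the buggy version still gives the right answer for every input from 1 upwards, which is why a unit test on the edge case `0` is what exposes the problem.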

There isn't as much information on this project, but you can always try it out for yourself. Could feeding explanations back to fix problems with other model-generated content be a general solution to the tendency to hallucinate or make things up?

More Information

Teaching Large Language Models to Self-Debug, Xinyun Chen, Maxwell Lin, Nathanael Schärli, Denny Zhou (Google Research and UC Berkeley)


| Last Updated ( Wednesday, 12 April 2023 ) |