Gato And Artificial General Intelligence

Written by Mike James

Wednesday, 18 May 2022

DeepMind has set the cat among the pigeons - again. Gato is a transformer model trained on a range of different subject areas that claims to be a "multi-modal" solution, i.e. it's an AI that can do more than one thing well. This isn't in dispute, but the idea that this is the solution to Artificial General Intelligence is...

I was recently contemplating revising my books on AI, written before the neural network revolution, and I decided that it wasn't worth it. Modern AI has only one hero - the neural network - and while the other "stuff" is interesting as background, it isn't where the action is any more. It can solve some problems in useful ways, but AI it really isn't. When I hear regression being listed as machine learning, I still think that it is stretching the point.

Modern AI is almost completely dominated by the neural network, and yet it is far from being accepted, even by its leading practitioners, as the solution to AI and certainly not to general intelligence. Put simply, it is too slow to train, it forgets what it learned when you try to teach it something else, and it makes really, really stupid mistakes of a kind that no human would make.

The first breakthrough in AI wasn't anything new. We just discovered that neural networks work much better than anyone could possibly have expected if you make them big and deep. Even the real father of AI, Geoffrey Hinton, was taken off guard by their success. After his pioneering work on neural networks, he went off to investigate other ideas, notably the Boltzmann machine, which proved very hard to train, only to be pleased and amazed when his original best bet turned out to work - we just had to make it bigger and feed it more data.

The latest breakthrough is the transformer model. Before this, to take account of temporal correlations, we needed to use recurrent neural networks, which were much more difficult to train and much less successful.
When they worked they worked wonders, but most of the time it was better to avoid such an architecture. Then the idea of attention was invented, and a number of language models making use of it started to change the way we think about neural networks. So much so that they were renamed "foundation models" and are taken by one faction to be the future of AI and by the other faction to be nothing but a magic trick with no substance.

The problem with language models such as GPT-3 is that they generate complex language responses to inputs that seem logical and reasoned, and yet we can view them as nothing more than a capture of the statistical regularities of the language in question. This means that the anti-faction can say that they are a trick with no intelligence behind what they do, and the pro-faction can just ignore the critics and get on with computing.
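To make the idea of attention a little more concrete, here is a minimal sketch of scaled dot-product attention, the form used inside transformer models. It is a plain-Python teaching version under simplifying assumptions (a single head, no learned projection matrices, no masking) and is not the code used by GPT-3 or Gato; the function names `softmax` and `attention` are hypothetical.

```python
import math

def softmax(xs):
    # Hypothetical helper: turn raw scores into weights that sum to 1.
    # Subtracting the max first avoids overflow in math.exp.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Simplified scaled dot-product attention (single head, no projections).

    Each output row is a weighted average of the value vectors, where the
    weights measure how well the query matches each key.
    """
    d = len(keys[0])  # key dimension, used to scale the dot products
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to the attention weights
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs
```

Because every position can attend to every other position in a single step, there is no recurrence to unroll through time, which is one reason transformers proved easier to train than recurrent networks.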

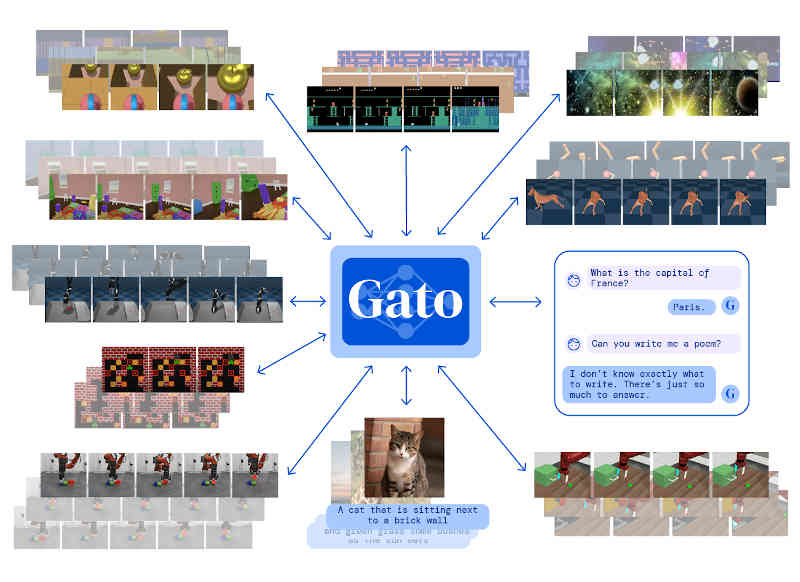

Now we have Gato, which uses the same basic architecture but can do more than just language modelling. Gato can play games, stack blocks with a robot arm, caption images, chat and so on. Notice that this isn't a set of different networks, each one trained on the example data and with a different set of weights. This is a single, fully-trained network with one set of weights that can be used for many different "intelligent" applications.

This is both astonishing and disturbing. The reason is that this is a neural network with a set of weights, admittedly a large set of weights, which implements a nonlinear function that encapsulates all of this "intelligence". There is no logic, no reason and certainly no thought process involved. You give it the numerical inputs, it computes in a very basic way and gives you the outputs. It's amazingly simple and yet amazingly huge.

This would be just more run-of-the-mill news of another AI breakthrough, except... Now we have Nando de Freitas and other DeepMind researchers claiming, or hinting, that this is it:

"My opinion: It’s all about scale now! The Game is Over! It’s about making these models bigger, safer, compute efficient, faster at sampling, smarter memory, more modalities, INNOVATIVE DATA, on/offline, …"

In other words, this is all we need for AI in the future. If you think deep neural networks were big, you ain't seen nuttin yet. All we need is more neurons and more data.

The anti-faction is, of course, up in arms about the stupidity of the claim - but we have been here before, and this is likely to continue right up to the day that AI is accepted as being equal to I. I'm not claiming that this is going to happen any time soon - the scaling that is required is big, and the human brain is so much bigger than Gato (Gato has around 1.2 billion parameters, while the brain has something like 100 trillion synapses). The naysayers are stuck with the problem that, no matter what AI uses, they would claim that it wasn't enough because it wasn't "thinking" or wasn't "reasoning" or just wasn't human.
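The point that all of this "intelligence" is just a nonlinear function of the inputs and one fixed set of weights can be illustrated with a toy network. This is a minimal sketch, assuming a tiny two-layer network with made-up weights; the names `W1`, `b1`, `W2`, `b2`, `dense` and `forward` are hypothetical. Gato itself is a far larger transformer, but its forward pass is likewise built from multiply, add and apply-a-nonlinearity steps.

```python
import math

# Hypothetical toy weights: 3 inputs -> 2 hidden units -> 1 output.
# A large model has vastly more of these numbers, but the "knowledge"
# still lives entirely in the weights.
W1 = [[0.5, -0.2, 0.1],
      [0.3, 0.8, -0.5]]
b1 = [0.0, 0.1]
W2 = [[1.0, -1.0]]
b2 = [0.2]

def dense(W, b, x):
    # Matrix-vector product plus bias: out[i] = sum_j W[i][j] * x[j] + b[i]
    return [sum(wij * xj for wij, xj in zip(row, x)) + bi
            for row, bi in zip(W, b)]

def forward(x):
    # First layer followed by a tanh nonlinearity...
    hidden = [math.tanh(h) for h in dense(W1, b1, x)]
    # ...then a linear output layer. No rules, no search, just arithmetic.
    return dense(W2, b2, hidden)
```

Calling `forward` with the same input always returns the same output, and nothing in the computation resembles a rule, a search or a chain of reasoning. In this picture, scaling up means more and bigger weight matrices rather than a different kind of computation.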
It is the first paradox of AI that if you understand how it works, then it isn't intelligent. The transformer models that we are seeing doing such amazing things are not the final answer. They have to be so much bigger, and there is most likely a role for different models working together as a system - but overall it seems likely that Gato, writ much larger and with many tweaks, is the road to AGI.

Last Updated (Wednesday, 18 May 2022)