From Ears To Faces

Written by Mike James

Sunday, 07 June 2020


New research gives a whole new meaning to the phrase "lend me your ears". The results reveal that, given an image of a person's ear, a neural network can reconstruct that person's face with amazing accuracy.

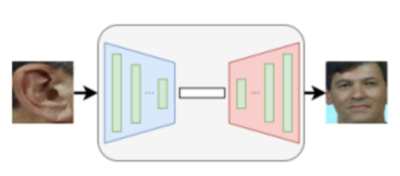

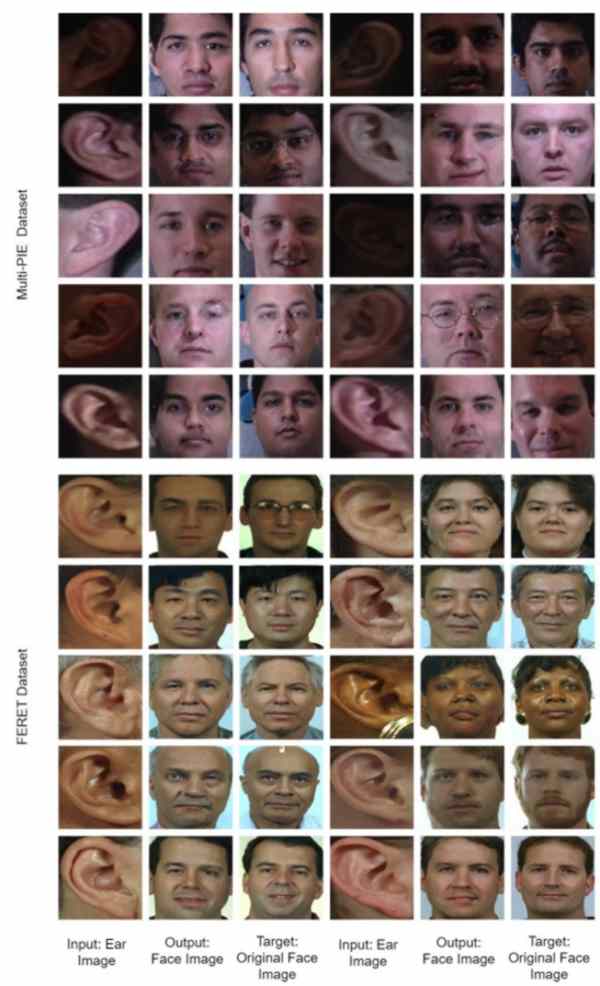

OK, at one level this is yet another example of what can be done with a large image database and a GAN-based model. But who would have thought that, on the basis of just an ear, it would be possible to produce a "fake face" that closely resembles the real face? Take a look for yourself at the images above.

The research comes from Dogucan Yaman, Fevziye Irem Eyiokur and Hazım Kemal Ekenel, who were students in the Department of Computer Engineering of Istanbul Technical University when they first developed their fascination with ears. Their first paper, "Domain Adaptation for Ear Recognition Using Deep Convolutional Neural Networks", published in IET Biometrics in 2017, set out to investigate "the unconstrained ear recognition problem" and led them to collect the Multi-PIE ear dataset from the Multi-PIE face dataset. They used this dataset to analyze how ear image quality, for example illumination and aspect ratio, affects classification performance, which presumably is why some of the ear images in the first five rows of the set of photos above are of low quality.

In their more recent research, the goal was to generate a frontal face image of a subject given his/her ear image as the input. Having formulated the problem as a paired image-to-image translation task, they collected datasets of ear and face image pairs from the Multi-PIE and FERET datasets and used them to train GAN-based models.

The title of the paper, Ear2Face, is a reference to recent research from MIT CSAIL in which a neural network, Speech2Face, predicts what someone looks like from a voice sample; see Speech2Face - Give Me The Voice And I Will Give You The Face for our report on that impressive deep learning breakthrough.

One of the great things about Machine Learning is how we are being allowed to participate. Although not much of the material is available yet, Dogucan Yaman has set up a GitHub repo for Ear2Face where the experimental setup and code will be made available.
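To make "paired image-to-image translation with a GAN" a little more concrete, here is a minimal sketch of the kind of training objective such models commonly use. It follows the well-known pix2pix recipe (a conditional GAN loss plus an L1 reconstruction loss, weighted by 100 in the pix2pix paper), which is an assumption here: the article does not say which losses Ear2Face actually uses. Images are flattened to plain lists of floats, the discriminator outputs are probabilities passed in directly, and all function names are hypothetical.

```python
import math
from typing import Sequence

EPS = 1e-7  # keeps log() away from exactly 0 and 1


def bce(prob: float, target: float) -> float:
    """Binary cross-entropy for a single discriminator probability."""
    p = min(max(prob, EPS), 1.0 - EPS)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute pixel difference between two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def generator_loss(d_fake: float, fake_face: Sequence[float],
                   real_face: Sequence[float], lam: float = 100.0) -> float:
    """Generator objective for one (ear, face) training pair.

    d_fake is the discriminator's probability that (ear, G(ear)) is a real
    pair. The adversarial term pushes it toward 1; the L1 term keeps the
    generated face close to the real face paired with this ear.
    lam = 100.0 is an assumed weight borrowed from pix2pix.
    """
    adversarial = bce(d_fake, 1.0)
    reconstruction = l1(fake_face, real_face)
    return adversarial + lam * reconstruction


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Score (ear, real face) pairs as 1 and (ear, generated face) pairs as 0."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


if __name__ == "__main__":
    # Toy 4-pixel "images" purely for illustration.
    real = [0.2, 0.5, 0.9, 0.4]
    fake = [0.25, 0.45, 0.8, 0.4]
    print("G loss:", generator_loss(d_fake=0.3, fake_face=fake, real_face=real))
    print("D loss:", discriminator_loss(d_real=0.8, d_fake=0.3))
```

The pairing is what makes the L1 term possible: because every training ear comes with the same subject's real frontal face, the generator can be penalized pixel by pixel, while the adversarial term discourages the blurry averages a pure L1 loss tends to produce. The authors' own architecture and training details are expected to appear in the Ear2Face GitHub repo.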
It also has a less technical explanation of the basis for deep biometric modality mapping. Even so, I find it amazing that so much information is present in just an ear. While it is obvious that our genes determine our face, and that those same genes determine what our ears look like, who would have thought that there was so much correlation between the two outcomes? It is also amazing that the variation in ears is sufficient to determine a face. At a guess, I would suppose that ears occupy a much lower-dimensional feature space than faces. But it seems that, as I said at the beginning, if you lend me your ears I can show you your face.

More Information

Ear2Face: Deep Biometric Modality Mapping, Dogucan Yaman, Fevziye Irem Eyiokur, Hazım Kemal Ekenel



Last Updated (Sunday, 07 June 2020)