Too Good To Miss: Our Robot Overlords Attempt To Master Jenga

Written by David Conrad

Friday, 27 December 2019


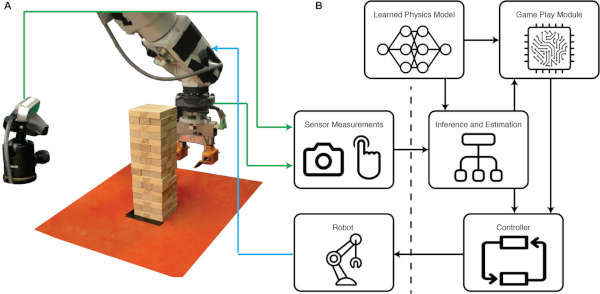

There are some news items from the past year that deserve a second chance. For any human who has been injured by falling Jenga blocks, the news that a robot has developed enough touch and vision to play the game is bitter-sweet. Jenga is the game in which you pull blocks out of a tower and place each extracted block on top, making the tower sparser and taller. If you have tried it, you know it isn't easy, and it gets harder as more blocks are removed. Building a robot to do the job is tough because Jenga is not just a game of logic and judgment; it is also about learning to move a block without dislodging the others. It's a physical game. A team from MIT has put together a special robot arm and the software needed to make it play Jenga. At the start of the team's video, the voice-over describes the game as "requiring a steady hand and nerves of steel". Well, the robot doesn't have to worry about injuries from falling blocks, so it just needs the steady hand.

What is interesting is that the robot learned to play after seeing only 300 towers. It doesn't use the standard deep neural network approach, which the team tried out in a simulator and found to need orders of magnitude more data. There is a neural network involved, but it is a Bayesian neural network, and there is a lot of hand-built structure in the model. Essentially, the robot learned that if a block was hard to push, then extracting it was probably going to bring the tower down. This isn't trivial, because the robot needs, among other things, a concept of what "hard to move" means. At the moment its performance isn't good enough to challenge a human Jenga champion, but it's early days. Soon after AlphaGo beat the best players at Go, we reflected and took comfort in the fact that robots and AI couldn't stack stones like a human. With this sort of approach, perhaps they can.

More Information

See, feel, act: Hierarchical learning for complex manipulation skills with multisensory fusion, N. Fazeli, M. Oller, J. Wu, Z. Wu, J. B. Tenenbaum and A. Rodriguez

Related Articles

- We May Have Lost At Go, But We Still Stack Stones Better
- AlphaGo Revealed As Mystery Player On Winning Streak
- Why AlphaGo Changes Everything
- Robots Learn To Navigate Pedestrians


Last Updated (Friday, 27 December 2019)