Baidu Makes Breakthrough in Simultaneous Translation

Written by Sue Gee

Thursday, 25 October 2018


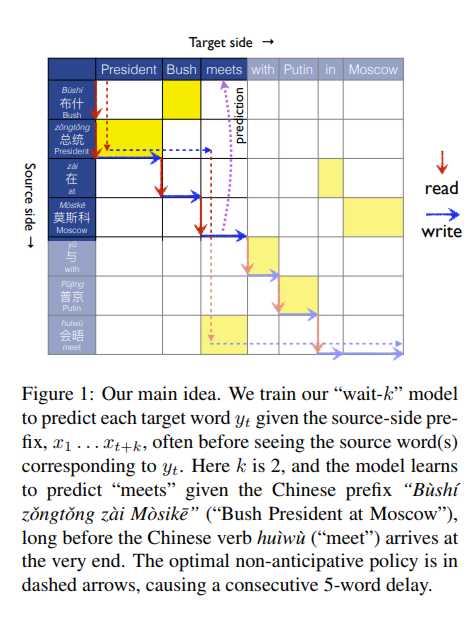

Baidu Research has announced STACL (Simultaneous Translation with Integrated Anticipation and Controllable Latency), an automated system that can produce high-quality translation between two languages concurrently with the speaker.

One area in which artificial intelligence is already making an impactful difference is translating from one spoken language to another. Earlier this year we reported that Microsoft Research Achieves Human Parity For Chinese English Translation, a breakthrough in the fluency and accuracy of machine translation. Now Baidu Research has made advances in simultaneous translation and has made demos available on GitHub.

The abstract of the paper, available on arXiv and co-authored by Mingbo Ma, Liang Huang, Hao Xiong, Kaibo Liu, Chuanqiang Zhang, Zhongjun He, Hairong Liu, Xing Li and Haifeng Wang, states:

Simultaneous translation, which translates sentences before they are finished, is useful in many scenarios but is notoriously difficult due to word-order differences and simultaneity requirements. We introduce a very simple yet surprisingly effective "wait-k" model [...]

This figure from the paper shows how the wait-k model works:

This video shows this sentence being translated with a wait of 5 words:

Referring to this example, the Baidu Research blog explains:

We tackled this challenge using an idea inspired by human simultaneous interpreters, who routinely anticipate or predict materials that the speaker is about to cover in a few seconds into the future. However, different from human interpreters, our model does not predict the source language words in the speaker’s speech but instead directly predict the target language words in the translation, and more importantly, it seamlessly fuses translation and anticipation in a single “wait-k” model. In this model the translation is always k words behind the speaker’s speech to allow some context for prediction. We train our model to use the available prefix of the source sentence at each step (along with the translation so far) to decide the next word in translation. In the aforementioned example, given the Chinese prefix Bùshí zǒngtǒng zài Mòsīkē (“Bush President in Moscow”) and the English translation so far “President Bush” which is k=2 words behind Chinese, our system accurately predicts that the next translation word must be “meet” because Bush is likely "meeting" someone (e.g., Putin) in Moscow, long before the Chinese verb appears. Just as human interpreters need to get familiar with the speaker’s topic and style beforehand, our model also needs to be trained from vast amount of training data which have similar sentence structures in order to anticipate with a reasonable accuracy.

The blog post explains that STACL is flexible in its latency-quality trade-off: the user can specify a delay of between one and five words. This means a short delay can accommodate closely related languages such as French and Spanish, where even word-by-word translation works very well. For distant languages such as English and Chinese, and for languages with different word order such as English and German, a longer delay gives better results.
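The wait-k idea boils down to a simple read/write schedule. As we understand the STACL paper, it formalizes the policy as g(t) = min(k + t − 1, |x|): when the model emits the t-th target word, it has seen the first g(t) of the |x| source words. The Python sketch below is a hypothetical illustration of that schedule only. The function names `words_read` and `read_write_actions` are ours, the neural translation model itself is left out, and fixing the target length in advance is a simplifying assumption.

```python
from typing import List


def words_read(t: int, k: int, src_len: int) -> int:
    """g(t) = min(k + t - 1, |x|): how many source words a wait-k decoder
    has read when it emits target word t (t is 1-based)."""
    if k < 1 or t < 1:
        raise ValueError("k and t must both be at least 1")
    return min(k + t - 1, src_len)


def read_write_actions(k: int, src_len: int, tgt_len: int) -> List[str]:
    """Interleaved READ/WRITE actions implied by the wait-k schedule.

    tgt_len is fixed here for illustration only (an assumption); a real
    decoder decides for itself when the translation is finished.
    """
    actions: List[str] = []
    read = 0
    for t in range(1, tgt_len + 1):
        # Read source words until the prefix needed for target word t is available.
        while read < words_read(t, k, src_len):
            actions.append("READ")
            read += 1
        actions.append("WRITE")  # emit target word t
    return actions


if __name__ == "__main__":
    # The blog's example with k = 2: "President Bush" has been written,
    # so the next English word is target word t = 3.
    prefix = ["Bùshí", "zǒngtǒng", "zài", "Mòsīkē"]  # "Bush President in Moscow"
    n = words_read(t=3, k=2, src_len=10)  # 10 is a hypothetical sentence length
    print(n, " ".join(prefix[:n]))  # 4 Bùshí zǒngtǒng zài Mòsīkē

    # Wait-3 vs wait-5 on a hypothetical 6-word source and 6-word target.
    for k in (3, 5):
        print(f"wait-{k}:", " ".join(read_write_actions(k, 6, 6)))
    # wait-3: READ READ READ WRITE READ WRITE READ WRITE READ WRITE WRITE WRITE
    # wait-5: READ READ READ READ READ WRITE READ WRITE WRITE WRITE WRITE WRITE
```

For the blog's example, the third English word ("meet") is chosen after exactly the four Chinese words Bùshí zǒngtǒng zài Mòsīkē, matching the description of a translation that runs k=2 words behind. Comparing the two action sequences shows what the latency setting buys: with k=5 the first English word cannot appear until five source words have been heard, two words later than with k=3, in exchange for more context on which to base it. Once the source sentence ends, both schedules simply write out the remaining words.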
This example shows the difference between simultaneous machine translation with a wait of 5 words and with a wait of 3 words:

The conclusion of the blog post suggests that human simultaneous interpreters need not expect to be put out of work by STACL any time soon:

Even with the latest advancement, we are fully aware of the many limitations of a simultaneous machine translation system. The release of STACL is not intended to replace human interpreters, who will continue to be depended upon for their professional services for many years to come, but rather to make simultaneous translation more accessible.

More Information

STACL: Simultaneous Translation with Integrated Anticipation and Controllable Latency

Related Articles

Microsoft Research Achieves Human Parity For Chinese English Translation

Transcription On Par With Human Accuracy

Neural Networks Applied To Machine Translation

Speech Recognition Breakthrough

Skype Translator Cracks Language Barrier


Last Updated (Thursday, 25 October 2018)