Playing a Piano and Composing Reduced to Eight Keys

Written by Mike James

Saturday, 20 October 2018

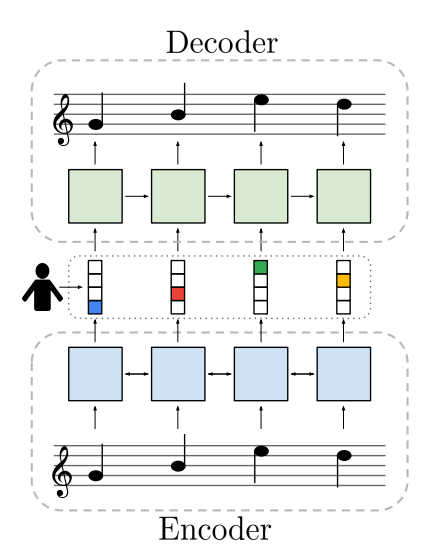

If you have harboured a dream of just sitting down in front of a piano and playing something amazing impromptu, then your dream might become a reality - and without all the hard work of learning music theory. AI gets creative in yet another way.

The idea seems to have originated in the practice of playing air guitar:

"...performers strum fictitious strings with rhythmical coherence and even move their hands up and down an imaginary fretboard in correspondence with melodic contours, i.e. rising and falling movement in the melody. This suggests a pair of attributes which may function as an effective communication protocol between non-musicians and generative music systems: 1) rhythm, and 2) melodic contours. In addition to air guitar, rhythm games such as Guitar Hero also make use of these two attributes"

The idea is to convert such general "high level" musical specifications into actual notes on a piano, and the suggestion is that just eight buttons or keys might be enough.

But wait! A piano has 88 keys (usually) and hence represents an 88-dimensional Boolean space corresponding to which keys are pressed or not. Can you really expect to map an 8-dimensional button space to an 88-dimensional space? The answer, according to Chris Donahue, Ian Simon and Sander Dieleman, researchers from Google AI and DeepMind, seems to be yes, and this is where music theory comes in. Not all combinations of keys are ever used together; the majority sound discordant. In other words, Western music uses a particular subspace of the 88-dimensional space. Of course, it is even more complicated than this because which note follows another and which notes make pleasing chords are also restricted. So in principle it should be possible to build a lower-dimensional input device that outputs something that sounds like music.

At this point you should be thinking "neural network" but there is a problem - we don't have any examples of an 8-button keyboard being used to play music.
This means we haven't got a training set and we need to do something different. Another way to use a neural network is to use it to implement an autoencoder. All an autoencoder learns is to reproduce its inputs. What is the use of this, you might ask? The answer is that if you use fewer neurons in the autoencoder than are needed to simply commit the inputs to memory, the result is a structured dimensional reduction, which is what we are looking for.

This is the basic principle of the method, but in this case we also need the relationships between successive notes and this implies that we need a recurrent neural network - something that is much more difficult to train. In this case two recurrent networks were used to form an autoencoder. The first, the encoder, takes standard 88-note piano sequences and maps them onto eight outputs; the second, the decoder, takes the eight outputs of the encoder and maps them back to the same 88-note sequence. The training is simply to learn to correctly reproduce the input music, but notice that all of the data is passing through the 8-button representation. Take away the first neural network and you can press the buttons manually and hopefully produce some new music.
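To make the bottleneck idea concrete, here is a minimal sketch of an autoencoder that squeezes 88 key states through eight values and then lets you drive the decoder by hand. It is a toy under loud assumptions: it is feed-forward rather than recurrent, it works on single chords rather than note sequences, the bottleneck is continuous, and the training data (eight major triads) and every function name are made up for illustration. It is not the Piano Genie model.

```python
import math
import random

N_KEYS = 88     # piano keys, as in the article
N_BUTTONS = 8   # width of the bottleneck

def chord(*keys):
    """88-element 0/1 vector with the given key indices pressed."""
    v = [0.0] * N_KEYS
    for k in keys:
        v[k] = 1.0
    return v

# Hypothetical training set: eight major triads on different roots.
DATA = [chord(r, r + 4, r + 7) for r in range(20, 60, 5)]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def encode(w_enc, x):
    """88 key states -> 8 bottleneck values in (-1, 1)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w_enc]

def decode(w_dec, z):
    """8 bottleneck values -> 88 key probabilities."""
    return [sigmoid(sum(w * zj for w, zj in zip(row, z))) for row in w_dec]

def loss(w_enc, w_dec, data):
    """Mean binary cross-entropy between each input and its reconstruction."""
    eps = 1e-9
    total = 0.0
    for x in data:
        y = decode(w_dec, encode(w_enc, x))
        total -= sum(xi * math.log(yi + eps) + (1 - xi) * math.log(1 - yi + eps)
                     for xi, yi in zip(x, y))
    return total / len(data)

def train_step(w_enc, w_dec, x, lr):
    """One stochastic gradient step; the target is the input itself."""
    z = encode(w_enc, x)
    y = decode(w_dec, z)
    d_out = [yi - xi for yi, xi in zip(y, x)]               # gradient at the sigmoid inputs
    d_z = [sum(d_out[k] * w_dec[k][j] for k in range(N_KEYS))
           for j in range(N_BUTTONS)]                       # back through the decoder
    d_hid = [dz * (1 - zj * zj) for dz, zj in zip(d_z, z)]  # back through tanh
    for k in range(N_KEYS):
        for j in range(N_BUTTONS):
            w_dec[k][j] -= lr * d_out[k] * z[j]
    for j in range(N_BUTTONS):
        for i in range(N_KEYS):
            w_enc[j][i] -= lr * d_hid[j] * x[i]

def train(data, epochs=60, lr=0.1, seed=0):
    """Train encoder and decoder together; return weights and loss before/after."""
    rng = random.Random(seed)
    w_enc = [[rng.uniform(-0.1, 0.1) for _ in range(N_KEYS)] for _ in range(N_BUTTONS)]
    w_dec = [[rng.uniform(-0.1, 0.1) for _ in range(N_BUTTONS)] for _ in range(N_KEYS)]
    before = loss(w_enc, w_dec, data)
    for _ in range(epochs):
        for x in data:
            train_step(w_enc, w_dec, x, lr)
    return w_enc, w_dec, before, loss(w_enc, w_dec, data)

def play(w_dec, buttons, top=3):
    """Drop the encoder: feed hand-chosen button values straight to the decoder."""
    probs = decode(w_dec, buttons)
    return sorted(range(N_KEYS), key=lambda k: probs[k], reverse=True)[:top]

w_enc, w_dec, before, after = train(DATA)
print(f"reconstruction loss: {before:.2f} -> {after:.2f}")
print("keys for one hand-pressed button:", play(w_dec, [1, -1, -1, -1, -1, -1, -1, -1]))
```

The point to notice is that `train` never sees a button press: the only supervision is the input chord itself, and the eight-value code emerges because everything has to pass through it. `play` is the "take away the first network" step, although which keys a given button pattern produces depends entirely on what the toy happened to learn.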

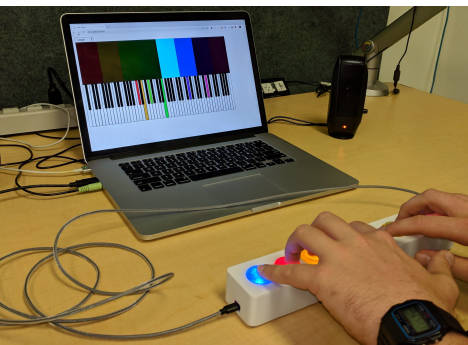

The training used 1400 performances from the Piano-e-Competition and hence it learned to reproduce high quality performances. The result is Piano Genie, and you can try it out for yourself at https://tensorflow.github.io/magenta-demos/piano-genie/. The model, using TensorFlow, is also available for you to play around with in a different way.

The paper concludes with:

"We have proposed Piano Genie, an intelligent controller which allows non-musicians to improvise on the piano. Piano Genie has an immediacy not shared by other work in this space; sound is produced the moment a player interacts with our software rather than requiring laborious configuration. Additionally, the player is kept in the improvisational loop as they respond to the generative procedure in real-time. We believe that the autoencoder framework is a promising approach for learning mappings between complex interfaces and simpler ones, and hope that this work encourages future investigation of this space."

The researchers also tried to find out what people thought of it by letting them try it and then asking how much they enjoyed it. In the main, users seemed to enjoy it and were impressed.

Personally, as a non-playing music lover, I found the interaction to be interesting but I didn't feel that I was really in control, simply guiding the overall direction higher or lower - perhaps it takes time. Try it. I'm particularly interested in any differences between users who can play the piano and those who can't.

It is clear that this needs some work. It is perhaps pointing the way to new ways to play and compose music, but I think it needs something more. It is clear that the musical space isn't 88-dimensional but it probably isn't 8-dimensional either - I wonder what would happen with 12 buttons?
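For a sense of scale, the following back-of-envelope sketch counts pressed/not-pressed combinations, treating buttons as Boolean in the same way the article treats piano keys. The helper name `states` is made up for illustration, and the count says nothing about how many of the combinations are musically useful.

```python
def states(n):
    """Number of pressed/not-pressed combinations for n independent keys or buttons."""
    return 2 ** n

piano = states(88)
for buttons in (8, 12):
    ratio = piano // states(buttons)
    print(f"{buttons} buttons: {states(buttons)} combinations, "
          f"{ratio:.3e} piano combinations per button combination")
```

Going from 8 to 12 buttons multiplies the number of button combinations by 16, from 256 to 4096, which is still a vanishingly small fraction of what the keyboard allows.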
More Information

Piano Genie https://tensorflow.github.io/magenta-demos/piano-genie/

Related Articles

AI Plays The Instrument From The Music

The World's Ugliest Music - More than Random

How the Music Flows from Place to Place


Last Updated ( Saturday, 20 October 2018 )