A Neural Network Reads Your Mind

Written by Mike James

Thursday, 11 January 2018

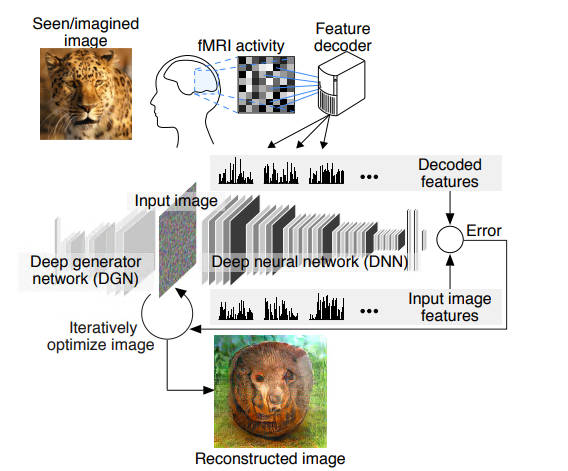

Is this AI or UI? The idea is simple enough: train a neural network to recognize what you are seeing from nothing but your brain activity. Is it even possible? The short answer seems to be yes. However, it is important to realize right from the start that this isn't based on EEG data, i.e. it isn't picking up electrical activity at the scalp and working out what you are thinking. It has long been argued that EEG is a very blunt instrument when it comes to working out what the brain is doing.

This study, by researchers at Kyoto University, uses functional MRI (fMRI) scans, which indicate how active each small region of the brain is, indirectly, through changes in blood oxygenation. Specifically, activity in the visual cortex is translated into the visual features computed by the layers of a deep neural network (DNN), and an image is then generated whose features match, so that the output resembles what the subject is seeing.
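How can brain activity be mapped onto a neural network's features at all? Here is a minimal sketch, assuming the mapping is learned as one linear decoder per feature from training pairs of (voxel pattern, feature vector). Plain ridge regression fitted by gradient descent on synthetic data stands in for whatever decoders the researchers actually used; the data generator, the sizes (8 "voxels", 3 features) and all function names are hypothetical. The held-out check mirrors, in miniature, the idea of testing on patterns the decoder never saw during training.

```python
import random


def make_toy_data(n_samples, hidden_w, noise, rng):
    """Hypothetical stand-in data (not real fMRI): random 'voxel' patterns X
    and 'DNN feature' vectors Y = hidden_w . x + Gaussian noise."""
    n_voxels = len(hidden_w[0])
    X = [[rng.gauss(0.0, 1.0) for _ in range(n_voxels)] for _ in range(n_samples)]
    Y = [[sum(w * xi for w, xi in zip(w_row, x)) + rng.gauss(0.0, noise)
          for w_row in hidden_w]
         for x in X]
    return X, Y


def train_ridge_decoder(X, Y, lam=0.01, lr=0.1, epochs=300):
    """Fit one linear decoder per feature by batch gradient descent on
    mean((w.x - y)^2) / 2 + (lam / 2) * ||w||^2, i.e. ridge regression."""
    n, n_voxels, n_features = len(X), len(X[0]), len(Y[0])
    W = [[0.0] * n_voxels for _ in range(n_features)]
    for _ in range(epochs):
        for k in range(n_features):
            grad = [lam * w for w in W[k]]  # gradient of the ridge penalty
            for x, y in zip(X, Y):
                err = sum(w * xi for w, xi in zip(W[k], x)) - y[k]
                for j in range(n_voxels):
                    grad[j] += err * x[j] / n  # gradient of the data term
            W[k] = [w - lr * g for w, g in zip(W[k], grad)]
    return W


def decode(W, x):
    """Predict a feature vector from one voxel pattern."""
    return [sum(w * xi for w, xi in zip(w_row, x)) for w_row in W]


rng = random.Random(0)
hidden_w = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(3)]  # 8 voxels -> 3 features
X_train, Y_train = make_toy_data(80, hidden_w, 0.1, rng)
X_test, Y_test = make_toy_data(20, hidden_w, 0.1, rng)  # never seen during training

W = train_ridge_decoder(X_train, Y_train)
errors = [(p - t) ** 2 for x, y in zip(X_test, Y_test) for p, t in zip(decode(W, x), y)]
test_mse = sum(errors) / len(errors)
print(f"held-out mean squared error: {test_mse:.4f}")
```

Because the toy data really is linear, the held-out error ends up close to the injected noise level; real fMRI data is far noisier, which is part of why the study's reconstructions are approximate rather than exact.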

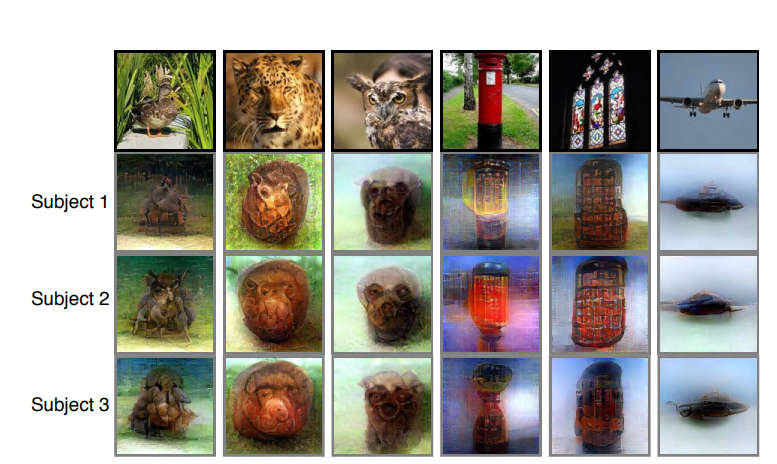

It seems unlikely, but it does work. The reconstruction is not an exact copy of the image, but it is close enough to see the connection.

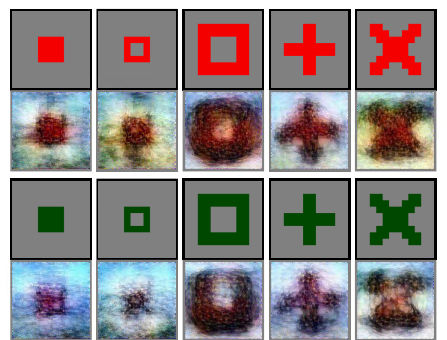

What is even more remarkable is that it works on artificial shapes that weren't part of the training set, which consisted only of natural images. This strongly suggests that the system has captured the general relationship between the visual input and the activation of the various areas of the visual cortex, rather than simply memorizing the natural images it was trained on.

Adding another neural network, a deep generator network (DGN), to constrain the output of the first network to look more like a natural image improves the results. It is surprising that this works even as well as it does, and there is plenty of scope for improvement, including feeding additional data into the input.

What could you use it for? As the researchers state:

"We have presented a novel approach to reconstruct perceptual and mental content from human brain activity combining visual features from the multiple layers of a DNN. We successfully reconstructed viewed natural images, especially when combined with a DGN. Reconstruction of artificial shapes was also successful, even though the reconstruction models used were trained only on natural images. The same method was applied to imagery to reveal rudimentary reconstructions of mental content. Our approach could provide a unique window into our internal world by translating brain activity into images via hierarchical visual features."

Yes, it could be used as a very special computer interface, but it could also be used to read subjects' minds, much like the situations and techniques imagined in sci-fi stories. One thing is very clear: there is a lot more information in fMRI scans than you might have expected.
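Why should adding a generator network help? A deliberately tiny analogy, under loudly stated assumptions: suppose image features come from a fixed linear map `F` (standing in for the DNN) and the decoded features are a target vector. Many different images share the same features, so optimizing the pixels directly can produce an image that matches the features but not the original. If the image is instead produced by a generator `G` from a latent code `z` (standing in for the DGN), and `z` is optimized, the result is confined to the generator's "natural" images. The 4-pixel images, the ramp-shaped "natural image" prior, `F`, `G` and `match_features` are all hypothetical; the real study uses nonlinear deep networks, not these linear maps.

```python
def matvec(M, v):
    """Matrix (list of rows) times vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def matmul(A, B):
    """Matrix times matrix, both given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(M):
    return [list(col) for col in zip(*M)]


def match_features(A, target, lr=0.05, steps=2000):
    """Plain gradient descent on 0.5 * ||A v - target||^2, starting from v = 0."""
    v = [0.0] * len(A[0])
    At = transpose(A)
    for _ in range(steps):
        residual = [p - t for p, t in zip(matvec(A, v), target)]
        v = [vi - lr * g for vi, g in zip(v, matvec(At, residual))]
    return v


# Hypothetical linear "feature extractor" for 4-pixel images (stands in for a DNN).
F = [[1, 0, 0, 1],   # feature 1: sum of the two end pixels
     [0, 1, -1, 0]]  # feature 2: difference of the two middle pixels
# Hypothetical linear "generator": its images are ramps z0 + z1 * i (stands in for a DGN).
G = [[1, 0], [1, 1], [1, 2], [1, 3]]

true_image = matvec(G, [2, 1])   # [2, 3, 4, 5]
decoded = matvec(F, true_image)  # pretend these features were decoded from brain activity

pixel_recon = match_features(F, decoded)   # optimize the pixels directly
z = match_features(matmul(F, G), decoded)  # optimize the generator's input instead
generator_recon = matvec(G, z)

print([round(p, 3) for p in pixel_recon])      # → [3.5, -0.5, 0.5, 3.5]
print([round(p, 3) for p in generator_recon])  # → [2.0, 3.0, 4.0, 5.0]
```

Both reconstructions reproduce the decoded features exactly, yet only the generator-constrained one recovers the original image: the features alone underdetermine the picture, and the generator's prior selects a plausible one. This is only an analogy for the effect the researchers report; their networks are nonlinear and the optimization is much harder.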

More Information: Deep image reconstruction from human brain activity, Guohua Shen, Tomoyasu Horikawa, Kei Majima and Yukiyasu Kamitani, Kyoto University


Last Updated (Thursday, 11 January 2018)