ONNX For AI Model Interoperability

Written by Alex Armstrong

Monday, 11 September 2017

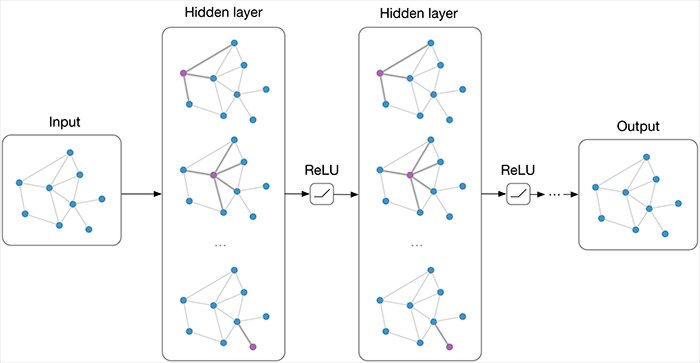

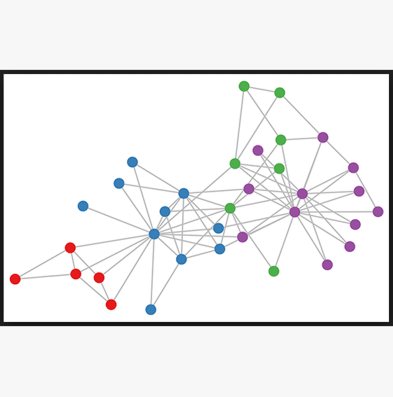

Unlikely as the collaboration seems, Microsoft and Facebook have co-developed the Open Neural Network Exchange (ONNX) format as an open source project. The GitHub Readme provides a clear and succinct statement of what the joint project is:

"Open Neural Network Exchange (ONNX) is the first step toward an open ecosystem that empowers AI developers to choose the right tools as their project evolves. ONNX provides an open source format for AI models. It defines an extensible computation graph model, as well as definitions of built-in operators and standard data types."
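To make the three ingredients in that statement concrete, here is a minimal Python sketch of a computation graph model, a small operator set and a table of data types. It is a toy illustration only: the names `Node`, `Graph`, `OPERATORS`, `DATA_TYPES` and `run` are hypothetical and are not taken from the ONNX specification or any ONNX library.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Node:
    """One step of the computation (hypothetical structure, not the ONNX schema)."""
    op_type: str        # name of an operator, e.g. "Add"
    inputs: List[str]   # names of the values this node consumes
    output: str         # name of the value this node produces


@dataclass
class Graph:
    inputs: Dict[str, str]  # input name -> data type name
    nodes: List[Node]       # listed in an order in which they can be evaluated
    output: str             # name of the value returned by the graph


# "Built-in operators": the set is extensible by adding entries to the table.
OPERATORS: Dict[str, Callable[..., float]] = {
    "Add": lambda a, b: a + b,
    "Mul": lambda a, b: a * b,
    "Relu": lambda a: max(a, 0.0),
}

# "Standard data types": a deliberately tiny table for this sketch.
DATA_TYPES = {"float": float, "int": int}


def run(graph: Graph, feeds: Dict[str, float]) -> float:
    """Evaluate the graph by walking its nodes in order."""
    values = {}
    for name, type_name in graph.inputs.items():
        values[name] = DATA_TYPES[type_name](feeds[name])
    for node in graph.nodes:
        args = [values[i] for i in node.inputs]
        values[node.output] = OPERATORS[node.op_type](*args)
    return values[graph.output]


# A one-neuron "network": y = relu(w * x + b)
graph = Graph(
    inputs={"x": "float", "w": "float", "b": "float"},
    nodes=[
        Node("Mul", ["w", "x"], "wx"),
        Node("Add", ["wx", "b"], "z"),
        Node("Relu", ["z"], "y"),
    ],
    output="y",
)

result = run(graph, {"x": 2.0, "w": 3.0, "b": -1.0})
```

Because the graph is plain data that names its operators rather than containing framework code, any tool that understands the same operator names and data types could in principle load and evaluate it, which is the point of a shared format.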

Facebook's Joaquin Quinonero Candela explains why this resource is required:

"When developing learning models, engineers and researchers have many AI frameworks to choose from. At the outset of a project, developers have to choose features and commit to a framework. Many times, the features chosen when experimenting during research and development are different than the features desired for shipping to production. Many organizations are left without a good way to bridge the gap between these operating modes and have resorted to a range of creative workarounds to cope, such as requiring researchers work in the production system or translating models by hand."

ONNX is therefore intended to help AI developers switch between frameworks, each of which has its own format for representing the computation graph of a neural network. According to Microsoft's Eric Boyd, the ONNX representation offers two key benefits:

Facebook's Caffe2 and PyTorch and Microsoft's Cognitive Toolkit (formerly CNTK) will be releasing support for ONNX in September, and Microsoft plans to contribute reference implementations, examples, tools, and a model zoo. There is already a PyTorch tutorial, "Transfering a model from PyTorch to Caffe2 and Mobile using ONNX".

ONNX works by tracing how a neural network generated using a specific framework executes at runtime and then using that information to create a generic computation graph that can be used in another framework.

Its success will depend on the range of AI frameworks that it can model. Will we see ONNX representations for Google's TensorFlow, also an open source project and probably the most widely used, or for Apache MXNet, which is Amazon's preferred AI framework?
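The tracing idea described above can be pictured with a small Python sketch. It is a toy under stated assumptions, not how any ONNX exporter is implemented: the names `Traced`, `trace`, `model` and `replay` are hypothetical, and only addition and multiplication are recorded.

```python
class Traced:
    """A placeholder value that records every operation applied to it."""

    def __init__(self, name, tape):
        self.name = name
        self.tape = tape  # shared list of recorded operations

    def _record(self, op, other):
        # Name the result after its position on the tape, then log the step.
        out = Traced(f"t{len(self.tape)}", self.tape)
        self.tape.append((op, self.name, other.name, out.name))
        return out

    def __add__(self, other):
        return self._record("Add", other)

    def __mul__(self, other):
        return self._record("Mul", other)


def trace(fn, input_names):
    """Run fn once on placeholder inputs and return the recorded graph."""
    tape = []
    inputs = [Traced(name, tape) for name in input_names]
    output = fn(*inputs)
    return {"inputs": list(input_names), "nodes": tape, "output": output.name}


def model(x, w, b):
    # Stands in for a network defined in the "source" framework.
    return w * x + b


def replay(graph, feeds):
    """Stands in for a second framework that only understands the graph."""
    ops = {"Add": lambda a, b: a + b, "Mul": lambda a, b: a * b}
    values = dict(feeds)
    for op, lhs, rhs, out in graph["nodes"]:
        values[out] = ops[op](values[lhs], values[rhs])
    return values[graph["output"]]


graph = trace(model, ["x", "w", "b"])
answer = replay(graph, {"x": 2, "w": 3, "b": 1})
```

Here `trace` executes `model` once and keeps only the list of operations it performed, while `replay` evaluates that list without any reference to the original function. One consequence of this design is that a trace captures only the operations that actually ran, so a model whose control flow depends on its input data would need more than a single trace to be represented faithfully.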


Last Updated (Monday, 11 September 2017)