AI Applied To Cookies

Written by Lucy Black

Sunday, 23 July 2017

Are you one of those people who, when eating an unfamiliar dish, attempts to work out its ingredients in order to re-create it at home? It's something programmers are particularly prone to, and now researchers at MIT's CSAIL are giving it a deep learning makeover. In a joint study with the Qatar Computing Research Institute (QCRI) and the Universitat Politecnica de Catalunya, the team trained an artificial intelligence system called Pic2Recipe to look at a photo of food, predict its ingredients and suggest similar recipes. You can see it in action in this video:

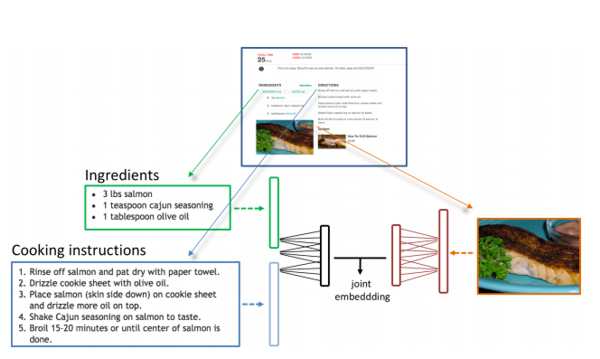

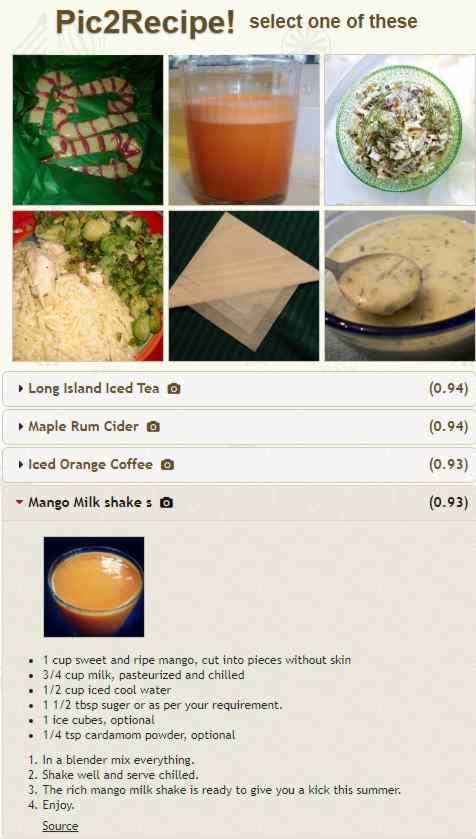

This may seem like the same sort of photo recognition exercise that has proliferated over the last couple of years, with apps for plant, bird and dog breed identification, and yes, there are many elements in common. However, according to MIT postdoc Yusuf Aytar, the reason Pic2Recipe is novel enough to merit a paper at the Computer Vision and Pattern Recognition conference in Honolulu is that:

“In computer vision, food is mostly neglected because we don’t have the large-scale datasets needed to make predictions.”

The rest of the team consists of MIT Professor Antonio Torralba, CSAIL graduate students Nick Hynes and Javier Marin, Amaia Salvador of the Universitat Politecnica de Catalunya in Spain, and scientist Ferda Ofli and research director Ingmar Weber of QCRI.

Their paper, Learning Cross-modal Embeddings for Cooking Recipes and Food Images, introduces Recipe1M, a new large-scale, structured corpus of over 1 million cooking recipes and 800,000 food images, which makes it the largest publicly available collection of recipe data. The aim isn't simply to make a huge cookery book but to train a neural network to retrieve recipes:

The profusion of online recipe collections with user-submitted photos presents the possibility of training machines to automatically understand food preparation by jointly analyzing ingredient lists, cooking instructions and food images. Far beyond applications solely in the realm of culinary arts, such a tool may also be applied to the plethora of food images shared on social media to achieve insight into the significance of food and its preparation on public health and cultural heritage.

Recipes were scraped from over two dozen popular cooking websites and processed through a pipeline that extracted relevant text from the raw HTML, downloaded linked images, and assembled the data into a compact JSON schema in which each datum was uniquely identified. The Recipe1M dataset has two layers. The first contains basic information including title, a list of ingredients, and a sequence of instructions for preparing the dish; all of these data are provided as free text. The second layer builds upon the first and includes any images with which the recipe is associated; these are provided as RGB in JPEG format. Recipe-image pairs enable an in-depth understanding of food from its ingredients to its preparation.

You can experiment with Pic2Recipe, courtesy of CSAIL, using its supplied photos to explore recipes. Alternatively, you can upload your own image. Even with its large dataset it has a lot of gaps in its repertoire, but it is fairly good at recognizing baked goods.
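The paper's title points to the core idea behind the demo: images and recipes are mapped into a shared vector space so that a photo can be matched against recipes. The following is a minimal sketch of that retrieval step only, not the Pic2Recipe code. It assumes the embeddings have already been produced by trained image and recipe encoders and that cosine similarity is the ranking measure; the function names `cosine_similarity` and `retrieve_recipes`, the recipe ids and the toy three-dimensional vectors are all hypothetical.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as unrelated to everything
    return dot / (norm_a * norm_b)


def retrieve_recipes(image_embedding: List[float],
                     recipe_embeddings: Dict[str, List[float]],
                     k: int = 3) -> List[Tuple[str, float]]:
    """Rank recipes by cosine similarity to an image embedding; return the top k."""
    scored = [(recipe_id, cosine_similarity(image_embedding, emb))
              for recipe_id, emb in recipe_embeddings.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical embeddings standing in for the outputs of trained encoders
# (real models use far more than three dimensions).
recipes = {
    "chocolate-chip-cookies": [0.9, 0.1, 0.0],
    "oatmeal-cookies": [0.8, 0.3, 0.1],
    "tomato-soup": [0.0, 0.2, 0.9],
}
photo = [0.9, 0.1, 0.05]  # hypothetical embedding of an uploaded cookie photo

for recipe_id, score in retrieve_recipes(photo, recipes, k=2):
    print(f"{recipe_id}: {score:.3f}")
```

In a system the size of Recipe1M the recipe embeddings would more likely be precomputed once and searched with an approximate nearest-neighbour index; the linear scan here simply keeps the idea visible.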

If this app gives you food for thought, there is probably a lot more that can be done. The code to train and evaluate the models from the paper is on GitHub, where it has already been forked, and you can download the Recipe1M dataset after registering and agreeing to its terms and conditions.
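Because each datum in Recipe1M carries a unique identifier, the text layer and the image layer can be recombined after download. The sketch below assumes each layer is a JSON array of objects sharing an `id` field, with ingredients and instructions stored as lists of text items and images listed under the same id. These field names, the helper `join_layers` and the tiny inline sample data are illustrative assumptions, so check them against the files you actually download.

```python
import json
from typing import Any, Dict, List

# Hypothetical miniature stand-ins for the two layers. The field names
# (id, title, ingredients, instructions, images) are assumptions modelled
# on the article's description, not a verified copy of the Recipe1M schema.
LAYER1_JSON = """[
  {"id": "r001", "title": "Chocolate chip cookies",
   "ingredients": [{"text": "flour"}, {"text": "butter"}, {"text": "chocolate chips"}],
   "instructions": [{"text": "Cream the butter."}, {"text": "Fold in the chips and bake."}]},
  {"id": "r002", "title": "Tomato soup",
   "ingredients": [{"text": "tomatoes"}, {"text": "onion"}],
   "instructions": [{"text": "Simmer and blend."}]}
]"""

LAYER2_JSON = """[
  {"id": "r001", "images": [{"id": "a1b2c3.jpg"}]}
]"""


def join_layers(layer1: List[Dict[str, Any]],
                layer2: List[Dict[str, Any]]) -> Dict[str, Dict[str, Any]]:
    """Attach layer-2 images to layer-1 recipes using the shared unique id."""
    images_by_id = {entry["id"]: entry.get("images", []) for entry in layer2}
    joined = {}
    for recipe in layer1:
        record = dict(recipe)  # shallow copy so the input list is left unchanged
        # Recipes with no associated photo simply get an empty image list.
        record["images"] = images_by_id.get(recipe["id"], [])
        joined[recipe["id"]] = record
    return joined


recipes = join_layers(json.loads(LAYER1_JSON), json.loads(LAYER2_JSON))
for recipe_id, recipe in recipes.items():
    ingredients = ", ".join(item["text"] for item in recipe["ingredients"])
    print(f"{recipe_id} {recipe['title']!r}: {ingredients} "
          f"({len(recipe['images'])} image(s))")
```

Indexing the image layer by id first keeps the join to a single pass over each layer, which matters when the text layer holds over a million recipes.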

More Information

Learning Cross-modal Embeddings for Cooking Recipes and Food Images - Overview

Learning Cross-modal Embeddings for Cooking Recipes and Food Images (pdf)

Related Articles

iNaturalist Launches Deep Learning-Based Identification App

What's That Dog - An App For Breed Recognition


Last Updated (Sunday, 23 July 2017)